Hi all,

If int8 profiling is taking a very long time for you (typically on large models or datasets), e.g. you see progress crawl like this:

Profiling int8 model...

Profiling 3% done

Profiling 7% done

Profiling 12% done

Profiling 16% done

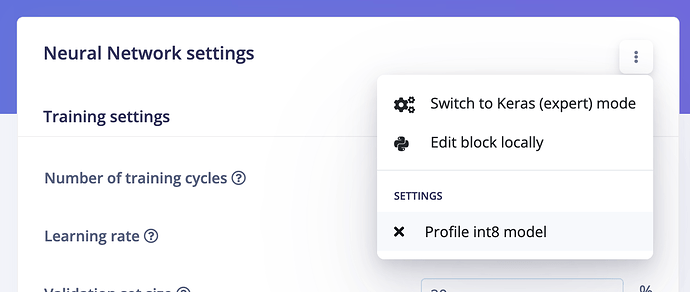

You can now disable int8 profiling by clicking the three dots on any neural network page and disabling the option:

Note that this also disables int8 deployments. On Linux and on some accelerators (e.g. Syntiant) we use the f32 model as input anyway, so there’s no harm there.

int8 profiling is so slow because there’s no hardware acceleration for quantized models in TensorFlow Lite on x86 (XNNPACK promises some, but we haven’t seen it on the models we’ve tried), which leads to 50-100x slower inference than f32 (!). Sometime in the next few months we’ll switch profiling to Arm machines, which have accelerated int8 kernels.
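If you want to check the slowdown on your own x86 machine, here is a rough sketch for timing the two variants of a model locally. It is not how our profiler works internally. It assumes you have exported both an f32 and an int8 `.tflite` file and that `tensorflow` and `numpy` are installed. The helper names (`median_latency_ms`, `tflite_invoker`, `slowdown`) and the file names in the usage comment are made up for illustration.

```python
import time
from statistics import median


def median_latency_ms(invoke, runs=20, warmup=3):
    """Median wall-clock time of one invoke() call, in milliseconds."""
    for _ in range(warmup):
        invoke()  # warm-up runs are not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        invoke()
        samples.append((time.perf_counter() - start) * 1000.0)
    return median(samples)


def tflite_invoker(model_path):
    """Return a zero-argument callable that runs one inference on an all-zeros input."""
    # Imported lazily so the timing helper above works without TensorFlow.
    import numpy as np
    import tensorflow as tf

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    data = np.zeros(inp["shape"], dtype=inp["dtype"])

    def invoke():
        interpreter.set_tensor(inp["index"], data)
        interpreter.invoke()

    return invoke


def slowdown(f32_ms, int8_ms):
    """How many times slower the int8 model is than the f32 model."""
    return int8_ms / f32_ms


# Usage (hypothetical file names, replace with your own exports):
# f32_ms = median_latency_ms(tflite_invoker("model_f32.tflite"))
# int8_ms = median_latency_ms(tflite_invoker("model_int8.tflite"))
# print(f"int8 is {slowdown(f32_ms, int8_ms):.1f}x slower than f32")
```

The numbers you get will depend on your CPU, TensorFlow version and model architecture, so treat the result as a ballpark figure only.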

Let me know if you have any questions!