Hi,

I tested Object Detection models on RPI and Jetson Nano boards.

However, inference time differed by roughly a factor of two.

RPI v4 : Quad core Cortex-A72 (ARM v8) 64-bit SoC

JetsonNano : Quad-core ARM® A57 CPU

As I understand it, both boards have strong CPU cores.

On the RPi, inference took 450 to 490 ms.

On the Jetson Nano, it took 220 to 250 ms.

I wonder what the reason is.

Regards

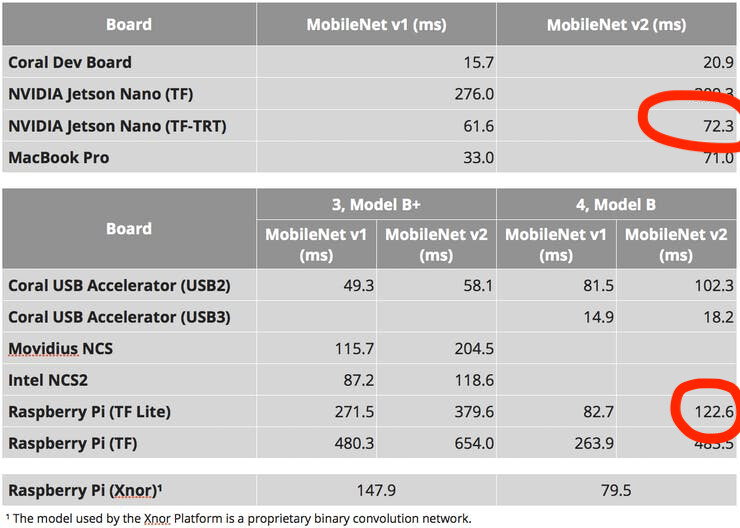

Not entirely sure why, but you can see the same from other benchmarks. E.g. this is from Alasdair Allan:

The Jetson Nano is clocked a bit higher (1.95GHz vs 1.5GHz) and has a big heat sink, while the RPi is fanless, so it throttles sooner.
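If you want to check whether throttling is part of the gap, one option is to log the CPU clock and temperature while inference is running. Below is a minimal sketch, assuming both boards expose the usual Linux sysfs files (`scaling_cur_freq` and `thermal_zone0/temp`); the paths and the helper names `read_int` and `cpu_status` are my own assumptions, not something from the benchmark above.

```python
from pathlib import Path

# Common Linux sysfs locations. Assumed to exist on both boards; adjust if not.
FREQ_PATH = Path("/sys/devices/system/cpu/cpu0/cpufreq/scaling_cur_freq")
TEMP_PATH = Path("/sys/class/thermal/thermal_zone0/temp")


def read_int(path):
    """Return the integer stored in a sysfs-style file, or None if unreadable."""
    try:
        return int(Path(path).read_text().strip())
    except (OSError, ValueError):
        return None


def cpu_status(freq_path=FREQ_PATH, temp_path=TEMP_PATH):
    """Return (clock in MHz, temperature in deg C); either may be None."""
    freq_khz = read_int(freq_path)      # the kernel reports kHz
    temp_milli_c = read_int(temp_path)  # the kernel reports millidegrees C
    freq_mhz = freq_khz / 1000 if freq_khz is not None else None
    temp_c = temp_milli_c / 1000 if temp_milli_c is not None else None
    return freq_mhz, temp_c


if __name__ == "__main__":
    # Run this in a loop alongside the model to see whether the clock drops.
    print(cpu_status())
```

If the reported clock falls as the temperature climbs during a long run, throttling is likely contributing to the slower numbers.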

Note that we're still not running at full performance on the Jetson Nano for object detection models, because they cannot use the GPU (image classification models can), so expect to see even bigger improvements there.


@janjongboom Thanks, that answers my question.