So, I trained my model on the website for pattern recognition. After training, I exported the model to my computer. I followed the tutorials step by step as described on the website. On an RPi 4, I end up with an inference time of 98 ms. However, the website claimed that on an RPi 4 we would achieve an inference time of 3 ms.

Could you help me with this discrepancy? Also, what else can be changed in the code to achieve a higher inference speed on my RPi 4?

Hi @prarthana,

Could you share your project ID so we can take a look at the estimated inference speeds?

Sure, here is the project ID: 197629

Also, I wanted to add to my description that I first downloaded the trained model to my laptop, where I got around 120-130 Hz. Then I transferred the folder to the RPi 4 over SSH. On the RPi 4, I built it again and got an inference time of 98 ms, i.e. roughly 10 Hz.
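
For anyone comparing these numbers, here is a minimal sketch of the latency-to-rate conversion, assuming one inference runs at a time with no other overhead. The function names are illustrative only and are not part of any SDK.

```python
def latency_ms_to_hz(latency_ms: float) -> float:
    """Convert a per-inference latency in milliseconds to inferences per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def hz_to_latency_ms(rate_hz: float) -> float:
    """Convert an inference rate in Hz to the per-inference latency in milliseconds."""
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    return 1000.0 / rate_hz


if __name__ == "__main__":
    # Figures quoted in this thread.
    print(f"RPi 4 measured: 98 ms -> {latency_ms_to_hz(98):.1f} Hz")
    print(f"Claimed:         3 ms -> {latency_ms_to_hz(3):.1f} Hz")
    for rate in (120, 130):
        print(f"Laptop: {rate} Hz -> {hz_to_latency_ms(rate):.1f} ms")
```

So the laptop figure corresponds to roughly 8 ms per inference, and the 3 ms figure would mean more than 300 Hz.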

Hi @prarthana,

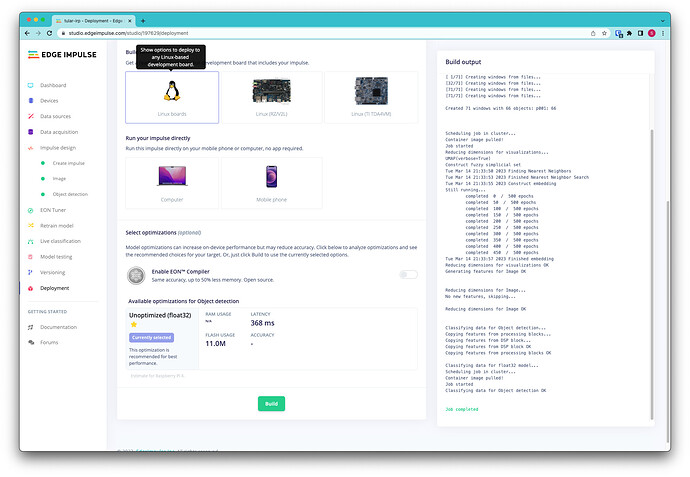

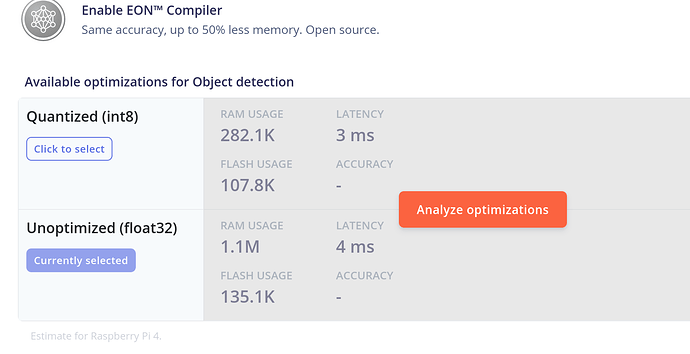

Where do you see the estimated inference time of 3 ms? When I run the analyzer on the deployment page of your project, it predicts an inference time of 368 ms for the RPi 4.