Question/Issue:

Hello! I have a problem.

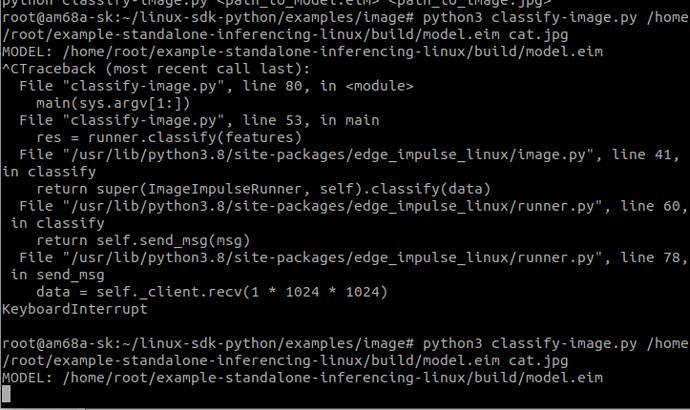

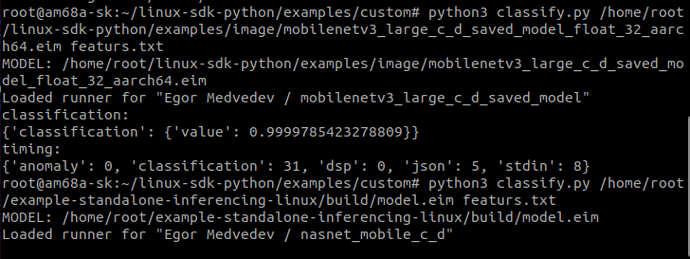

I’m using an SK AM68A board from Texas Instruments (PSDK version 08_06_00_10). On the Edge Impulse website I can export the model in the TIDL-RT for AM68A format. To compile the TIDL-RT export into an executable file, I follow the instructions from your GitHub repository edgeimpulse/example-standalone-inferencing-linux ("Builds and runs an exported impulse locally (Linux)"). For model inference I use the Python SDK, edgeimpulse/linux-sdk-python ("Use Edge Impulse for Linux models from Python"). When I try to print the result, I get nothing: after the line `MODEL: /home/root/example-standalone-inferencing-linux/build/model.eim` appears, nothing else happens (see the attached screenshots). In this case I used NASNetMobile for image classification.

Project ID:

339533

Context/Use case: