Hi,

Previously I was talking about this here.

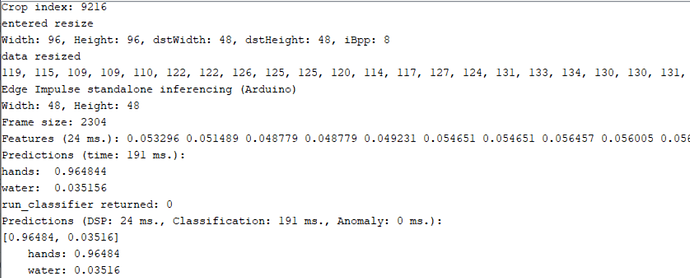

On my Arduino Nano 33 BLE Sense, the static_buffer example works well. I have tried to convert the sketch into one that continuously captures an image and classifies it, but on device, the results are different from the results in studio. The link below classifies the image correctly, but the screenshot below shows the same image being classified incorrectly. Have I written the sketch incorrectly?

https://studio.edgeimpulse.com/studio/24610/classification#load-sample-21235873

/* Edge Impulse Arduino examples

Copyright (c) 2021 EdgeImpulse Inc.

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in

all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

SOFTWARE.

*/

/* Includes ---------------------------------------------------------------- */

#include <faucet-water-hand-classification_inference.h>

#include <Arduino_OV767X.h>

const int myBytesPerPixel = 1; // Grayscale = 1 Byte

// raw frame buffer from the camera

#define FRAME_BUFFER_COLS 160

#define FRAME_BUFFER_ROWS 120

uint8_t frame_buffer[FRAME_BUFFER_COLS * FRAME_BUFFER_ROWS] = { 0 };

// cutout that we want (this does not do a resize, which would also be an option, but you'll need some resize lib for that)

#define CUTOUT_COLS EI_CLASSIFIER_INPUT_WIDTH * 2 //96

#define CUTOUT_ROWS EI_CLASSIFIER_INPUT_HEIGHT * 2

uint8_t cropped_image[CUTOUT_COLS * CUTOUT_ROWS] = { 0 };

// resized cutout from frame_buffer

#define RESIZE_COLS EI_CLASSIFIER_INPUT_WIDTH //48

#define RESIZE_ROWS EI_CLASSIFIER_INPUT_HEIGHT

uint8_t resized_image[RESIZE_COLS * RESIZE_ROWS] = { 0 };

const int cutout_row_start = 0;

const int cutout_col_start = 0;

int counter = 0;

void myCrop() {

int index = 0;

// Serial.println("entered crop");

// loop through rows and columns grabbing wanted bytes

for (int y = cutout_row_start; y < cutout_row_start + CUTOUT_ROWS; y++) {

for (int x = (cutout_col_start * myBytesPerPixel); x < ((cutout_col_start + CUTOUT_COLS) * myBytesPerPixel); x++) {

cropped_image[index++] = frame_buffer[(y * FRAME_BUFFER_COLS * myBytesPerPixel) + x];

}

}

// Serial.println("data cropped");

Serial.printf("Crop index: %d\n", index);

}

/**

This function is called by the classifier to get data

We don't want to have a separate copy of the cutout here, so we'll read from the frame buffer dynamically

*/

int cutout_get_data(size_t offset, size_t length, float *out_ptr) {

// so offset and length naturally operate on the *cutout*, so we need to cut it out from the real framebuffer

//size_t bytes_left = length;

//size_t out_ptr_ix = 0;

//length = 2304 = 48^2

//offset - ignore?

//crop frame_buffer - first 96x96 pixels

//resize frame_buffer - 48x48

//out_ptr - put resized data in here

if (counter == 3) {

for (int i = 0; i < RESIZE_COLS * RESIZE_ROWS; i++) {

out_ptr[i] = resized_image[i];

//Serial.print(out_ptr[i]);

if (i != RESIZE_COLS * RESIZE_ROWS - 1) {

//Serial.print(", ");

}

}

//Serial.println();

counter = 0;

}

counter++;

// and done!

return 0;

}

/**

@brief Arduino setup function

*/

void setup()

{

// put your setup code here, to run once:

Serial.begin(115200);

while (!Serial);

Serial.println("Edge Impulse Inferencing Demo");

if (!Camera.begin(QQVGA, GRAYSCALE, 1)) { //Resolution, Byte Format, FPS 1 or 5

Serial.println("Failed to initialize camera!");

while (1);

}

Serial.println(EI_CLASSIFIER_TFLITE_ARENA_SIZE);

Camera.verticalFlip();

Camera.autoExposure();

Camera.autoGain();

delay(1000);

//bytesPerFrame = Camera.width() * Camera.height() * Camera.bytesPerPixel();

}

/**

@brief Arduino main function

*/

void loop()

{

ei_impulse_result_t result = { 0 };

Camera.readFrame((uint8_t*)frame_buffer);

myCrop();

resizeImage(CUTOUT_COLS, CUTOUT_ROWS, cropped_image, RESIZE_COLS, RESIZE_ROWS, resized_image, myBytesPerPixel * 8);

for (int i = 0; i < 2304; i++) {

Serial.print(resized_image[i]);

if (i != 2303) {

Serial.print(", ");

}

}

Serial.println();

// set up a signal to read from the frame buffer

signal_t signal;

signal.total_length = EI_CLASSIFIER_INPUT_WIDTH * EI_CLASSIFIER_INPUT_HEIGHT;

signal.get_data = &cutout_get_data;

ei_printf("Edge Impulse standalone inferencing (Arduino)\n");

Serial.printf("Width: %d, Height: %d\n", EI_CLASSIFIER_INPUT_WIDTH, EI_CLASSIFIER_INPUT_HEIGHT);

Serial.printf("Frame size: %d\n", EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE);

// if (sizeof(frame_buffer) / sizeof(uint16_t) != EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE) {

// ei_printf("The size of your 'features' array is not correct. Expected %lu items, but had %lu\n",

// EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE, sizeof(frame_buffer) / sizeof(uint16_t));

// delay(1000);

// return;

// }

// invoke the impulse

EI_IMPULSE_ERROR res = run_classifier(&signal, &result, true /* debug */);

ei_printf("run_classifier returned: %d\n", res);

if (res != 0) return;

// print the predictions

ei_printf("Predictions ");

ei_printf("(DSP: %d ms., Classification: %d ms., Anomaly: %d ms.)",

result.timing.dsp, result.timing.classification, result.timing.anomaly);

ei_printf(": \n");

ei_printf("[");

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {

ei_printf("%.5f", result.classification[ix].value);

#if EI_CLASSIFIER_HAS_ANOMALY == 1

ei_printf(", ");

#else

if (ix != EI_CLASSIFIER_LABEL_COUNT - 1) {

ei_printf(", ");

}

#endif

}

#if EI_CLASSIFIER_HAS_ANOMALY == 1

ei_printf("%.3f", result.anomaly);

#endif

ei_printf("]\n");

// human-readable predictions

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {

ei_printf(" %s: %.5f\n", result.classification[ix].label, result.classification[ix].value);

}

#if EI_CLASSIFIER_HAS_ANOMALY == 1

ei_printf(" anomaly score: %.3f\n", result.anomaly);

#endif

delay(1000);

}

/**

@brief Printf function uses vsnprintf and output using Arduino Serial

@param[in] format Variable argument list

*/

void ei_printf(const char *format, ...) {

static char print_buf[1024] = { 0 };

va_list args;

va_start(args, format);

int r = vsnprintf(print_buf, sizeof(print_buf), format, args);

va_end(args);

if (r > 0) {

Serial.write(print_buf);

}

}

//---------------------------------------------------------------------------------------------------------------------

// This include file works in the Arduino environment

// to define the Cortex-M intrinsics

#ifdef __ARM_FEATURE_SIMD32

#include <device.h>

#endif

// This needs to be < 16 or it won't fit. Cortex-M4 only has SIMD for signed multiplies

#define FRAC_BITS 14

#define FRAC_VAL (1<<FRAC_BITS)

#define FRAC_MASK (FRAC_VAL - 1)

//

// Resize

//

// Assumes that the destination buffer is dword-aligned

// Can be used to resize the image smaller or larger

// If resizing much smaller than 1/3 size, then a more rubust algorithm should average all of the pixels

// This algorithm uses bilinear interpolation - averages a 2x2 region to generate each new pixel

//

// Optimized for 32-bit MCUs

// supports 8 and 16-bit pixels

void resizeImage(int srcWidth, int srcHeight, uint8_t *srcImage, int dstWidth, int dstHeight, uint8_t *dstImage, int iBpp)

{

uint32_t src_x_accum, src_y_accum; // accumulators and fractions for scaling the image

uint32_t x_frac, nx_frac, y_frac, ny_frac;

int x, y, ty, tx;

Serial.println("entered resize");

Serial.printf("Width: %d, Height: %d, dstWidth: %d, dstHeight: %d, iBpp: %d\n", srcWidth, srcHeight, dstWidth, dstHeight, iBpp);

if (iBpp != 8 && iBpp != 16)

return;

src_y_accum = FRAC_VAL / 2; // start at 1/2 pixel in to account for integer downsampling which might miss pixels

const uint32_t src_x_frac = (srcWidth * FRAC_VAL) / dstWidth;

const uint32_t src_y_frac = (srcHeight * FRAC_VAL) / dstHeight;

const uint32_t r_mask = 0xf800f800;

const uint32_t g_mask = 0x07e007e0;

const uint32_t b_mask = 0x001f001f;

uint8_t *s, *d;

uint16_t *s16, *d16;

uint32_t x_frac2, y_frac2; // for 16-bit SIMD

for (y = 0; y < dstHeight; y++) {

ty = src_y_accum >> FRAC_BITS; // src y

y_frac = src_y_accum & FRAC_MASK;

src_y_accum += src_y_frac;

ny_frac = FRAC_VAL - y_frac; // y fraction and 1.0 - y fraction

y_frac2 = ny_frac | (y_frac << 16); // for M4/M4 SIMD

s = &srcImage[ty * srcWidth];

s16 = (uint16_t *)&srcImage[ty * srcWidth * 2];

d = &dstImage[y * dstWidth];

d16 = (uint16_t *)&dstImage[y * dstWidth * 2];

src_x_accum = FRAC_VAL / 2; // start at 1/2 pixel in to account for integer downsampling which might miss pixels

if (iBpp == 8) {

for (x = 0; x < dstWidth; x++) {

uint32_t tx, p00, p01, p10, p11;

tx = src_x_accum >> FRAC_BITS;

x_frac = src_x_accum & FRAC_MASK;

nx_frac = FRAC_VAL - x_frac; // x fraction and 1.0 - x fraction

x_frac2 = nx_frac | (x_frac << 16);

src_x_accum += src_x_frac;

p00 = s[tx]; p10 = s[tx + 1];

p01 = s[tx + srcWidth]; p11 = s[tx + srcWidth + 1];

#ifdef __ARM_FEATURE_SIMD32

p00 = __SMLAD(p00 | (p10 << 16), x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // top line

p01 = __SMLAD(p01 | (p11 << 16), x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // bottom line

p00 = __SMLAD(p00 | (p01 << 16), y_frac2, FRAC_VAL / 2) >> FRAC_BITS; // combine

#else // generic C code

p00 = ((p00 * nx_frac) + (p10 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // top line

p01 = ((p01 * nx_frac) + (p11 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // bottom line

p00 = ((p00 * ny_frac) + (p01 * y_frac) + FRAC_VAL / 2) >> FRAC_BITS; // combine top + bottom

#endif // Cortex-M4/M7

*d++ = (uint8_t)p00; // store new pixel

} // for x

} // 8-bpp

else

{ // RGB565

for (x = 0; x < dstWidth; x++) {

uint32_t tx, p00, p01, p10, p11;

uint32_t r00, r01, r10, r11, g00, g01, g10, g11, b00, b01, b10, b11;

tx = src_x_accum >> FRAC_BITS;

x_frac = src_x_accum & FRAC_MASK;

nx_frac = FRAC_VAL - x_frac; // x fraction and 1.0 - x fraction

x_frac2 = nx_frac | (x_frac << 16);

src_x_accum += src_x_frac;

p00 = __builtin_bswap16(s16[tx]); p10 = __builtin_bswap16(s16[tx + 1]);

p01 = __builtin_bswap16(s16[tx + srcWidth]); p11 = __builtin_bswap16(s16[tx + srcWidth + 1]);

#ifdef __ARM_FEATURE_SIMD32

{

p00 |= (p10 << 16);

p01 |= (p11 << 16);

r00 = (p00 & r_mask) >> 1; g00 = p00 & g_mask; b00 = p00 & b_mask;

r01 = (p01 & r_mask) >> 1; g01 = p01 & g_mask; b01 = p01 & b_mask;

r00 = __SMLAD(r00, x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // top line

r01 = __SMLAD(r01, x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // bottom line

r00 = __SMLAD(r00 | (r01 << 16), y_frac2, FRAC_VAL / 2) >> FRAC_BITS; // combine

g00 = __SMLAD(g00, x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // top line

g01 = __SMLAD(g01, x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // bottom line

g00 = __SMLAD(g00 | (g01 << 16), y_frac2, FRAC_VAL / 2) >> FRAC_BITS; // combine

b00 = __SMLAD(b00, x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // top line

b01 = __SMLAD(b01, x_frac2, FRAC_VAL / 2) >> FRAC_BITS; // bottom line

b00 = __SMLAD(b00 | (b01 << 16), y_frac2, FRAC_VAL / 2) >> FRAC_BITS; // combine

}

#else // generic C code

{

r00 = (p00 & r_mask) >> 1; g00 = p00 & g_mask; b00 = p00 & b_mask;

r10 = (p10 & r_mask) >> 1; g10 = p10 & g_mask; b10 = p10 & b_mask;

r01 = (p01 & r_mask) >> 1; g01 = p01 & g_mask; b01 = p01 & b_mask;

r11 = (p11 & r_mask) >> 1; g11 = p11 & g_mask; b11 = p11 & b_mask;

r00 = ((r00 * nx_frac) + (r10 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // top line

r01 = ((r01 * nx_frac) + (r11 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // bottom line

r00 = ((r00 * ny_frac) + (r01 * y_frac) + FRAC_VAL / 2) >> FRAC_BITS; // combine top + bottom

g00 = ((g00 * nx_frac) + (g10 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // top line

g01 = ((g01 * nx_frac) + (g11 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // bottom line

g00 = ((g00 * ny_frac) + (g01 * y_frac) + FRAC_VAL / 2) >> FRAC_BITS; // combine top + bottom

b00 = ((b00 * nx_frac) + (b10 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // top line

b01 = ((b01 * nx_frac) + (b11 * x_frac) + FRAC_VAL / 2) >> FRAC_BITS; // bottom line

b00 = ((b00 * ny_frac) + (b01 * y_frac) + FRAC_VAL / 2) >> FRAC_BITS; // combine top + bottom

}

#endif // Cortex-M4/M7

r00 = (r00 << 1) & r_mask;

g00 = g00 & g_mask;

b00 = b00 & b_mask;

p00 = (r00 | g00 | b00); // re-combine color components

*d16++ = (uint16_t)__builtin_bswap16(p00); // store new pixel

} // for x

} // 16-bpp

} // for y

Serial.println("data resized");

} /* resizeImage() */

//

Thanks.