Question/Issue:

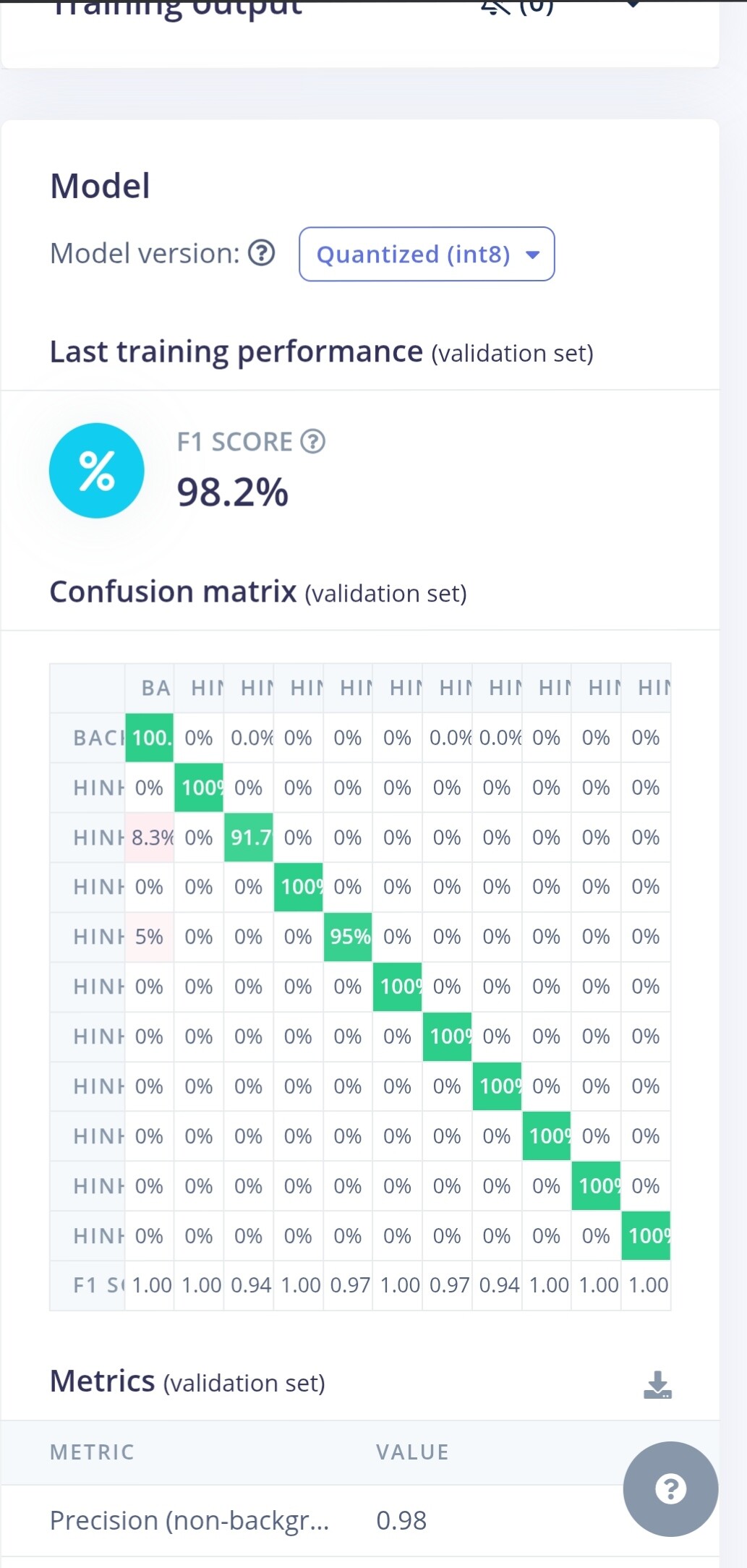

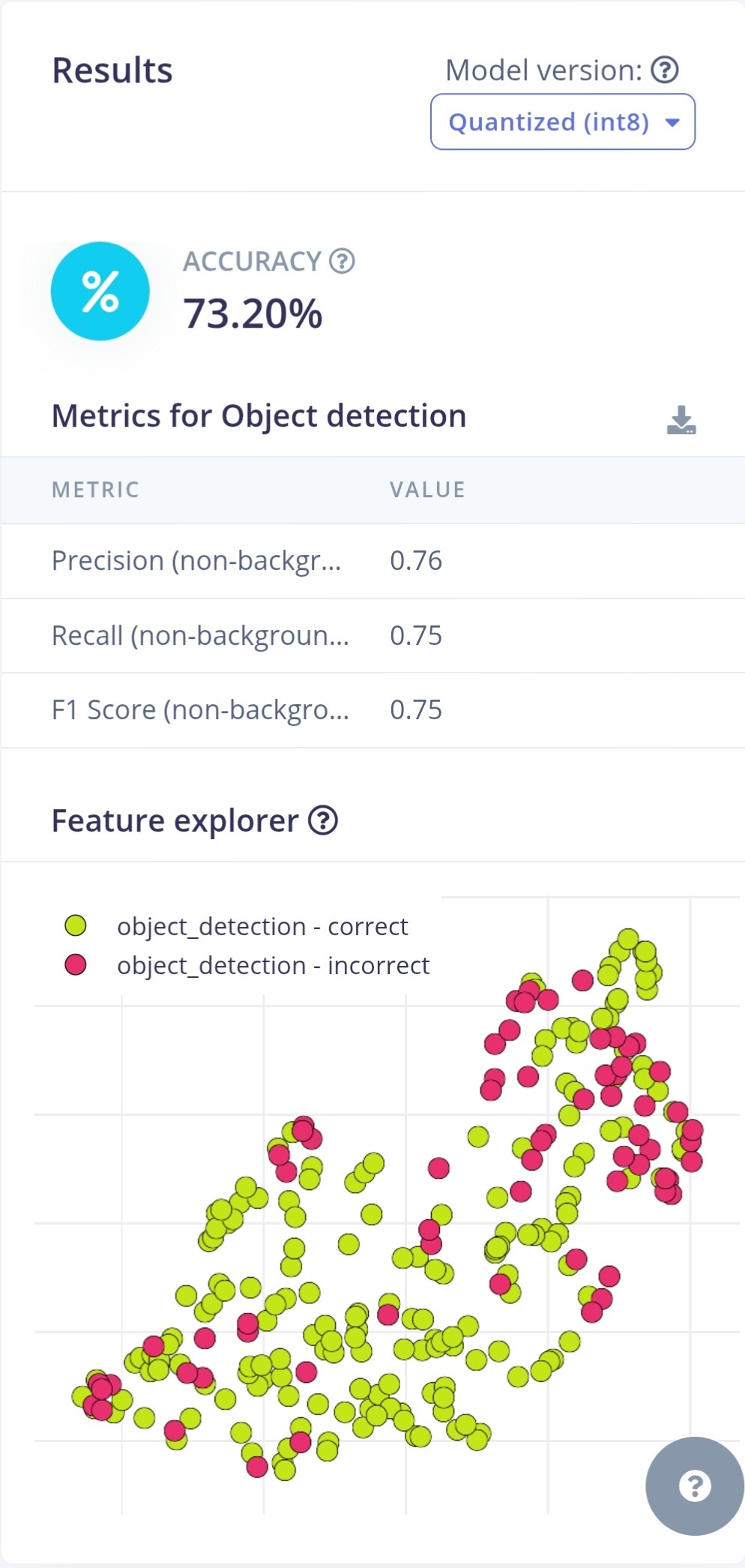

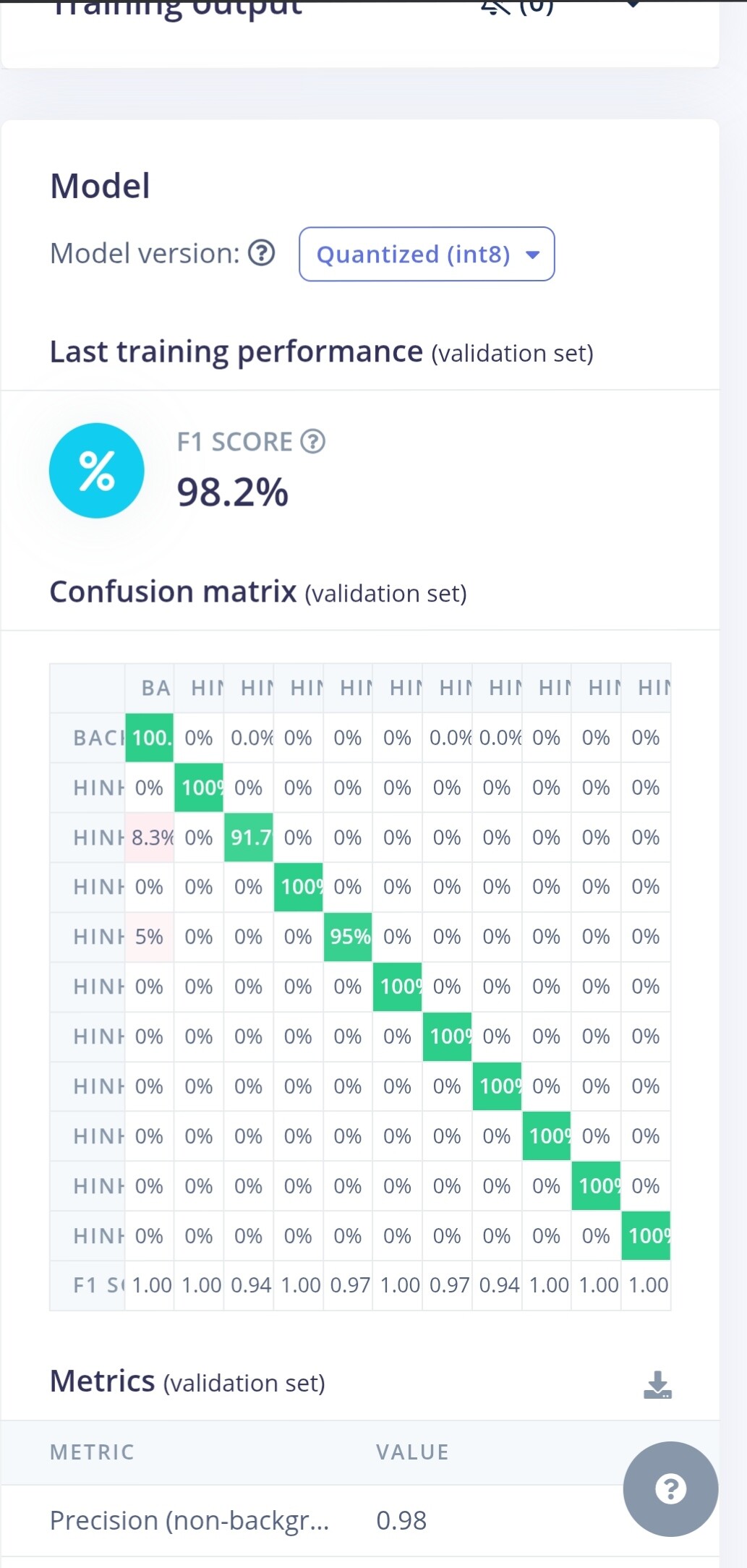

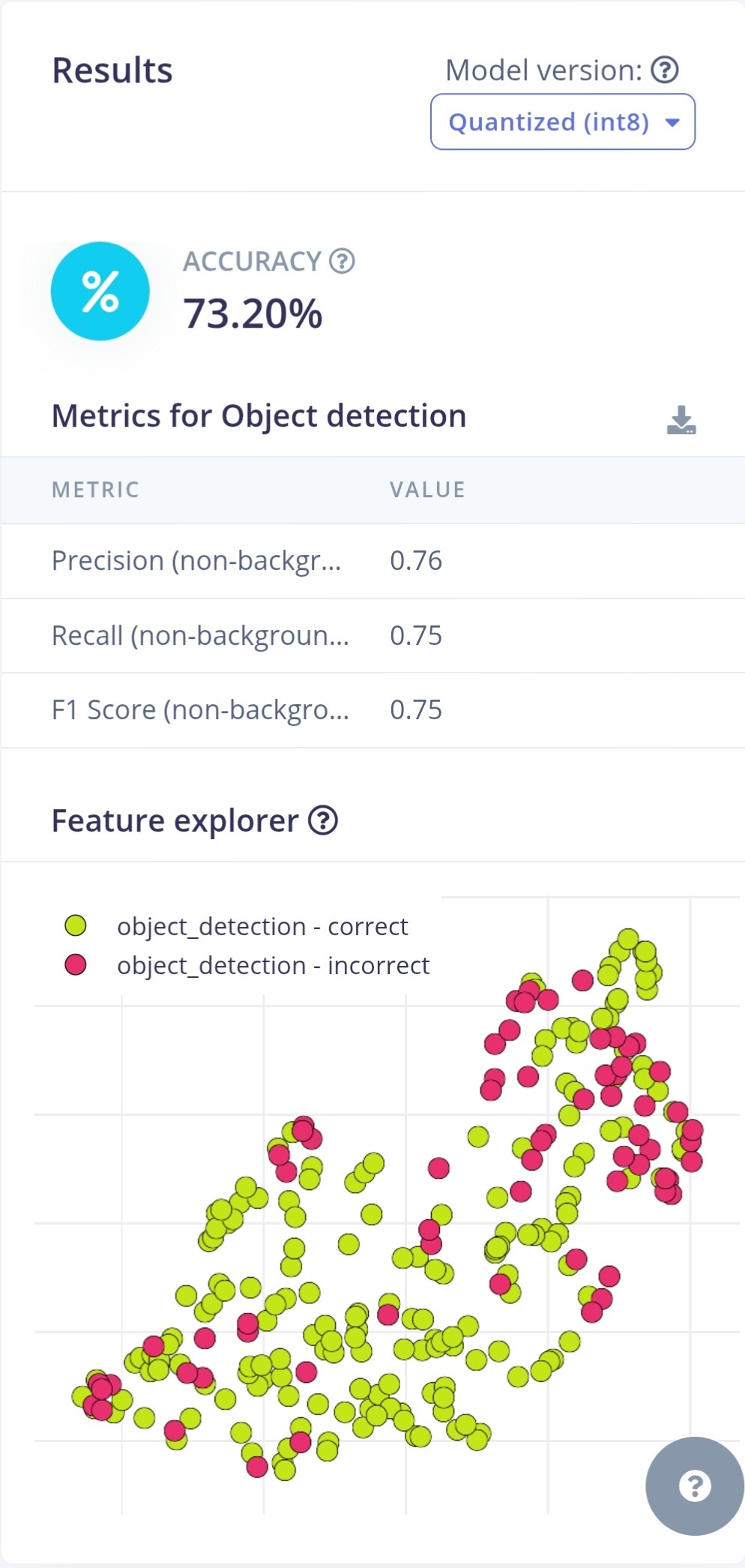

When I trained the model using object detection with bounding boxes, it gave good accuracy. However, when I ran model testing, the accuracy was poor.

Project ID:

https://studio.edgeimpulse.com/studio/764300

(Impulse “5”)

Steps Taken:

- I trained the model

- It gave good F1 score

- Model testing gave low accuracy

Expected Outcome:

Model testing should give good accuracy.

Actual Outcome:

Model testing gives bad accuracy.

Reproducibility:

Environment:

Additional Information:

I took the pictures in RGB format and uploaded them manually to both the Training and Testing sets (I didn’t use auto-splitting).

When I checked the samples that were predicted incorrectly, I saw that almost all of them have colors different from the shapes’ colors in the Training set. Is this because of a grayscale issue, or something else?

Should I take the pictures in grayscale instead of RGB? Color isn’t important for my project. The model only needs to predict one shape per picture (it can also be a larger shape built from several different shapes, e.g. a house made from a square and a triangle). Can you suggest the best solution, please?

At first, I suspected overfitting, so I reduced the learning rate and the number of epochs. However, it didn’t help.

I also tried transfer learning, but it gave a bad score, so I want to keep using bounding boxes.

I can try transfer learning again if needed!

*Sorry for my poor English.

Please help me! Thank you a lot!

Hello @ShapeSpeak, thanks for your detailed message.

First, I have some questions for you:

- Did you train using the auto-split (80-20), so that samples are randomly assigned to training or testing?

- Did you try switching to grayscale in the Impulse design, to reduce noise from color differences?

- Did you try collecting more images under other conditions with the camera you are using (different lighting, backgrounds, etc.)?
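
To illustrate the first question: a random 80-20 split mixes every color and capture session into both sets, whereas a manual upload can easily leave some colors only in the test set. Below is a minimal sketch of the idea, not Edge Impulse's actual implementation (Studio does this for you). The function name `auto_split`, the seed, and the file names are all hypothetical.

```python
import random

def auto_split(samples, test_fraction=0.2, seed=42):
    """Shuffle the samples and return (training, testing) lists."""
    shuffled = list(samples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical file names: two colors, 50 pictures each.
samples = [f"red_{i}.jpg" for i in range(50)] + [f"blue_{i}.jpg" for i in range(50)]
train, test = auto_split(samples)
print(len(train), len(test))  # 80 20
```

Because the split is random, both colors are likely to appear in both sets, which is what makes the test score comparable to the training score.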

Let us know if trying these things and re-training improves the situation!

Thanks for replying!

I will try these!

I have a question: which should I choose, capturing images in RGB or in grayscale? The Arduino example code looks strange to me, because I can’t see any lines that convert RGB into grayscale.

That’s a very good question!

AFAIK, when you select Grayscale in your Impulse’s image block, Edge Impulse automatically converts any RGB image into grayscale during preprocessing.

That means you might not need to write Arduino code yourself to convert RGB into grayscale.
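
For intuition only, here is a minimal sketch of what an RGB-to-grayscale conversion does to a pixel. It assumes the common ITU-R BT.601 luma weights; the exact conversion Edge Impulse applies is not stated in this thread, so treat the weights as an assumption. The function name and the pixel values are hypothetical.

```python
def rgb_to_gray(r, g, b):
    """Return the 8-bit luma of one RGB pixel (assumed BT.601 weights)."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)

# Hypothetical pixel values for a red shape and a blue shape.
red_shape = (200, 30, 30)
blue_shape = (30, 30, 200)
print(rgb_to_gray(*red_shape), rgb_to_gray(*blue_shape))  # 81 49
```

Note that the two colors still end up at different gray levels, so grayscale reduces color differences but does not remove them entirely.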

Let us know if that works for you!

Thanks for your info! I have another small question.

There are around 7 colors and 2 different sizes (big and small) for each object, but I trained with only 2 colors. Is that alright with grayscale?

Or should I train on all of these colors?

Hey! The auto-split fixed this problem. Thank you so much! But should I train on all the colors?

@ShapeSpeak, glad to hear that the auto-split fixed your problem.

> But should I train on all the colors?

It depends! Maybe you can train a second model on all the colors and see which of the two is more accurate on the test set.
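
One way to make that comparison concrete is to break test accuracy down by color for each model. The sketch below assumes you have exported, for every test sample, its color and whether the prediction was correct; the function name and the result lists are made-up placeholders, not real results from this project.

```python
from collections import defaultdict

def accuracy_by_color(results):
    """results: iterable of (color, correct) pairs. Returns {color: accuracy}."""
    hits, totals = defaultdict(int), defaultdict(int)
    for color, correct in results:
        totals[color] += 1
        hits[color] += int(correct)
    return {color: hits[color] / totals[color] for color in totals}

# Made-up placeholder data, only to show the shape of the input.
model_a = [("red", True), ("red", True), ("green", False), ("green", True)]
model_b = [("red", True), ("red", False), ("green", True), ("green", True)]
for name, results in (("model A", model_a), ("model B", model_b)):
    print(name, accuracy_by_color(results))
```

If one model scores clearly lower on colors it never saw in training, that suggests adding those colors to the training set.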

Happy to learn more!
