Hello! I have been working on this project for over two months and have gotten absolutely nowhere. I have tried training my own models again and again, but nothing seems to work: everything is very inaccurate and unusable. I have heard that it is possible to run a pre-trained tflite model through Edge Impulse, but I have no clue how I would go about doing this. I would like to run this TensorFlow Lite model (TensorFlow Hub) because I know it is accurate, unlike everything else I have tried.

This is for a commercial product that I am trying to launch, but I can’t even get a fully functional prototype. I had a very rough prototype that ran on a Raspberry Pi, but it was big, bulky and expensive. Now I am trying to run object detection on an ESP32-CAM. I can’t disclose what exactly the product is, but the main component is running object detection for a specific purpose.

I’ve tried everything that I’ve found online, but am getting nothing. The most success I have had is on Edge Impulse, where I was able to deploy an ML model, but the accuracy was so bad that it made virtually no difference what it was looking at. I am getting very frustrated by the lack of results and would really appreciate some help. Also, I have virtually no clue what I’m doing, so you might have to explain in more depth than you normally would. Thanks!

Regarding the accuracy of the model:

- The sweet spot for FOMO is detecting objects that are about the size of one output cell, where the output grid is 1/8th of the input size.

- Make sure to have a balanced dataset in both the training and testing datasets. For example, if you are looking to find chips, resistors and capacitors on a PCB, then the training set needs about the same number of photos per class, e.g., 100 photos of chips, 100 photos of resistors, 100 photos of capacitors and 100 photos of things that are not chips, resistors or capacitors, such as inductors, relays, solder blobs or other items that might be on a PCB.
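To make the 1/8th figure concrete, here is a minimal sketch of the grid arithmetic. It assumes FOMO's default reduction factor of 8 and a square input; `fomo_grid_size` and `fomo_cell_index` are hypothetical helper names for illustration, not part of the Edge Impulse SDK.

```cpp
// Assumption: the FOMO output grid is 1/8th of the input resolution (the default).
constexpr int kFomoReduction = 8;

// Number of output cells along one side of a square input image.
constexpr int fomo_grid_size(int input_px) {
    return input_px / kFomoReduction;
}

// Index (along one axis) of the output cell that contains input pixel `px`.
constexpr int fomo_cell_index(int px) {
    return px / kFomoReduction;
}
```

Under that assumption a 96x96 input gives a 12x12 grid and a 64x64 input gives an 8x8 grid, so each cell covers 8x8 input pixels. Objects much larger or smaller than a cell fall outside the sweet spot described above.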

Regarding a Pre-Trained Model:

- In EI Studio, look at Impulse Design > Object Detection. You must select FOMO MobileNetv2.0 0.35 or FOMO MobileNetv2.0 0.10. Switch to Keras mode. In the code you can see how EI imports weights from an hdf5 file. I do not know if the EI API exposes documentation on the format of this hdf5 file ;(

I’ve tried all of this for the FOMO detection, but nothing’s working. As far as the pretrained model goes, I’m a little confused about what you’re trying to say. I’m sorry, I really have no clue how any of this stuff works.

Please create an example project without your proprietary data that reproduces the issues you are seeing and post it publicly so we can see what you are seeing.

Well, here you can see it (PetCV_V8 - Dashboard - Edge Impulse), but this really isn’t what I want. I want to run a pre-trained tflite model, not a custom-trained one. I was only trying to create my own because I couldn’t get the pre-trained one to run.

Hello @shawnm1,

Feel free to reach out directly to me on DM if needed.

Just out of curiosity, when I look at the public project you created, do you really need object detection instead of a simple binary image classifier?

I usually ask myself the following questions before deciding which approach to take:

- Do I need to count multiple objects in an image?

  - Yes → Object Detection

  - No → Image classification

- How many different objects do I need to detect/classify (number of distinct classes)?

  - Both can work

- Do I need the location of the object in the image?

  - Yes → Object Detection

  - No → Image classification

- Do I need the size of the objects in the image?

  - Yes → MobileNet SSD or YOLO

  - No → MobileNet SSD, YOLO or FOMO
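The questions above can be read as a small decision function. This is only one possible interpretation, sketched for illustration: the `Needs` struct, the `Approach` enum and `choose_approach` are hypothetical names, and the number of classes is left out because the answer there is "both can work".

```cpp
// What the application needs from the model (hypothetical structure).
struct Needs {
    bool count_objects;  // must count multiple objects in one image
    bool location;       // must know where the object is
    bool size;           // must know how large the object is
};

enum class Approach {
    ImageClassification,
    DetectionSsdYoloOrFomo,  // object detection; FOMO is an option
    DetectionSsdOrYolo       // object detection that also reports size
};

constexpr Approach choose_approach(Needs n) {
    if (n.size) {
        return Approach::DetectionSsdOrYolo;
    }
    if (n.count_objects || n.location) {
        return Approach::DetectionSsdYoloOrFomo;
    }
    return Approach::ImageClassification;
}
```

For a "is there a pet in view or not" use case, all three needs are false, so this reading lands on image classification.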

The ESP32-CAM will only support the following models:

- Image classification using MobileNetv2 transfer learning with 64x64px input images

- Image classification using MobileNetv1 transfer learning up to 96x96px input images (probably a bit more with pre-trained models with a small alpha but the accuracy will probably be impacted)

- FOMO with 64x64px input images

I hope that helps.

Regards,

Louis

Thank you for the reply! I’m really unsure whether I want to do object detection or classification. I have gone back and forth and have tried several of each with no results. For the AI part of my project, I simply want to detect whether a pet is within the FOV of the camera. I don’t need to count how many, just accurately know whether or not there is a pet. As far as the pretrained model goes, could you elaborate on how I would go about doing this? I’ve kind of given up trying to train my own model.

Since this project did not need FOMO, I decided to use a tested Image Classification Impulse. I used @jlutzwpi’s project, Solar Powered TinyML Bird Feeder, as a guide.

See here

and here

I started by trying to change the project to a Transfer Learning (X-fer Learning) Impulse and intended to give all of the unknown images a label of "NotPet".

Since a bounding box (BB) file existed, I assume EI Studio would not let me rename a group of samples’ labels, even though those samples were unlabeled. I moved all the test data to the training data, exported the data, and then deleted all data in EI Studio. I deleted the bounding box file and re-uploaded all the JPGs. (This is not AWS cost-efficient and is disrespectful of my time. Hopefully there is a "delete bounding boxes" button that I am simply unaware of.) I still could not group-edit labels.

I started a new project, imported all the data without the BB file, let EI Studio infer the label from the filename, and let the EI Studio importer split the data into training and test datasets. This did not work because the filename inferrer picked up the "_###" suffix, e.g. "_001", so a Pug dog ended up with multiple labels instead of the single label Pug. (This was disappointing, since the Impulse could have declared the species of the pet. Trying to do a group rename resulted in "Failed to load page", probably due to having hundreds of labels.)
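One way around the "_###" problem would be to derive the intended label yourself before uploading, for example to rename or sort the files. The sketch below shows only the string handling; `label_from_filename` is a hypothetical helper, not an Edge Impulse function, and it assumes names of the form `<label>_<digits>.<ext>`.

```cpp
#include <cstddef>
#include <string_view>

// Returns the label part of a file name, e.g. "Pug_001.jpg" -> "Pug".
// The result is a view into the caller's string, so the original string
// must outlive it.
constexpr std::string_view label_from_filename(std::string_view name) {
    // Drop the extension, if there is one.
    const std::size_t dot = name.rfind('.');
    if (dot != std::string_view::npos) {
        name = name.substr(0, dot);
    }
    // Drop a trailing "_<digits>" counter, if there is one.
    const std::size_t underscore = name.rfind('_');
    if (underscore != std::string_view::npos && underscore + 1 < name.size()) {
        bool all_digits = true;
        for (std::size_t i = underscore + 1; i < name.size(); ++i) {
            if (name[i] < '0' || name[i] > '9') {
                all_digits = false;
                break;
            }
        }
        if (all_digits) {
            name = name.substr(0, underscore);
        }
    }
    return name;
}
```

Names without a numeric suffix, such as `unknown.jpg`, pass through with only the extension removed.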

Then I tried importing all JPGs again, giving them all a label of "Pet", assuming that once a sample has a label, EI Studio will have no issues renaming it (versus renaming a non-existent or null (not an empty) label to an actual label of non-zero length). I was then able to filter by "unknown" and rename those labels to "NotPet". Then I performed a train / test split via the Dashboard.

The Impulse Design is:

- Image Data 96x96, fit shortest

- Image: check in checkbox

- X-fer Learning: check in checkbox

- Output Features: 2 (Pet,NotPet)

- Image Color Depth: RGB

- MobileNetV1 96x96 0.35

- Cycles: 20

- Learning Rate: 0.0005

- Validation: 20%

- Auto-Balance: Yes

- Data Aug: Yes

The results are fantastic:

- 98.7% NotPet=NotPet, F1=0.99

- 98.5% Pet=Pet, F1=0.99

EON Tuner did not run all models (maybe I reached the 20 minute time limit?) but I selected the best one (rgb-mobilenetv1-827) and re-trained the model.

I then built the firmware for the Portenta, downloaded it, and flashed the Portenta with the flash_windows.bat file.

Live Classification shows good results on random images from Google Image search when I pointed the Portenta camera at the computer monitor.

@shawnm1 I added you as a collaborator on my project.

The project is also public here.

Conclusion

I think you got stuck because EI Studio gave you no way to delete the bounding box file. I only realized this when I tried to change a FOMO project to an Image Classification project and failed miserably. The only solution was to *punt* and start a new project from scratch.

The Portenta camera is only grayscale but still the inference results were great even though the model was trained on RGB.

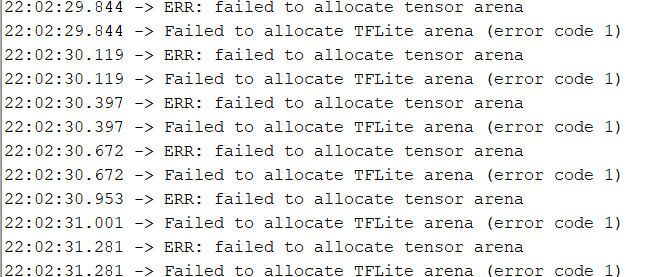

Wow. I can’t thank you enough for all the effort you’ve put into this. Thank you so much. You mentioned you got it working on a Portenta. Well, when I try to run it on an ESP32-CAM I get this error in the serial monitor.

I believe this might have something to do with size. Do you know how I could shrink it without losing accuracy? Again, thank you so much.

I used 48x48 RGB for my model and that worked on the ESP32-CAM
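As a rough illustration of why the smaller input helps, the sketch below compares the raw input size at the two resolutions mentioned in this thread. It assumes one packed 0xRRGGBB value per pixel held in a float array, as in the `static const float features[]` format shown later in the thread; the helper names are hypothetical. It counts only the raw input. The model weights and working memory come on top, and nothing here confirms that memory was the actual cause of the error.

```cpp
#include <cstddef>

// One feature per pixel: each value packs the red, green and blue channels.
constexpr std::size_t image_feature_count(std::size_t width, std::size_t height) {
    return width * height;
}

// Bytes needed to hold those features as a float array.
constexpr std::size_t feature_buffer_bytes(std::size_t width, std::size_t height) {
    return image_feature_count(width, height) * sizeof(float);
}
```

A 96x96 image has 9216 features and a 48x48 image has 2304, so halving each side cuts the raw input to a quarter. With a 4-byte float that is 36864 bytes versus 9216 bytes.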

I was able to shrink it down while keeping pretty great accuracy, but when I deploy it on the ESP32 the accuracy is very bad, even though the accuracy on Edge Impulse is very good. The pet score averages about 50% and barely rises above that when a pet is shown, even though it should be much lower to begin with. Did you ever run into this problem? If so, do you know how I could fix it?

Hello @shawnm1,

Can you try with the standalone inferencing in here: https://github.com/edgeimpulse/example-standalone-inferencing-espressif-esp32

And put your static features here before compiling and uploading your firmware to the board:

Alternatively, you can use the static_buffer example that the Arduino IDE provides when you import your generated Arduino library.

Best,

Louis

Please excuse my lack of knowledge, but what do you mean by static_buffer and static features?

@shawnm1 I can answer your questions about static_buffer and static features, but I could not get either experiment @louis suggested to work. Maybe you’ll have better luck than me.

RE: What is static_buffer?

static_buffer refers to static_buffer.ino, an example program created by EI Studio that comes with the ZIP file created when you deploy your Impulse as an Arduino Library.

I have an ESP-EYE, so I tried using the Arduino IDE, compiling for board=ESP32 WRover Module.

I included the ZIP file as a library using the Arduino IDE. I could not get this solution to work because the compile failed with this error:

C:\...Arduino\libraries\PetDetect_inferencing\src\edge-impulse-sdk\porting\espressif/ei_classifier_porting.cpp:56: multiple definition of ei_putchar(char);

collect2.exe: error: ld returned 1 exit status

RE: standalone inferencing

static features refers to this line in an Edge Impulse C file:

static const float features[]

Using the project at Edge Impulse Example: standalone inferencing (Espressif ESP32):

- I cloned the project on the GitHub page

- Renamed the ‘CMakeLists.txt’ to CMakeLists_ORG.txt.

- Downloaded petdetect-v3.zip from EI Studio

- Unzipped it to the project root folder.

  - Now in the root folder is a CMakeLists.txt

  - I copied the contents of CMakeLists_ORG.txt and

  - Pasted them into the existing root folder CMakeLists.txt

I followed the Readme.md on the GitHub page.

Before compiling, I changed the features[] in main.cpp.

In EI Studio go to:

- Live Classification,

  - on the right in Classify existing test sample

    - select a Sample of a Pet

  - Click the Load Sample button

  - Click the Raw Data icon

and paste into main.cpp:

static const float features[] = {

// copy raw features here (for example from the 'Live classification' page)

0x539cce, 0x559dcf, 0x559dcf, 0x549ecf, 0x559dcf, 0x549dcf, 0x559ecf, 0x549cce, 0x549cce, 0x549cce, 0x549cce, 0x549cce, 0x559dcf, 0x559dcf, 0x549dce, 0x539dcf, 0x549bce, 0x559cce, 0x559ccf, 0x549cce, 0x549cce,

// …many more numbers exist here...

0x5c4d17, 0x5a4e17, 0x594d16, 0x5a4c16, 0x554a12, 0x524512, 0x504312, 0x4c4110, 0x4c4011, 0x4e4212, 0x50461a };
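Each value in the array above is one pixel with its red, green and blue channels packed as 0xRRGGBB. The sketch below shows how such a value can be taken apart and put back together; the `Rgb` struct and the `unpack_rgb` / `pack_rgb` helpers are hypothetical names for illustration, not SDK functions.

```cpp
#include <cstdint>

struct Rgb {
    std::uint8_t r;
    std::uint8_t g;
    std::uint8_t b;
};

// Split a packed 0xRRGGBB feature value into its three channels.
constexpr Rgb unpack_rgb(std::uint32_t packed) {
    return Rgb{static_cast<std::uint8_t>((packed >> 16) & 0xFF),
               static_cast<std::uint8_t>((packed >> 8) & 0xFF),
               static_cast<std::uint8_t>(packed & 0xFF)};
}

// Combine three channels back into one packed 0xRRGGBB value.
constexpr std::uint32_t pack_rgb(Rgb pixel) {
    return (static_cast<std::uint32_t>(pixel.r) << 16) |
           (static_cast<std::uint32_t>(pixel.g) << 8) |
           static_cast<std::uint32_t>(pixel.b);
}
```

For the first value in the sample, 0x539cce, this gives red 0x53, green 0x9c and blue 0xce. Being able to read the values this way helps when checking that a pasted sample really is the image you expect.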

Instead of executing “get_idf” to initialize the ESP IDF as described on the GitHub page, on my system I executed:

C:\WINDOWS\system32\cmd.exe /k ""C:\Espressif\idf_cmd_init.bat" esp-idf-8de2bd0d9cffd2eca3d3f8442939a034"

that opened a command prompt window.

Then I executed idf.py build, but it failed with this error:

CMake Error at edge-impulse-sdk/cmake/zephyr/CMakeLists.txt:14 (target_include_directories):

target_include_directories called with non-compilable target type

@shawnm1 perhaps the bad inferencing is due to a mis-aligned camera.

Please try:

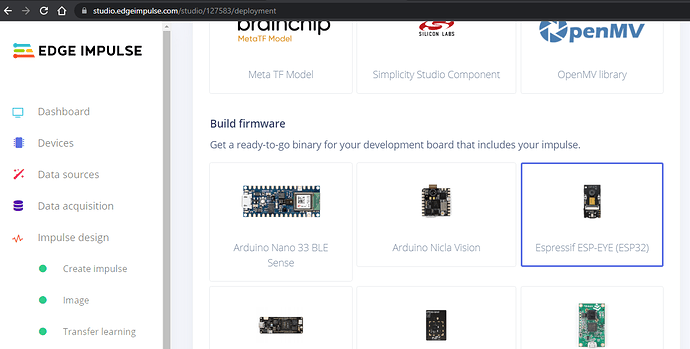

- Deploy as Espressif ESP-EYE (ESP32)

- Flash your ESP32

- Execute: edge-impulse-daemon

- Select the PetDetect project

- Open EI Studio

  - Go to Devices to make sure EI Studio is communicating with your ESP32

  - Go to Data Acq

    - In the Record new data frame on the right side you should see a live camera view from your ESP32

    - Adjust the position of your camera for optimal photo capture

- Kill the running edge-impulse-daemon

- Power cycle your ESP32

- Execute: edge-impulse-run-impulse

- Present images to the ESP32 camera and see how it inferences.

Whenever I try to connect my ESP32 to Edge Impulse it comes back with errors and just doesn’t work, no matter what I do. What do you mean by adjusting the position of the camera?

What I meant by “adjusting the position of the camera” is nothing technical but simply aligning the camera lens with the sample photo as well as having a good resolution of the captured image.

If you have never gotten the ESP32 connected to EI Studio, then how are you seeing what the camera is seeing? Even if the EI Feature Explorer shows very good results, many things can cause low inference confidence, such as:

- the camera may be seeing a very high contrast image (This happened to me: the pet face was a white blob surrounded by black. Even I, a perfect human, could not make out what the image was, so there is no way a Model could predict what the image is.)

- the camera is seeing only the top half of the pet

- due to lighting, the camera may see the photo as washed out

- perhaps the camera is upside down and the Model is having trouble with that type of image. Therefore, you will need to add more pet samples that are rotated at various angles.
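If there is no way to view the camera image directly, a few numbers computed from the frame can at least hint at the exposure problems listed above. This is a minimal sketch under stated assumptions: pixels are packed 0xRRGGBB as in the features array, the cut-offs of 16 and 239 for "nearly black" and "nearly white" are arbitrary illustrative thresholds, and `luma` and `measure_exposure` are hypothetical helpers rather than SDK calls.

```cpp
#include <cstddef>
#include <cstdint>

struct Exposure {
    float mean_luma;         // average brightness, 0..255
    float clipped_fraction;  // share of pixels that are nearly black or nearly white
};

// Approximate brightness (0..255) of one packed 0xRRGGBB pixel,
// using integer Rec. 601 weights.
constexpr int luma(std::uint32_t packed) {
    const int r = static_cast<int>((packed >> 16) & 0xFF);
    const int g = static_cast<int>((packed >> 8) & 0xFF);
    const int b = static_cast<int>(packed & 0xFF);
    return (299 * r + 587 * g + 114 * b) / 1000;
}

constexpr Exposure measure_exposure(const std::uint32_t* pixels, std::size_t count) {
    if (count == 0) {
        return Exposure{0.0f, 0.0f};
    }
    long sum = 0;
    std::size_t clipped = 0;
    for (std::size_t i = 0; i < count; ++i) {
        const int y = luma(pixels[i]);
        sum += y;
        if (y < 16 || y > 239) {  // illustrative thresholds, not tuned values
            ++clipped;
        }
    }
    return Exposure{static_cast<float>(sum) / static_cast<float>(count),
                    static_cast<float>(clipped) / static_cast<float>(count)};
}
```

A high clipped fraction would suggest the "white blob on black" case, and a very high mean would suggest a washed-out frame. Printing these two numbers over the serial monitor is a cheap check, but it cannot detect framing problems such as a cropped or upside-down pet.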

I once had a Model deployed and working well on a microcontroller. Then the inference results suddenly took a turn for the worse, and yet nothing seemed to have changed: the camera had not moved, and the objects I was photographing had not changed. What did happen was that the afternoon light coming through the windows changed when the sun went down, so I pulled the curtains shut and turned on the room lights. My Model could not handle such an extreme change in lighting because it had been trained with afternoon sunlight. The low resolution cameras on microcontrollers can be very sensitive to ambient lighting conditions. If the resolution is good and you, a human, can make out what the image is when viewed through the ESP32 camera, then you may need to add more pet samples that vary by lighting conditions. Someone who knows Adobe Photoshop or the like should be able to enhance your photo samples with various lighting schemes.
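The simplest lighting variation to generate is a brightness change, which scales each color channel by a gain and clamps the result. The sketch below is a generic illustration and not what EI Studio's data augmentation does internally; `scale_channel` and `adjust_brightness` are hypothetical helpers working on packed 0xRRGGBB pixels.

```cpp
#include <cstdint>

// Scale one 8-bit channel by `gain`, clamping the result to 0..255.
constexpr std::uint8_t scale_channel(std::uint8_t value, float gain) {
    const float scaled = static_cast<float>(value) * gain;
    if (scaled <= 0.0f) {
        return 0;
    }
    if (scaled >= 255.0f) {
        return 255;
    }
    return static_cast<std::uint8_t>(scaled + 0.5f);  // round to nearest
}

// Brighten (gain > 1) or darken (gain < 1) one packed 0xRRGGBB pixel.
constexpr std::uint32_t adjust_brightness(std::uint32_t packed, float gain) {
    const std::uint32_t r = scale_channel(static_cast<std::uint8_t>((packed >> 16) & 0xFF), gain);
    const std::uint32_t g = scale_channel(static_cast<std::uint8_t>((packed >> 8) & 0xFF), gain);
    const std::uint32_t b = scale_channel(static_cast<std::uint8_t>(packed & 0xFF), gain);
    return (r << 16) | (g << 8) | b;
}
```

Applying a few gains (for example 0.5 and 2.0) to every pixel of a training image gives darker and brighter copies of it. The Data Aug option used in the Impulse settings earlier in this thread may already cover some of this, so extra samples captured under real lighting conditions are probably still the more reliable fix.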

Alright, this is great info. Thanks! Am I the only one struggling to get my ESP32 connected directly to Edge Impulse? All I’ve been able to do is run Edge Impulse code through the Arduino IDE, so I have no clue what my ESP32 is actually seeing.