Hi!

I want to know if there is a way for Edge Impulse to detect motion and voice at the same time.

I have voice data and motion data in “Data acquisition”:

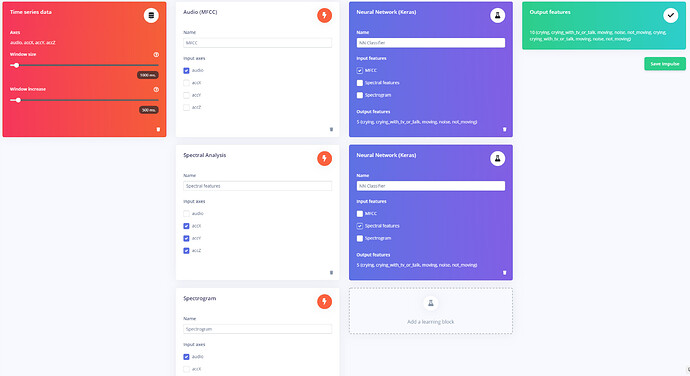

I set the impulse like this

I think the NN classifier should output separate audio and motion features, but it outputs 5 classes that include both voice and motion.

@zhongrui Two neural network blocks will indeed give you the output features twice. What do you want to do here: 1) use both audio+accelerometer data feeding into one neural network? Or 2) have two networks, one based on audio, one based on accelerometer? 2) seems to be implied based on the screenshot.

In the case of 1) you’ll need to combine audio+accelerometer data into a single file (see the Data acquisition format) that has four axes (accX,accY,accZ,audio) and upsample the accelerometer data to the same resolution as the audio. In the case of 2) I’d put them in two different projects.
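To make option 1) more concrete, here is a rough sketch of the upsample-and-merge step in plain Python. It is not Edge Impulse code: the function names `upsample_linear` and `merge_audio_accel` are made up for illustration, linear interpolation is just one simple way to upsample, and the sample rates are whatever your own recordings use. It only produces rows in the (accX,accY,accZ,audio) order; packaging those rows into the Data acquisition format is left out.

```python
def upsample_linear(samples, src_hz, dst_hz, n_out):
    """Linearly interpolate `samples` (recorded at src_hz) to n_out values at dst_hz."""
    out = []
    last = len(samples) - 1
    for i in range(n_out):
        pos = i * src_hz / dst_hz            # position measured in source samples
        lo = min(int(pos), last)             # sample at or before `pos`
        hi = min(lo + 1, last)               # next sample, clamped at the end
        frac = pos - lo if lo < last else 0.0
        out.append(samples[lo] + (samples[hi] - samples[lo]) * frac)
    return out


def merge_audio_accel(audio, acc_x, acc_y, acc_z, audio_hz, accel_hz):
    """Return one row [accX, accY, accZ, audio] per audio sample."""
    n = len(audio)
    # Bring each accelerometer axis up to the audio sample rate.
    up = [upsample_linear(axis, accel_hz, audio_hz, n)
          for axis in (acc_x, acc_y, acc_z)]
    return [[up[0][i], up[1][i], up[2][i], audio[i]] for i in range(n)]


if __name__ == "__main__":
    # Toy rates (4 Hz audio, 2 Hz accelerometer) so the output is easy to read.
    rows = merge_audio_accel([10, 11, 12, 13],
                             [0.0, 1.0], [0.0, 2.0], [4.0, 4.0],
                             audio_hz=4, accel_hz=2)
    for row in rows:
        print(row)
```

Past the last accelerometer sample the sketch simply holds the final value, which is a design shortcut; a real pipeline might trim both signals to the same duration instead.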

Note that we don’t have a super easy way of deploying two projects onto one device at the moment.


Thank you for your reply! I am currently using an Arduino Nano 33 BLE. I want it to recognize sound and acceleration at the same time, but collecting both kinds of data at the same time causes sampling frequency problems, and the NN classifier then recognizes the labels incorrectly. Does that mean I have to create two projects to get this working, and then merge them into one in the Arduino IDE? I’m a novice, so sorry if I’ve misunderstood. Please advise!

Hi @zhongrui,

> but collecting these two data at the same time will cause frequency problems

To avoid that, you upsample the accelerometer data to the same resolution as the audio data (e.g. 16 kHz). The extra samples are not really a problem, because feature extraction runs over the combined data again at a later point.

However, as you might guess, this requires a bunch of manual work on the firmware side at the moment, so if you can split the project into a separate audio classifier and a separate accelerometer classifier, that would make life easier.
