Hello everyone,

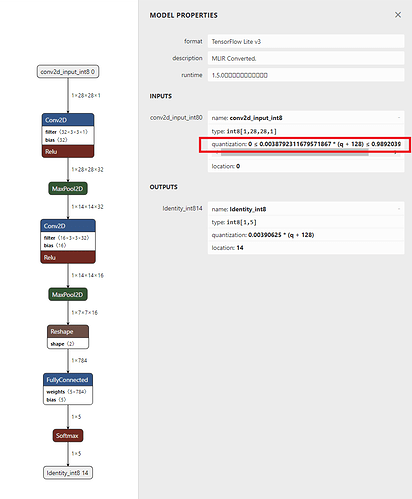

I have developed a simple quantized image classification model using transfer learning. I’d like to use the .lite file directly rather than the SDK. In Netron I can see that the model expects int8 input. Is there a simple way to change the model so that it takes uint8 input instead?

Hi @Tex76,

To my knowledge, there is no easy way to modify a .lite file. You might be able to modify the model file by manipulating the FlatBuffer, as shown in this thread. In theory, you might be able to change the quantization layer to remove the “+128” step and accept uint8 values directly. This is not something I have tried, though. If you do get it to work, please let us know how!
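For reference, the “+128” relationship follows from TFLite's affine quantization scheme, in which a real value $r$ is stored as an integer $q$ with a scale $S$ and a zero point $Z$, so that $r = S(q - Z)$. Shifting both the stored values and the zero point by 128 leaves every real value unchanged, which is why a uint8 tensor and an int8 tensor with the same scale differ only by that offset:

$$
\begin{aligned}
r &= S\,(q_u - Z_u), \qquad q_u \in [0, 255] \\
q_i &= q_u - 128, \qquad Z_i = Z_u - 128, \qquad q_i \in [-128, 127] \\
S\,(q_i - Z_i) &= S\,\big((q_u - 128) - (Z_u - 128)\big) = S\,(q_u - Z_u) = r
\end{aligned}
$$

For example, with $Z_u = 0$, a pixel value $q_u = 200$ becomes $q_i = 72$ with $Z_i = -128$, and both encode $r = 200\,S$.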

@Tex76 TensorFlow has switched to int8 quantization by default (some older versions of TFLite used uint8), and we’re following suit; uint8 support is also actively being removed from the TFLite Micro kernels.

Thank you very much for your answers!

@janjongboom I’d like to use the TFLite Support Library, which requires the uint8 type for its TensorImage input:

Process input and output data with the TensorFlow Lite Support Library

@shawn_edgeimpulse Sorry, my English is not great: I don’t need to modify the .lite file itself; changing the model in Edge Impulse (and therefore the exported .lite file) would be perfect!

@Tex76 Can’t you just change this:

```java
// Create a TensorImage object. This creates the tensor of the corresponding
// tensor type (uint8 in this case) that the TensorFlow Lite interpreter needs.
TensorImage tensorImage = new TensorImage(DataType.UINT8);
```

to:

```java
// Create a TensorImage object. This creates the tensor of the corresponding
// tensor type (int8 in this case) that the TensorFlow Lite interpreter needs.
TensorImage tensorImage = new TensorImage(DataType.INT8);
```

?

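Unfortunately not: TensorImage only supports DataType.UINT8 and DataType.FLOAT32, so passing DataType.INT8 makes the constructor throw an IllegalArgumentException: https://tensorflow.google.cn/lite/api_docs/java/org/tensorflow/lite/support/image/TensorImage?hl=en#throws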
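One possible workaround, sketched here under assumptions rather than as a confirmed solution: keep using TensorImage with DataType.UINT8 to load the image, then copy its bytes (via `TensorImage.getBuffer()`) into a new buffer with 128 subtracted from every value, and pass that buffer to the interpreter instead. This only gives correct results if the model's int8 input uses the same scale as the uint8 data and a zero point 128 lower (for instance scale 1/255 and zero point -128 for pixels normalized to [0, 1]); that is an assumption you should verify against the input tensor's quantization parameters in Netron. The class and method names below are hypothetical.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper: shifts uint8 tensor data into the int8 range. */
final class Uint8ToInt8 {

    private Uint8ToInt8() {}

    /**
     * Returns a new direct buffer (native byte order) in which every byte b of
     * the source, read as unsigned (0..255), is replaced by b - 128 (-128..127).
     * All bytes from index 0 up to the source's limit are converted; the
     * source buffer's own position is left unchanged.
     */
    public static ByteBuffer convert(ByteBuffer uint8Data) {
        ByteBuffer src = uint8Data.duplicate(); // independent position/limit
        src.rewind();
        ByteBuffer dst = ByteBuffer.allocateDirect(src.remaining())
                .order(ByteOrder.nativeOrder());
        while (src.hasRemaining()) {
            int unsigned = src.get() & 0xFF;  // reinterpret as 0..255
            dst.put((byte) (unsigned - 128)); // shift into -128..127
        }
        dst.rewind(); // ready to be read by the interpreter
        return dst;
    }
}
```

In the Android code this would mean passing `Uint8ToInt8.convert(tensorImage.getBuffer())` to `interpreter.run(...)` in place of the TensorImage buffer. The int8 output then still has to be dequantized with the output tensor's own scale and zero point, $r = S(q - Z)$.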