I am trying to build an audio classification model that identifies one specific “door chime” sound effect while keeping false positives as close to zero as possible. It is a very typical door chime, about 5 seconds long, that sounds like a small bell ringing twice in a row (e.g., ding at pitch 1, then ding at pitch 2). Currently my model labels all kinds of background sounds as the door chime, even though they sound nothing like it. I have tried many variations of the training settings and of the training data samples, but I’m not making any real progress. How can I efficiently eliminate false positives (detecting the door chime in random background noise)? A lower learning rate? More epochs? MFCC instead of MFE?

How did you collect your data?

Laptop microphone from the data collection menu

Okay, I see. Did you try mixing the chime with different background noise, or did you record only the door chime sound on its own?
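
To make the mixing idea concrete, here is a minimal sketch of overlaying a chime clip on background noise at a chosen signal-to-noise ratio. It is not an Edge Impulse feature; `mix_at_snr`, `mean_power`, and the synthetic signals are made up for illustration, and real recordings would need to be loaded as lists of float samples first.

```python
import math
import random


def mean_power(samples):
    """Average squared amplitude of a list of samples."""
    return sum(s * s for s in samples) / len(samples)


def mix_at_snr(signal, noise, snr_db):
    """Add noise to signal, scaled so the mix has the requested SNR in dB."""
    if not signal or not noise:
        raise ValueError("signal and noise must be non-empty")
    # Loop the noise so it covers the whole signal, then trim to length.
    noise = [noise[i % len(noise)] for i in range(len(signal))]
    signal_power = mean_power(signal)
    noise_power = mean_power(noise)
    if signal_power == 0 or noise_power == 0:
        raise ValueError("signal and noise must not be silent")
    # Gain that puts the noise snr_db decibels below the signal.
    gain = math.sqrt(signal_power / (noise_power * 10 ** (snr_db / 10)))
    return [s + gain * n for s, n in zip(signal, noise)]


# Synthetic stand-ins (assumptions): a 1-second 880 Hz tone for the chime
# and half a second of Gaussian noise for the background.
sample_rate = 16000
chime = [0.5 * math.sin(2 * math.pi * 880 * i / sample_rate)
         for i in range(sample_rate)]
rng = random.Random(0)
background = [rng.gauss(0.0, 0.1) for _ in range(sample_rate // 2)]

mixed = mix_at_snr(chime, background, snr_db=10)
```

Generating several mixes per chime at different SNR values, against different background recordings, is one way to get chime samples that are not all recorded in silence.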

Two background noise samples with no chimes, and one sample of chimes only.

The issue is mainly in the data collection step: if the door chime looks similar to the other sounds after spectral analysis, the training step will fail. Could you share the output of the processing block you are using?

It would also help if you could share the results of the training and validation steps.

Does the model fail on the testing data too?

Thanks,

Omar

Will do as soon as I get a chance.

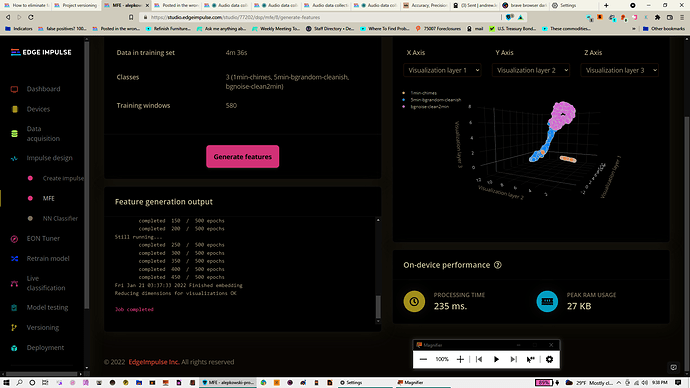

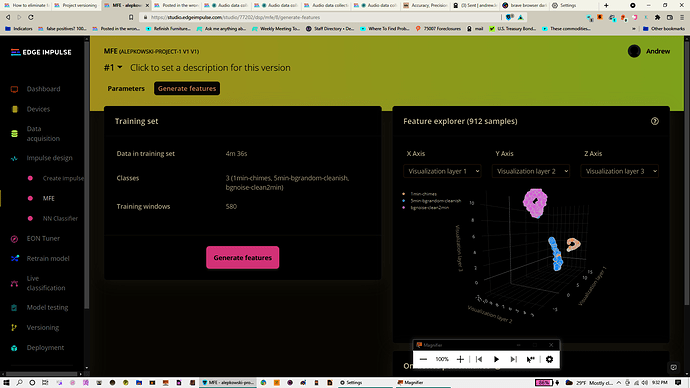

MFE output: I'm not sure why the 3D visualization changed drastically the second time I “generated features”. I uploaded both the original 3D visualization and the one from the second feature generation for comparison.

MFE:

Feature generation output

Creating job… OK (ID: 2021261)

Scheduling job in cluster…

Job started

Creating windows from 3 files…

[1/3] Creating windows from files…

[2/3] Creating windows from files…

[3/3] Creating windows from files…

[ 1/912] Resampling windows…

[912/912] Resampling windows…

Resampled 912 windows

Created 912 windows: 1min-chimes: 97, 5min-bgrandom-cleanish: 417, bgnoise-clean2min: 398

Creating features

[ 1/912] Creating features…

[141/912] Creating features…

[293/912] Creating features…

[455/912] Creating features…

[606/912] Creating features…

[762/912] Creating features…

[899/912] Creating features…

[912/912] Creating features…

Created features

Scheduling job in cluster…

Job started

Attached to job 2021261…

Reducing dimensions for visualizations…

UMAP(n_components=3, verbose=True)

Construct fuzzy simplicial set

Fri Jan 21 03:37:23 2022 Finding Nearest Neighbors

Fri Jan 21 03:37:25 2022 Finished Nearest Neighbor Search

Still running…

Fri Jan 21 03:37:28 2022 Construct embedding

completed 0 / 500 epochs

completed 50 / 500 epochs

completed 100 / 500 epochs

completed 150 / 500 epochs

completed 200 / 500 epochs

Still running…

completed 250 / 500 epochs

completed 300 / 500 epochs

completed 350 / 500 epochs

completed 400 / 500 epochs

completed 450 / 500 epochs

Fri Jan 21 03:37:33 2022 Finished embedding

Reducing dimensions for visualizations OK

Job completed
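
The “Created 912 windows” line in the log above shows 97 chime windows against 815 background windows (417 + 398). As a rough sketch, assuming the three names in that line are the class labels, the snippet below parses the line and prints each class's share of the data along with a "balanced" class weight (total / (number of classes × class count)), which is one common heuristic. The helper names `parse_window_counts` and `balanced_weights` are invented here and are not part of any tool.

```python
import re

# Copied verbatim from the feature generation output above.
LOG_LINE = ("Created 912 windows: 1min-chimes: 97, "
            "5min-bgrandom-cleanish: 417, bgnoise-clean2min: 398")


def parse_window_counts(line):
    """Extract {name: count} from a 'Created N windows: a: x, b: y' line."""
    _, _, tail = line.partition("windows:")
    return {name: int(count)
            for name, count in re.findall(r"([\w-]+):\s*(\d+)", tail)}


def balanced_weights(counts):
    """Weight each class by total / (n_classes * class_count)."""
    total = sum(counts.values())
    return {name: total / (len(counts) * n) for name, n in counts.items()}


counts = parse_window_counts(LOG_LINE)
weights = balanced_weights(counts)
total = sum(counts.values())
for name, n in counts.items():
    print(f"{name}: {n} windows ({n / total:.1%}), weight {weights[name]:.2f}")
```

The numbers only describe how lopsided the dataset is. Whether reweighting helps with the false positives is a separate question, and since all 97 chime windows come from a single one-minute recording, collecting more varied chime and background samples is probably the bigger lever.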

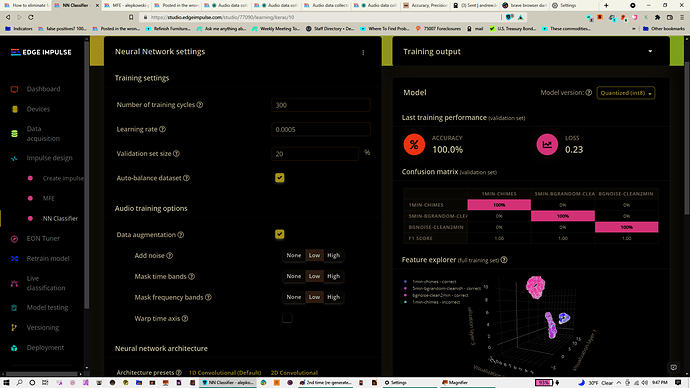

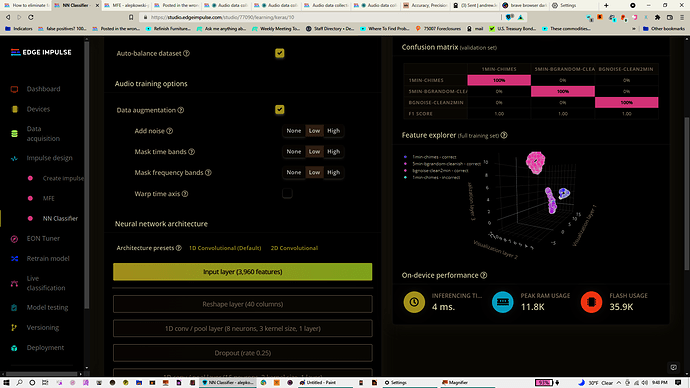
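
For the training output posted below, one quick check is to find the epoch where validation loss is lowest. In that log, `val_loss` is near 0.10 around epoch 60 and about 0.18 by epoch 297, while training loss stays around 0.10, which may point to mild overfitting in the later epochs. The sketch below parses Keras-style log lines to find the best epoch; `best_val_loss_epoch` is a made-up helper, and the four sample epochs are copied from the log.

```python
import re

EPOCH_RE = re.compile(r"Epoch (\d+)/\d+")
VAL_LOSS_RE = re.compile(r"val_loss: ([0-9.]+)")


def best_val_loss_epoch(log_text):
    """Return (epoch, val_loss) for the lowest val_loss in a Keras-style log."""
    best = None
    current_epoch = None
    for line in log_text.splitlines():
        epoch_match = EPOCH_RE.search(line)
        if epoch_match:
            # Remember which epoch the next metrics line belongs to.
            current_epoch = int(epoch_match.group(1))
            continue
        loss_match = VAL_LOSS_RE.search(line)
        if loss_match and current_epoch is not None:
            val_loss = float(loss_match.group(1))
            if best is None or val_loss < best[1]:
                best = (current_epoch, val_loss)
    return best


# Four epochs copied from the training output in this post.
sample_log = """
Epoch 1/300
23/23 - 2s - loss: 1.1384 - accuracy: 0.4170 - val_loss: 1.0914 - val_accuracy: 0.4317
Epoch 60/300
23/23 - 1s - loss: 0.1434 - accuracy: 0.9726 - val_loss: 0.1018 - val_accuracy: 0.9945
Epoch 150/300
23/23 - 1s - loss: 0.1120 - accuracy: 0.9877 - val_loss: 0.1377 - val_accuracy: 1.0000
Epoch 297/300
23/23 - 1s - loss: 0.0940 - accuracy: 0.9877 - val_loss: 0.1785 - val_accuracy: 1.0000
"""

print(best_val_loss_epoch(sample_log))  # prints (60, 0.1018)
```

If the training code can be edited, Keras's `EarlyStopping` callback with `monitor="val_loss"` and `restore_best_weights=True` automates the same idea. Note that a near-perfect validation accuracy alongside real-world false positives suggests the validation windows resemble the training recordings too closely, so this check alone is unlikely to solve the problem.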

NN Classifier Training Output:

Creating job… OK (ID: 2021298)

Job started

Splitting data into training and validation sets…

Splitting data into training and validation sets OK

Training model…

Training on 729 inputs, validating on 183 inputs

Epoch 1/300

23/23 - 2s - loss: 1.1384 - accuracy: 0.4170 - val_loss: 1.0914 - val_accuracy: 0.4317

Epoch 2/300

23/23 - 1s - loss: 1.0698 - accuracy: 0.4033 - val_loss: 1.0789 - val_accuracy: 0.4863

Epoch 3/300

23/23 - 1s - loss: 1.0511 - accuracy: 0.4444 - val_loss: 1.0629 - val_accuracy: 0.5628

Epoch 4/300

23/23 - 1s - loss: 0.9857 - accuracy: 0.5514 - val_loss: 1.0347 - val_accuracy: 0.6339

Epoch 5/300

23/23 - 1s - loss: 0.9431 - accuracy: 0.5885 - val_loss: 0.9967 - val_accuracy: 0.8197

Epoch 6/300

23/23 - 1s - loss: 0.8420 - accuracy: 0.6749 - val_loss: 0.9374 - val_accuracy: 0.9016

Epoch 7/300

23/23 - 1s - loss: 0.7880 - accuracy: 0.7531 - val_loss: 0.8699 - val_accuracy: 0.9781

Epoch 8/300

23/23 - 1s - loss: 0.6931 - accuracy: 0.8313 - val_loss: 0.7942 - val_accuracy: 0.9781

Epoch 9/300

23/23 - 1s - loss: 0.6259 - accuracy: 0.8560 - val_loss: 0.7052 - val_accuracy: 0.9781

Epoch 10/300

23/23 - 1s - loss: 0.5444 - accuracy: 0.8848 - val_loss: 0.6170 - val_accuracy: 0.9781

Epoch 11/300

23/23 - 1s - loss: 0.5179 - accuracy: 0.9081 - val_loss: 0.5432 - val_accuracy: 0.9781

Epoch 12/300

23/23 - 1s - loss: 0.4513 - accuracy: 0.9259 - val_loss: 0.4781 - val_accuracy: 0.9836

Epoch 13/300

23/23 - 1s - loss: 0.3944 - accuracy: 0.9355 - val_loss: 0.4161 - val_accuracy: 0.9836

Epoch 14/300

23/23 - 1s - loss: 0.3651 - accuracy: 0.9328 - val_loss: 0.3670 - val_accuracy: 0.9836

Epoch 15/300

23/23 - 1s - loss: 0.3269 - accuracy: 0.9643 - val_loss: 0.3224 - val_accuracy: 0.9836

Epoch 16/300

23/23 - 1s - loss: 0.3094 - accuracy: 0.9588 - val_loss: 0.2950 - val_accuracy: 0.9891

Epoch 17/300

23/23 - 1s - loss: 0.2886 - accuracy: 0.9588 - val_loss: 0.2561 - val_accuracy: 0.9891

Epoch 18/300

23/23 - 1s - loss: 0.2481 - accuracy: 0.9671 - val_loss: 0.2385 - val_accuracy: 0.9891

Epoch 19/300

23/23 - 1s - loss: 0.2508 - accuracy: 0.9712 - val_loss: 0.2210 - val_accuracy: 0.9891

Epoch 20/300

23/23 - 1s - loss: 0.2581 - accuracy: 0.9671 - val_loss: 0.2096 - val_accuracy: 0.9891

Epoch 21/300

23/23 - 1s - loss: 0.2402 - accuracy: 0.9657 - val_loss: 0.1900 - val_accuracy: 0.9891

Epoch 22/300

23/23 - 1s - loss: 0.2155 - accuracy: 0.9684 - val_loss: 0.1862 - val_accuracy: 0.9891

Epoch 23/300

23/23 - 1s - loss: 0.2140 - accuracy: 0.9726 - val_loss: 0.1773 - val_accuracy: 0.9891

Epoch 24/300

23/23 - 1s - loss: 0.2095 - accuracy: 0.9712 - val_loss: 0.1595 - val_accuracy: 0.9891

Epoch 25/300

23/23 - 1s - loss: 0.1967 - accuracy: 0.9808 - val_loss: 0.1614 - val_accuracy: 0.9891

Epoch 26/300

23/23 - 1s - loss: 0.1880 - accuracy: 0.9753 - val_loss: 0.1537 - val_accuracy: 0.9891

Epoch 27/300

23/23 - 1s - loss: 0.1948 - accuracy: 0.9671 - val_loss: 0.1498 - val_accuracy: 0.9891

Epoch 28/300

23/23 - 1s - loss: 0.2005 - accuracy: 0.9643 - val_loss: 0.1457 - val_accuracy: 0.9891

Epoch 29/300

23/23 - 1s - loss: 0.1868 - accuracy: 0.9781 - val_loss: 0.1457 - val_accuracy: 0.9891

Epoch 30/300

23/23 - 1s - loss: 0.1759 - accuracy: 0.9726 - val_loss: 0.1402 - val_accuracy: 0.9891

Epoch 31/300

23/23 - 1s - loss: 0.1888 - accuracy: 0.9781 - val_loss: 0.1318 - val_accuracy: 0.9891

Epoch 32/300

23/23 - 1s - loss: 0.1683 - accuracy: 0.9739 - val_loss: 0.1265 - val_accuracy: 0.9891

Epoch 33/300

23/23 - 1s - loss: 0.1503 - accuracy: 0.9739 - val_loss: 0.1200 - val_accuracy: 0.9891

Epoch 34/300

23/23 - 1s - loss: 0.1686 - accuracy: 0.9794 - val_loss: 0.1274 - val_accuracy: 0.9891

Epoch 35/300

23/23 - 1s - loss: 0.1767 - accuracy: 0.9739 - val_loss: 0.1297 - val_accuracy: 0.9891

Epoch 36/300

23/23 - 1s - loss: 0.1685 - accuracy: 0.9753 - val_loss: 0.1242 - val_accuracy: 0.9891

Epoch 37/300

23/23 - 1s - loss: 0.1382 - accuracy: 0.9808 - val_loss: 0.1228 - val_accuracy: 0.9891

Epoch 38/300

23/23 - 1s - loss: 0.1663 - accuracy: 0.9753 - val_loss: 0.1220 - val_accuracy: 0.9945

Epoch 39/300

23/23 - 1s - loss: 0.1424 - accuracy: 0.9849 - val_loss: 0.1076 - val_accuracy: 0.9945

Epoch 40/300

23/23 - 1s - loss: 0.1411 - accuracy: 0.9822 - val_loss: 0.1167 - val_accuracy: 0.9945

Epoch 41/300

23/23 - 1s - loss: 0.1566 - accuracy: 0.9726 - val_loss: 0.1177 - val_accuracy: 0.9945

Epoch 42/300

23/23 - 1s - loss: 0.1629 - accuracy: 0.9753 - val_loss: 0.1084 - val_accuracy: 0.9945

Epoch 43/300

23/23 - 1s - loss: 0.1430 - accuracy: 0.9767 - val_loss: 0.1092 - val_accuracy: 0.9945

Epoch 44/300

23/23 - 1s - loss: 0.1422 - accuracy: 0.9794 - val_loss: 0.1087 - val_accuracy: 0.9945

Epoch 45/300

23/23 - 1s - loss: 0.1454 - accuracy: 0.9781 - val_loss: 0.1083 - val_accuracy: 0.9945

Epoch 46/300

23/23 - 1s - loss: 0.1661 - accuracy: 0.9808 - val_loss: 0.1075 - val_accuracy: 0.9945

Epoch 47/300

23/23 - 1s - loss: 0.1492 - accuracy: 0.9794 - val_loss: 0.1151 - val_accuracy: 0.9945

Epoch 48/300

23/23 - 1s - loss: 0.1451 - accuracy: 0.9767 - val_loss: 0.1090 - val_accuracy: 0.9945

Epoch 49/300

23/23 - 1s - loss: 0.1453 - accuracy: 0.9739 - val_loss: 0.1065 - val_accuracy: 0.9945

Epoch 50/300

23/23 - 1s - loss: 0.1374 - accuracy: 0.9835 - val_loss: 0.1084 - val_accuracy: 0.9945

Epoch 51/300

23/23 - 1s - loss: 0.1479 - accuracy: 0.9808 - val_loss: 0.1091 - val_accuracy: 0.9945

Epoch 52/300

23/23 - 1s - loss: 0.1521 - accuracy: 0.9781 - val_loss: 0.1028 - val_accuracy: 0.9945

Epoch 53/300

23/23 - 1s - loss: 0.1400 - accuracy: 0.9835 - val_loss: 0.1078 - val_accuracy: 0.9945

Epoch 54/300

23/23 - 1s - loss: 0.1291 - accuracy: 0.9822 - val_loss: 0.1034 - val_accuracy: 0.9945

Epoch 55/300

23/23 - 1s - loss: 0.1674 - accuracy: 0.9767 - val_loss: 0.1105 - val_accuracy: 0.9945

Epoch 56/300

23/23 - 1s - loss: 0.1358 - accuracy: 0.9794 - val_loss: 0.1073 - val_accuracy: 0.9945

Epoch 57/300

23/23 - 1s - loss: 0.1407 - accuracy: 0.9726 - val_loss: 0.1067 - val_accuracy: 0.9945

Epoch 58/300

23/23 - 1s - loss: 0.1368 - accuracy: 0.9781 - val_loss: 0.1092 - val_accuracy: 0.9945

Epoch 59/300

23/23 - 1s - loss: 0.1219 - accuracy: 0.9822 - val_loss: 0.1066 - val_accuracy: 0.9945

Epoch 60/300

23/23 - 1s - loss: 0.1434 - accuracy: 0.9726 - val_loss: 0.1018 - val_accuracy: 0.9945

Epoch 61/300

23/23 - 1s - loss: 0.1319 - accuracy: 0.9822 - val_loss: 0.1037 - val_accuracy: 0.9945

Epoch 62/300

23/23 - 1s - loss: 0.1400 - accuracy: 0.9835 - val_loss: 0.1081 - val_accuracy: 0.9945

Epoch 63/300

23/23 - 1s - loss: 0.1353 - accuracy: 0.9808 - val_loss: 0.1186 - val_accuracy: 0.9945

Epoch 64/300

23/23 - 1s - loss: 0.1448 - accuracy: 0.9781 - val_loss: 0.1118 - val_accuracy: 0.9945

Epoch 65/300

23/23 - 1s - loss: 0.1399 - accuracy: 0.9835 - val_loss: 0.1102 - val_accuracy: 0.9945

Epoch 66/300

23/23 - 1s - loss: 0.1420 - accuracy: 0.9822 - val_loss: 0.1222 - val_accuracy: 0.9945

Epoch 67/300

23/23 - 1s - loss: 0.1323 - accuracy: 0.9767 - val_loss: 0.1163 - val_accuracy: 0.9945

Epoch 68/300

23/23 - 1s - loss: 0.1209 - accuracy: 0.9877 - val_loss: 0.1113 - val_accuracy: 0.9945

Epoch 69/300

23/23 - 1s - loss: 0.1358 - accuracy: 0.9781 - val_loss: 0.1182 - val_accuracy: 0.9945

Epoch 70/300

23/23 - 1s - loss: 0.1369 - accuracy: 0.9767 - val_loss: 0.1196 - val_accuracy: 0.9945

Epoch 71/300

23/23 - 1s - loss: 0.1281 - accuracy: 0.9822 - val_loss: 0.1205 - val_accuracy: 0.9945

Epoch 72/300

23/23 - 1s - loss: 0.1459 - accuracy: 0.9781 - val_loss: 0.1200 - val_accuracy: 0.9945

Epoch 73/300

23/23 - 1s - loss: 0.1338 - accuracy: 0.9808 - val_loss: 0.1275 - val_accuracy: 0.9891

Epoch 74/300

23/23 - 1s - loss: 0.1381 - accuracy: 0.9808 - val_loss: 0.1239 - val_accuracy: 0.9891

Epoch 75/300

23/23 - 1s - loss: 0.1296 - accuracy: 0.9835 - val_loss: 0.1215 - val_accuracy: 0.9945

Epoch 76/300

23/23 - 1s - loss: 0.1104 - accuracy: 0.9849 - val_loss: 0.1224 - val_accuracy: 0.9891

Epoch 77/300

23/23 - 1s - loss: 0.1163 - accuracy: 0.9849 - val_loss: 0.1196 - val_accuracy: 0.9891

Epoch 78/300

23/23 - 1s - loss: 0.1203 - accuracy: 0.9849 - val_loss: 0.1261 - val_accuracy: 0.9945

Epoch 79/300

23/23 - 1s - loss: 0.1474 - accuracy: 0.9808 - val_loss: 0.1263 - val_accuracy: 0.9945

Epoch 80/300

23/23 - 1s - loss: 0.1253 - accuracy: 0.9863 - val_loss: 0.1221 - val_accuracy: 0.9945

Epoch 81/300

23/23 - 1s - loss: 0.1212 - accuracy: 0.9877 - val_loss: 0.1186 - val_accuracy: 0.9945

Epoch 82/300

23/23 - 1s - loss: 0.1432 - accuracy: 0.9767 - val_loss: 0.1221 - val_accuracy: 0.9945

Epoch 83/300

23/23 - 1s - loss: 0.1451 - accuracy: 0.9781 - val_loss: 0.1204 - val_accuracy: 0.9945

Epoch 84/300

23/23 - 1s - loss: 0.1254 - accuracy: 0.9753 - val_loss: 0.1130 - val_accuracy: 0.9945

Epoch 85/300

23/23 - 1s - loss: 0.1252 - accuracy: 0.9835 - val_loss: 0.1136 - val_accuracy: 0.9945

Epoch 86/300

23/23 - 1s - loss: 0.1265 - accuracy: 0.9849 - val_loss: 0.1115 - val_accuracy: 0.9945

Epoch 87/300

23/23 - 1s - loss: 0.1103 - accuracy: 0.9890 - val_loss: 0.1165 - val_accuracy: 0.9945

Epoch 88/300

23/23 - 1s - loss: 0.1365 - accuracy: 0.9863 - val_loss: 0.1235 - val_accuracy: 0.9945

Epoch 89/300

23/23 - 1s - loss: 0.1209 - accuracy: 0.9849 - val_loss: 0.1150 - val_accuracy: 0.9945

Epoch 90/300

23/23 - 1s - loss: 0.1198 - accuracy: 0.9808 - val_loss: 0.1118 - val_accuracy: 0.9945

Epoch 91/300

23/23 - 1s - loss: 0.1290 - accuracy: 0.9835 - val_loss: 0.1199 - val_accuracy: 0.9945

Epoch 92/300

23/23 - 1s - loss: 0.1142 - accuracy: 0.9849 - val_loss: 0.1162 - val_accuracy: 0.9945

Epoch 93/300

23/23 - 1s - loss: 0.1268 - accuracy: 0.9863 - val_loss: 0.1156 - val_accuracy: 0.9945

Epoch 94/300

23/23 - 1s - loss: 0.1320 - accuracy: 0.9877 - val_loss: 0.1150 - val_accuracy: 0.9945

Epoch 95/300

23/23 - 1s - loss: 0.1297 - accuracy: 0.9808 - val_loss: 0.1140 - val_accuracy: 0.9945

Epoch 96/300

23/23 - 1s - loss: 0.1220 - accuracy: 0.9822 - val_loss: 0.1180 - val_accuracy: 0.9945

Epoch 97/300

23/23 - 1s - loss: 0.1321 - accuracy: 0.9835 - val_loss: 0.1174 - val_accuracy: 0.9945

Epoch 98/300

23/23 - 1s - loss: 0.1250 - accuracy: 0.9822 - val_loss: 0.1187 - val_accuracy: 0.9945

Epoch 99/300

23/23 - 1s - loss: 0.1150 - accuracy: 0.9849 - val_loss: 0.1248 - val_accuracy: 0.9945

Epoch 100/300

23/23 - 1s - loss: 0.1104 - accuracy: 0.9808 - val_loss: 0.1238 - val_accuracy: 0.9945

Epoch 101/300

23/23 - 1s - loss: 0.1423 - accuracy: 0.9822 - val_loss: 0.1238 - val_accuracy: 0.9945

Epoch 102/300

23/23 - 1s - loss: 0.1195 - accuracy: 0.9849 - val_loss: 0.1174 - val_accuracy: 0.9945

Epoch 103/300

23/23 - 1s - loss: 0.1138 - accuracy: 0.9849 - val_loss: 0.1171 - val_accuracy: 0.9945

Epoch 104/300

23/23 - 1s - loss: 0.1442 - accuracy: 0.9835 - val_loss: 0.1156 - val_accuracy: 0.9945

Epoch 105/300

23/23 - 1s - loss: 0.1141 - accuracy: 0.9822 - val_loss: 0.1168 - val_accuracy: 0.9945

Epoch 106/300

23/23 - 1s - loss: 0.1111 - accuracy: 0.9863 - val_loss: 0.1194 - val_accuracy: 0.9945

Epoch 107/300

23/23 - 1s - loss: 0.1459 - accuracy: 0.9794 - val_loss: 0.1229 - val_accuracy: 0.9945

Epoch 108/300

23/23 - 1s - loss: 0.1279 - accuracy: 0.9808 - val_loss: 0.1316 - val_accuracy: 0.9945

Epoch 109/300

23/23 - 1s - loss: 0.1213 - accuracy: 0.9849 - val_loss: 0.1279 - val_accuracy: 0.9945

Epoch 110/300

23/23 - 1s - loss: 0.1207 - accuracy: 0.9849 - val_loss: 0.1250 - val_accuracy: 0.9945

Epoch 111/300

23/23 - 1s - loss: 0.1285 - accuracy: 0.9863 - val_loss: 0.1353 - val_accuracy: 0.9945

Epoch 112/300

23/23 - 1s - loss: 0.1264 - accuracy: 0.9835 - val_loss: 0.1294 - val_accuracy: 0.9945

Epoch 113/300

23/23 - 1s - loss: 0.1216 - accuracy: 0.9835 - val_loss: 0.1303 - val_accuracy: 0.9945

Epoch 114/300

23/23 - 1s - loss: 0.1154 - accuracy: 0.9863 - val_loss: 0.1286 - val_accuracy: 0.9945

Epoch 115/300

23/23 - 1s - loss: 0.1242 - accuracy: 0.9877 - val_loss: 0.1336 - val_accuracy: 0.9945

Epoch 116/300

23/23 - 1s - loss: 0.1069 - accuracy: 0.9835 - val_loss: 0.1270 - val_accuracy: 0.9945

Epoch 117/300

23/23 - 1s - loss: 0.1179 - accuracy: 0.9849 - val_loss: 0.1307 - val_accuracy: 0.9945

Epoch 118/300

23/23 - 1s - loss: 0.1247 - accuracy: 0.9822 - val_loss: 0.1324 - val_accuracy: 0.9945

Epoch 119/300

23/23 - 1s - loss: 0.1136 - accuracy: 0.9835 - val_loss: 0.1300 - val_accuracy: 0.9945

Epoch 120/300

23/23 - 1s - loss: 0.1185 - accuracy: 0.9877 - val_loss: 0.1311 - val_accuracy: 0.9945

Epoch 121/300

23/23 - 1s - loss: 0.1111 - accuracy: 0.9863 - val_loss: 0.1263 - val_accuracy: 0.9945

Epoch 122/300

23/23 - 1s - loss: 0.1103 - accuracy: 0.9877 - val_loss: 0.1229 - val_accuracy: 0.9945

Epoch 123/300

23/23 - 1s - loss: 0.0952 - accuracy: 0.9890 - val_loss: 0.1209 - val_accuracy: 1.0000

Epoch 124/300

23/23 - 1s - loss: 0.1183 - accuracy: 0.9877 - val_loss: 0.1260 - val_accuracy: 1.0000

Epoch 125/300

23/23 - 1s - loss: 0.0973 - accuracy: 0.9877 - val_loss: 0.1237 - val_accuracy: 0.9945

Epoch 126/300

23/23 - 1s - loss: 0.1173 - accuracy: 0.9849 - val_loss: 0.1270 - val_accuracy: 0.9945

Epoch 127/300

23/23 - 1s - loss: 0.0990 - accuracy: 0.9835 - val_loss: 0.1211 - val_accuracy: 1.0000

Epoch 128/300

23/23 - 1s - loss: 0.1026 - accuracy: 0.9849 - val_loss: 0.1191 - val_accuracy: 1.0000

Epoch 129/300

23/23 - 1s - loss: 0.1154 - accuracy: 0.9863 - val_loss: 0.1160 - val_accuracy: 1.0000

Epoch 130/300

23/23 - 1s - loss: 0.1183 - accuracy: 0.9849 - val_loss: 0.1215 - val_accuracy: 0.9945

Epoch 131/300

23/23 - 1s - loss: 0.1104 - accuracy: 0.9808 - val_loss: 0.1252 - val_accuracy: 0.9945

Epoch 132/300

23/23 - 1s - loss: 0.1304 - accuracy: 0.9835 - val_loss: 0.1270 - val_accuracy: 0.9945

Epoch 133/300

23/23 - 1s - loss: 0.1059 - accuracy: 0.9849 - val_loss: 0.1254 - val_accuracy: 0.9945

Epoch 134/300

23/23 - 1s - loss: 0.1162 - accuracy: 0.9822 - val_loss: 0.1165 - val_accuracy: 1.0000

Epoch 135/300

23/23 - 1s - loss: 0.1079 - accuracy: 0.9822 - val_loss: 0.1255 - val_accuracy: 0.9945

Epoch 136/300

23/23 - 1s - loss: 0.1128 - accuracy: 0.9835 - val_loss: 0.1267 - val_accuracy: 0.9945

Epoch 137/300

23/23 - 1s - loss: 0.1080 - accuracy: 0.9808 - val_loss: 0.1233 - val_accuracy: 1.0000

Epoch 138/300

23/23 - 1s - loss: 0.1049 - accuracy: 0.9835 - val_loss: 0.1168 - val_accuracy: 1.0000

Epoch 139/300

23/23 - 1s - loss: 0.1420 - accuracy: 0.9849 - val_loss: 0.1310 - val_accuracy: 0.9945

Epoch 140/300

23/23 - 1s - loss: 0.1032 - accuracy: 0.9863 - val_loss: 0.1324 - val_accuracy: 0.9945

Epoch 141/300

23/23 - 1s - loss: 0.1117 - accuracy: 0.9849 - val_loss: 0.1333 - val_accuracy: 0.9945

Epoch 142/300

23/23 - 1s - loss: 0.0913 - accuracy: 0.9863 - val_loss: 0.1311 - val_accuracy: 0.9945

Epoch 143/300

23/23 - 1s - loss: 0.1150 - accuracy: 0.9863 - val_loss: 0.1246 - val_accuracy: 1.0000

Epoch 144/300

23/23 - 1s - loss: 0.0992 - accuracy: 0.9890 - val_loss: 0.1276 - val_accuracy: 0.9945

Epoch 145/300

23/23 - 1s - loss: 0.1015 - accuracy: 0.9863 - val_loss: 0.1324 - val_accuracy: 0.9945

Epoch 146/300

23/23 - 1s - loss: 0.1044 - accuracy: 0.9849 - val_loss: 0.1301 - val_accuracy: 0.9945

Epoch 147/300

23/23 - 1s - loss: 0.1088 - accuracy: 0.9877 - val_loss: 0.1310 - val_accuracy: 1.0000

Epoch 148/300

23/23 - 1s - loss: 0.1108 - accuracy: 0.9877 - val_loss: 0.1290 - val_accuracy: 1.0000

Epoch 149/300

23/23 - 1s - loss: 0.1066 - accuracy: 0.9835 - val_loss: 0.1298 - val_accuracy: 1.0000

Epoch 150/300

23/23 - 1s - loss: 0.1120 - accuracy: 0.9877 - val_loss: 0.1377 - val_accuracy: 1.0000

Epoch 151/300

23/23 - 1s - loss: 0.0942 - accuracy: 0.9890 - val_loss: 0.1295 - val_accuracy: 0.9945

Epoch 152/300

23/23 - 1s - loss: 0.0923 - accuracy: 0.9890 - val_loss: 0.1290 - val_accuracy: 0.9945

Epoch 153/300

23/23 - 1s - loss: 0.1031 - accuracy: 0.9849 - val_loss: 0.1335 - val_accuracy: 0.9945

Epoch 154/300

23/23 - 1s - loss: 0.1185 - accuracy: 0.9863 - val_loss: 0.1333 - val_accuracy: 0.9945

Epoch 155/300

23/23 - 1s - loss: 0.1209 - accuracy: 0.9877 - val_loss: 0.1250 - val_accuracy: 1.0000

Epoch 156/300

23/23 - 1s - loss: 0.1015 - accuracy: 0.9849 - val_loss: 0.1245 - val_accuracy: 1.0000

Epoch 157/300

23/23 - 1s - loss: 0.1299 - accuracy: 0.9877 - val_loss: 0.1324 - val_accuracy: 0.9945

Epoch 158/300

23/23 - 1s - loss: 0.1008 - accuracy: 0.9835 - val_loss: 0.1296 - val_accuracy: 1.0000

Epoch 159/300

23/23 - 1s - loss: 0.1191 - accuracy: 0.9890 - val_loss: 0.1359 - val_accuracy: 1.0000

Epoch 160/300

23/23 - 1s - loss: 0.0921 - accuracy: 0.9890 - val_loss: 0.1367 - val_accuracy: 1.0000

Epoch 161/300

23/23 - 1s - loss: 0.1147 - accuracy: 0.9835 - val_loss: 0.1375 - val_accuracy: 0.9945

Epoch 162/300

23/23 - 1s - loss: 0.1015 - accuracy: 0.9877 - val_loss: 0.1283 - val_accuracy: 1.0000

Epoch 163/300

23/23 - 1s - loss: 0.1134 - accuracy: 0.9849 - val_loss: 0.1304 - val_accuracy: 1.0000

Epoch 164/300

23/23 - 1s - loss: 0.1142 - accuracy: 0.9890 - val_loss: 0.1316 - val_accuracy: 1.0000

Epoch 165/300

23/23 - 1s - loss: 0.1070 - accuracy: 0.9877 - val_loss: 0.1333 - val_accuracy: 0.9945

Epoch 166/300

23/23 - 1s - loss: 0.1234 - accuracy: 0.9863 - val_loss: 0.1399 - val_accuracy: 0.9945

Epoch 167/300

23/23 - 1s - loss: 0.1149 - accuracy: 0.9863 - val_loss: 0.1356 - val_accuracy: 0.9945

Epoch 168/300

23/23 - 1s - loss: 0.1189 - accuracy: 0.9904 - val_loss: 0.1433 - val_accuracy: 0.9945

Epoch 169/300

23/23 - 1s - loss: 0.1215 - accuracy: 0.9822 - val_loss: 0.1439 - val_accuracy: 0.9945

Epoch 170/300

23/23 - 1s - loss: 0.1072 - accuracy: 0.9890 - val_loss: 0.1395 - val_accuracy: 0.9945

Epoch 171/300

23/23 - 1s - loss: 0.0973 - accuracy: 0.9877 - val_loss: 0.1356 - val_accuracy: 0.9945

Epoch 172/300

23/23 - 1s - loss: 0.1072 - accuracy: 0.9904 - val_loss: 0.1396 - val_accuracy: 0.9945

Epoch 173/300

23/23 - 1s - loss: 0.1069 - accuracy: 0.9863 - val_loss: 0.1401 - val_accuracy: 1.0000

Epoch 174/300

23/23 - 1s - loss: 0.1019 - accuracy: 0.9849 - val_loss: 0.1399 - val_accuracy: 0.9945

Epoch 175/300

23/23 - 1s - loss: 0.1079 - accuracy: 0.9904 - val_loss: 0.1396 - val_accuracy: 0.9945

Epoch 176/300

23/23 - 1s - loss: 0.1007 - accuracy: 0.9863 - val_loss: 0.1366 - val_accuracy: 1.0000

Epoch 177/300

23/23 - 1s - loss: 0.1122 - accuracy: 0.9863 - val_loss: 0.1344 - val_accuracy: 1.0000

Epoch 178/300

23/23 - 1s - loss: 0.1069 - accuracy: 0.9890 - val_loss: 0.1354 - val_accuracy: 1.0000

Epoch 179/300

23/23 - 1s - loss: 0.1116 - accuracy: 0.9863 - val_loss: 0.1405 - val_accuracy: 1.0000

Epoch 180/300

23/23 - 1s - loss: 0.1027 - accuracy: 0.9863 - val_loss: 0.1341 - val_accuracy: 1.0000

Epoch 181/300

23/23 - 1s - loss: 0.1207 - accuracy: 0.9863 - val_loss: 0.1433 - val_accuracy: 0.9945

Epoch 182/300

23/23 - 1s - loss: 0.1095 - accuracy: 0.9863 - val_loss: 0.1379 - val_accuracy: 0.9945

Epoch 183/300

23/23 - 1s - loss: 0.1045 - accuracy: 0.9877 - val_loss: 0.1392 - val_accuracy: 0.9945

Epoch 184/300

23/23 - 1s - loss: 0.1065 - accuracy: 0.9890 - val_loss: 0.1471 - val_accuracy: 0.9945

Epoch 185/300

23/23 - 1s - loss: 0.1144 - accuracy: 0.9863 - val_loss: 0.1491 - val_accuracy: 0.9945

Epoch 186/300

23/23 - 1s - loss: 0.1036 - accuracy: 0.9877 - val_loss: 0.1424 - val_accuracy: 0.9945

Epoch 187/300

23/23 - 1s - loss: 0.1104 - accuracy: 0.9877 - val_loss: 0.1443 - val_accuracy: 0.9945

Epoch 188/300

23/23 - 1s - loss: 0.0867 - accuracy: 0.9877 - val_loss: 0.1434 - val_accuracy: 0.9945

Epoch 189/300

23/23 - 1s - loss: 0.0987 - accuracy: 0.9904 - val_loss: 0.1442 - val_accuracy: 0.9945

Epoch 190/300

23/23 - 1s - loss: 0.1112 - accuracy: 0.9877 - val_loss: 0.1469 - val_accuracy: 0.9945

Epoch 191/300

23/23 - 1s - loss: 0.1126 - accuracy: 0.9877 - val_loss: 0.1386 - val_accuracy: 1.0000

Epoch 192/300

23/23 - 1s - loss: 0.1134 - accuracy: 0.9890 - val_loss: 0.1461 - val_accuracy: 1.0000

Epoch 193/300

23/23 - 1s - loss: 0.1051 - accuracy: 0.9863 - val_loss: 0.1401 - val_accuracy: 1.0000

Epoch 194/300

23/23 - 1s - loss: 0.0945 - accuracy: 0.9849 - val_loss: 0.1372 - val_accuracy: 1.0000

Epoch 195/300

23/23 - 1s - loss: 0.1108 - accuracy: 0.9863 - val_loss: 0.1341 - val_accuracy: 1.0000

Epoch 196/300

23/23 - 1s - loss: 0.1063 - accuracy: 0.9904 - val_loss: 0.1404 - val_accuracy: 1.0000

Epoch 197/300

23/23 - 1s - loss: 0.1030 - accuracy: 0.9877 - val_loss: 0.1401 - val_accuracy: 1.0000

Epoch 198/300

23/23 - 1s - loss: 0.1039 - accuracy: 0.9849 - val_loss: 0.1410 - val_accuracy: 1.0000

Epoch 199/300

23/23 - 1s - loss: 0.1033 - accuracy: 0.9877 - val_loss: 0.1357 - val_accuracy: 1.0000

Epoch 200/300

23/23 - 1s - loss: 0.1015 - accuracy: 0.9877 - val_loss: 0.1498 - val_accuracy: 1.0000

Epoch 201/300

23/23 - 1s - loss: 0.0949 - accuracy: 0.9877 - val_loss: 0.1430 - val_accuracy: 1.0000

Epoch 202/300

23/23 - 1s - loss: 0.1065 - accuracy: 0.9849 - val_loss: 0.1410 - val_accuracy: 1.0000

Epoch 203/300

23/23 - 1s - loss: 0.1022 - accuracy: 0.9877 - val_loss: 0.1443 - val_accuracy: 0.9945

Epoch 204/300

23/23 - 1s - loss: 0.0908 - accuracy: 0.9904 - val_loss: 0.1434 - val_accuracy: 0.9945

Epoch 205/300

23/23 - 1s - loss: 0.1130 - accuracy: 0.9890 - val_loss: 0.1427 - val_accuracy: 1.0000

Epoch 206/300

23/23 - 1s - loss: 0.0954 - accuracy: 0.9890 - val_loss: 0.1448 - val_accuracy: 1.0000

Epoch 207/300

23/23 - 1s - loss: 0.1086 - accuracy: 0.9877 - val_loss: 0.1435 - val_accuracy: 1.0000

Epoch 208/300

23/23 - 1s - loss: 0.0963 - accuracy: 0.9890 - val_loss: 0.1461 - val_accuracy: 1.0000

Epoch 209/300

23/23 - 1s - loss: 0.1104 - accuracy: 0.9904 - val_loss: 0.1446 - val_accuracy: 1.0000

Epoch 210/300

23/23 - 1s - loss: 0.0985 - accuracy: 0.9890 - val_loss: 0.1468 - val_accuracy: 1.0000

Epoch 211/300

23/23 - 1s - loss: 0.1089 - accuracy: 0.9849 - val_loss: 0.1554 - val_accuracy: 1.0000

Epoch 212/300

23/23 - 1s - loss: 0.1060 - accuracy: 0.9877 - val_loss: 0.1553 - val_accuracy: 0.9945

Epoch 213/300

23/23 - 1s - loss: 0.1167 - accuracy: 0.9849 - val_loss: 0.1519 - val_accuracy: 1.0000

Epoch 214/300

23/23 - 1s - loss: 0.1002 - accuracy: 0.9890 - val_loss: 0.1491 - val_accuracy: 1.0000

Epoch 215/300

23/23 - 1s - loss: 0.1183 - accuracy: 0.9877 - val_loss: 0.1519 - val_accuracy: 1.0000

Epoch 216/300

23/23 - 1s - loss: 0.0983 - accuracy: 0.9849 - val_loss: 0.1559 - val_accuracy: 0.9945

Epoch 217/300

23/23 - 1s - loss: 0.1125 - accuracy: 0.9890 - val_loss: 0.1551 - val_accuracy: 1.0000

Epoch 218/300

23/23 - 1s - loss: 0.1088 - accuracy: 0.9863 - val_loss: 0.1507 - val_accuracy: 1.0000

Epoch 219/300

23/23 - 1s - loss: 0.0870 - accuracy: 0.9890 - val_loss: 0.1510 - val_accuracy: 0.9945

Epoch 220/300

23/23 - 1s - loss: 0.1078 - accuracy: 0.9877 - val_loss: 0.1477 - val_accuracy: 1.0000

Epoch 221/300

23/23 - 1s - loss: 0.1167 - accuracy: 0.9890 - val_loss: 0.1476 - val_accuracy: 1.0000

Epoch 222/300

23/23 - 1s - loss: 0.1071 - accuracy: 0.9877 - val_loss: 0.1534 - val_accuracy: 1.0000

Epoch 223/300

23/23 - 1s - loss: 0.1101 - accuracy: 0.9863 - val_loss: 0.1562 - val_accuracy: 1.0000

Epoch 224/300

23/23 - 1s - loss: 0.1075 - accuracy: 0.9877 - val_loss: 0.1532 - val_accuracy: 1.0000

Epoch 225/300

23/23 - 1s - loss: 0.0986 - accuracy: 0.9863 - val_loss: 0.1483 - val_accuracy: 1.0000

Epoch 226/300

23/23 - 1s - loss: 0.1140 - accuracy: 0.9890 - val_loss: 0.1502 - val_accuracy: 1.0000

Epoch 227/300

23/23 - 1s - loss: 0.1162 - accuracy: 0.9877 - val_loss: 0.1502 - val_accuracy: 1.0000

Epoch 228/300

23/23 - 1s - loss: 0.1134 - accuracy: 0.9890 - val_loss: 0.1482 - val_accuracy: 1.0000

Epoch 229/300

23/23 - 1s - loss: 0.1174 - accuracy: 0.9904 - val_loss: 0.1471 - val_accuracy: 1.0000

Epoch 230/300

23/23 - 1s - loss: 0.1112 - accuracy: 0.9849 - val_loss: 0.1565 - val_accuracy: 1.0000

Epoch 231/300

23/23 - 1s - loss: 0.1211 - accuracy: 0.9835 - val_loss: 0.1557 - val_accuracy: 1.0000

Epoch 232/300

23/23 - 1s - loss: 0.1016 - accuracy: 0.9918 - val_loss: 0.1506 - val_accuracy: 1.0000

Epoch 233/300

23/23 - 1s - loss: 0.1137 - accuracy: 0.9863 - val_loss: 0.1526 - val_accuracy: 1.0000

Epoch 234/300

23/23 - 1s - loss: 0.1086 - accuracy: 0.9890 - val_loss: 0.1582 - val_accuracy: 1.0000

Epoch 235/300

23/23 - 1s - loss: 0.1137 - accuracy: 0.9877 - val_loss: 0.1627 - val_accuracy: 1.0000

Epoch 236/300

23/23 - 1s - loss: 0.0911 - accuracy: 0.9877 - val_loss: 0.1553 - val_accuracy: 1.0000

Epoch 237/300

23/23 - 1s - loss: 0.1097 - accuracy: 0.9890 - val_loss: 0.1603 - val_accuracy: 1.0000

Epoch 238/300

23/23 - 1s - loss: 0.1106 - accuracy: 0.9890 - val_loss: 0.1555 - val_accuracy: 1.0000

Epoch 239/300

23/23 - 1s - loss: 0.1109 - accuracy: 0.9904 - val_loss: 0.1600 - val_accuracy: 1.0000

Epoch 240/300

23/23 - 1s - loss: 0.1066 - accuracy: 0.9904 - val_loss: 0.1633 - val_accuracy: 1.0000

Epoch 241/300

23/23 - 1s - loss: 0.1040 - accuracy: 0.9849 - val_loss: 0.1665 - val_accuracy: 1.0000

Epoch 242/300

23/23 - 1s - loss: 0.0916 - accuracy: 0.9890 - val_loss: 0.1559 - val_accuracy: 1.0000

Epoch 243/300

23/23 - 1s - loss: 0.0950 - accuracy: 0.9890 - val_loss: 0.1575 - val_accuracy: 1.0000

Epoch 244/300

23/23 - 1s - loss: 0.0899 - accuracy: 0.9890 - val_loss: 0.1593 - val_accuracy: 1.0000

Epoch 245/300

23/23 - 1s - loss: 0.0946 - accuracy: 0.9877 - val_loss: 0.1611 - val_accuracy: 1.0000

Epoch 246/300

23/23 - 1s - loss: 0.1142 - accuracy: 0.9877 - val_loss: 0.1633 - val_accuracy: 1.0000

Epoch 247/300

23/23 - 1s - loss: 0.1155 - accuracy: 0.9863 - val_loss: 0.1647 - val_accuracy: 1.0000

Epoch 248/300

23/23 - 1s - loss: 0.1090 - accuracy: 0.9890 - val_loss: 0.1697 - val_accuracy: 1.0000

Epoch 249/300

23/23 - 1s - loss: 0.1145 - accuracy: 0.9849 - val_loss: 0.1701 - val_accuracy: 1.0000

Epoch 250/300

23/23 - 1s - loss: 0.1110 - accuracy: 0.9877 - val_loss: 0.1731 - val_accuracy: 0.9945

Epoch 251/300

23/23 - 1s - loss: 0.0980 - accuracy: 0.9877 - val_loss: 0.1649 - val_accuracy: 0.9945

Epoch 252/300

23/23 - 1s - loss: 0.1098 - accuracy: 0.9890 - val_loss: 0.1655 - val_accuracy: 1.0000

Epoch 253/300

23/23 - 1s - loss: 0.1001 - accuracy: 0.9863 - val_loss: 0.1579 - val_accuracy: 1.0000

Epoch 254/300

23/23 - 1s - loss: 0.1030 - accuracy: 0.9890 - val_loss: 0.1597 - val_accuracy: 1.0000

Epoch 255/300

23/23 - 1s - loss: 0.0978 - accuracy: 0.9863 - val_loss: 0.1602 - val_accuracy: 1.0000

Epoch 256/300

23/23 - 1s - loss: 0.0913 - accuracy: 0.9904 - val_loss: 0.1610 - val_accuracy: 1.0000

Epoch 257/300

23/23 - 1s - loss: 0.1166 - accuracy: 0.9890 - val_loss: 0.1665 - val_accuracy: 1.0000

Epoch 258/300

23/23 - 1s - loss: 0.1178 - accuracy: 0.9877 - val_loss: 0.1668 - val_accuracy: 1.0000

Epoch 259/300

23/23 - 1s - loss: 0.1090 - accuracy: 0.9835 - val_loss: 0.1740 - val_accuracy: 1.0000

Epoch 260/300

23/23 - 1s - loss: 0.1038 - accuracy: 0.9890 - val_loss: 0.1734 - val_accuracy: 1.0000

Epoch 261/300

23/23 - 1s - loss: 0.1026 - accuracy: 0.9904 - val_loss: 0.1702 - val_accuracy: 1.0000

Epoch 262/300

23/23 - 1s - loss: 0.0945 - accuracy: 0.9890 - val_loss: 0.1659 - val_accuracy: 1.0000

Epoch 263/300

23/23 - 1s - loss: 0.1229 - accuracy: 0.9863 - val_loss: 0.1721 - val_accuracy: 0.9945

Epoch 264/300

23/23 - 1s - loss: 0.1002 - accuracy: 0.9890 - val_loss: 0.1629 - val_accuracy: 1.0000

Epoch 265/300

23/23 - 1s - loss: 0.1062 - accuracy: 0.9890 - val_loss: 0.1638 - val_accuracy: 1.0000

Epoch 266/300

23/23 - 1s - loss: 0.1035 - accuracy: 0.9877 - val_loss: 0.1671 - val_accuracy: 1.0000

Epoch 267/300

23/23 - 1s - loss: 0.0984 - accuracy: 0.9877 - val_loss: 0.1675 - val_accuracy: 1.0000

Epoch 268/300

23/23 - 1s - loss: 0.1111 - accuracy: 0.9835 - val_loss: 0.1751 - val_accuracy: 1.0000

Epoch 269/300

23/23 - 1s - loss: 0.1005 - accuracy: 0.9849 - val_loss: 0.1769 - val_accuracy: 1.0000

Epoch 270/300

23/23 - 1s - loss: 0.1111 - accuracy: 0.9877 - val_loss: 0.1825 - val_accuracy: 1.0000

Epoch 271/300

23/23 - 1s - loss: 0.0984 - accuracy: 0.9890 - val_loss: 0.1787 - val_accuracy: 1.0000

Epoch 272/300

23/23 - 1s - loss: 0.1128 - accuracy: 0.9904 - val_loss: 0.1744 - val_accuracy: 1.0000

Epoch 273/300

23/23 - 1s - loss: 0.1025 - accuracy: 0.9877 - val_loss: 0.1743 - val_accuracy: 1.0000

Epoch 274/300

23/23 - 1s - loss: 0.0965 - accuracy: 0.9904 - val_loss: 0.1712 - val_accuracy: 1.0000

Epoch 275/300

23/23 - 1s - loss: 0.1066 - accuracy: 0.9890 - val_loss: 0.1762 - val_accuracy: 1.0000

Epoch 276/300

23/23 - 1s - loss: 0.1035 - accuracy: 0.9863 - val_loss: 0.1763 - val_accuracy: 1.0000

Epoch 277/300

23/23 - 1s - loss: 0.1118 - accuracy: 0.9835 - val_loss: 0.1770 - val_accuracy: 1.0000

Epoch 278/300

23/23 - 1s - loss: 0.1264 - accuracy: 0.9863 - val_loss: 0.1762 - val_accuracy: 1.0000

Epoch 279/300

23/23 - 1s - loss: 0.0974 - accuracy: 0.9890 - val_loss: 0.1746 - val_accuracy: 1.0000

Epoch 280/300

23/23 - 1s - loss: 0.0995 - accuracy: 0.9835 - val_loss: 0.1706 - val_accuracy: 1.0000

Epoch 281/300

23/23 - 1s - loss: 0.1092 - accuracy: 0.9890 - val_loss: 0.1696 - val_accuracy: 1.0000

Epoch 282/300

23/23 - 1s - loss: 0.0991 - accuracy: 0.9863 - val_loss: 0.1704 - val_accuracy: 1.0000

Epoch 283/300

23/23 - 1s - loss: 0.1022 - accuracy: 0.9890 - val_loss: 0.1762 - val_accuracy: 1.0000

Epoch 284/300

23/23 - 1s - loss: 0.1049 - accuracy: 0.9877 - val_loss: 0.1776 - val_accuracy: 1.0000

Epoch 285/300

23/23 - 1s - loss: 0.0955 - accuracy: 0.9904 - val_loss: 0.1789 - val_accuracy: 1.0000

Epoch 286/300

23/23 - 1s - loss: 0.1111 - accuracy: 0.9822 - val_loss: 0.1801 - val_accuracy: 1.0000

Epoch 287/300

23/23 - 1s - loss: 0.0976 - accuracy: 0.9835 - val_loss: 0.1766 - val_accuracy: 1.0000

Epoch 288/300

23/23 - 1s - loss: 0.1106 - accuracy: 0.9835 - val_loss: 0.1733 - val_accuracy: 1.0000

Epoch 289/300

23/23 - 1s - loss: 0.0868 - accuracy: 0.9904 - val_loss: 0.1726 - val_accuracy: 1.0000

Epoch 290/300

23/23 - 1s - loss: 0.1025 - accuracy: 0.9877 - val_loss: 0.1771 - val_accuracy: 1.0000

Epoch 291/300

23/23 - 1s - loss: 0.0940 - accuracy: 0.9890 - val_loss: 0.1765 - val_accuracy: 1.0000

Epoch 292/300

23/23 - 1s - loss: 0.0885 - accuracy: 0.9890 - val_loss: 0.1720 - val_accuracy: 1.0000

Epoch 293/300

23/23 - 1s - loss: 0.0891 - accuracy: 0.9890 - val_loss: 0.1758 - val_accuracy: 1.0000

Epoch 294/300

23/23 - 1s - loss: 0.1085 - accuracy: 0.9863 - val_loss: 0.1804 - val_accuracy: 1.0000

Epoch 295/300

23/23 - 1s - loss: 0.0989 - accuracy: 0.9849 - val_loss: 0.1769 - val_accuracy: 1.0000

Epoch 296/300

23/23 - 1s - loss: 0.0966 - accuracy: 0.9890 - val_loss: 0.1761 - val_accuracy: 1.0000

Epoch 297/300

23/23 - 1s - loss: 0.0940 - accuracy: 0.9877 - val_loss: 0.1785 - val_accuracy: 1.0000

Epoch 298/300

23/23 - 1s - loss: 0.1068 - accuracy: 0.9890 - val_loss: 0.1822 - val_accuracy: 1.0000

Epoch 299/300

23/23 - 1s - loss: 0.1052 - accuracy: 0.9877 - val_loss: 0.1854 - val_accuracy: 1.0000

Epoch 300/300

23/23 - 1s - loss: 0.1122 - accuracy: 0.9904 - val_loss: 0.1781 - val_accuracy: 1.0000

Finished training

Saving best performing model…

Converting TensorFlow Lite float32 model…

Converting TensorFlow Lite int8 quantized model with int8 input and output…

Calculating performance metrics…

Profiling float32 model…

Profiling int8 model…

Model training complete

Job completed

I would say that the model is overfitting.

Could you add some data to the Model testing step and let us know the results?

Thanks,

Omar

I’m using the EON Tuner to try to sort ML models by precision for the “chimes” audio class, but the sort function doesn’t appear to do anything. Regardless of what I click, the list of models does not re-sort in any way.
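For reference, per-class precision can also be computed and sorted by hand from test predictions. This is only a minimal sketch: the label lists and the model names `model_a` and `model_b` are made-up placeholders, not output from the EON Tuner.

```python
def precision(true_labels, predicted_labels, target):
    """Precision for `target`: true positives / (true positives + false positives)."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if p == target and t == target)
    fp = sum(1 for t, p in pairs if p == target and t != target)
    if tp + fp == 0:
        return 0.0  # the model never predicted this class
    return tp / (tp + fp)


# Hypothetical ground truth and predictions for the same labelled test windows.
true_labels = ["chimes", "noise", "noise", "chimes", "noise", "noise"]
candidates = {
    "model_a": ["chimes", "chimes", "noise", "chimes", "noise", "chimes"],
    "model_b": ["chimes", "noise", "noise", "noise", "noise", "noise"],
}

# Rank candidate models by precision on the "chimes" class, best first.
ranked = sorted(
    candidates,
    key=lambda name: precision(true_labels, candidates[name], "chimes"),
    reverse=True,
)

for name in ranked:
    print(name, precision(true_labels, candidates[name], "chimes"))
```

A model with fewer false positives ranks higher here even if it misses some chimes, which matches the goal of keeping false positives near zero.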

I see what you mean. What I am saying is that the model has been trained so heavily that it fits the training dataset too well and does not generalize to other data. That is overfitting.

I would highly recommend sharing the model testing results so we can help you further.
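One rough way to read a training log like the one posted above is to compare training loss with validation loss for each epoch. The sketch below parses the log lines and reports the average gap; the helper names `parse_epochs` and `mean_loss_gap` are made up for this example. A validation loss that sits above the training loss is only a hint of overfitting, and held-out test data is still needed to confirm it.

```python
import re

# Matches the metrics part of a Keras-style epoch line.
LOG_LINE = re.compile(
    r"loss: (?P<loss>[\d.]+) - accuracy: (?P<acc>[\d.]+) - "
    r"val_loss: (?P<val_loss>[\d.]+) - val_accuracy: (?P<val_acc>[\d.]+)"
)


def parse_epochs(log_text):
    """Return one dict of float metrics per epoch line found in the log."""
    return [
        {key: float(value) for key, value in match.groupdict().items()}
        for match in LOG_LINE.finditer(log_text)
    ]


def mean_loss_gap(epochs):
    """Average of (val_loss - loss); positive means validation loss is higher."""
    return sum(e["val_loss"] - e["loss"] for e in epochs) / len(epochs)


# The last three epoch lines from the log in this thread.
log_text = """
23/23 - 1s - loss: 0.1068 - accuracy: 0.9890 - val_loss: 0.1822 - val_accuracy: 1.0000
23/23 - 1s - loss: 0.1052 - accuracy: 0.9877 - val_loss: 0.1854 - val_accuracy: 1.0000
23/23 - 1s - loss: 0.1122 - accuracy: 0.9904 - val_loss: 0.1781 - val_accuracy: 1.0000
"""

epochs = parse_epochs(log_text)
gap = mean_loss_gap(epochs)
print(f"{len(epochs)} epochs, mean val_loss - loss = {gap:.4f}")
```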

Thanks,

Omar

Hi @OmarShrit, I have a similar question. I have been working with Edge Impulse to detect water faucet sounds and deployed the model on an ESP32 WROOM board with an INMP441 external mic. It works great and predicts water flow really well. However, it falsely turns ON for vacuum cleaner sounds, loud fan sounds, or very loud talking. Although I have tried to include these sounds as a noise category in Edge Impulse, it somehow does not work in the real world, i.e. at the board level. But the Edge Impulse classifier works correctly when you test the model online, with an accuracy of 97%. I have played around with the MFCC, MFE and Spectrogram methods, but they all behave similarly in real-world situations. Do you have any insights on this? Thanks and much appreciated.

Hi Akash,

You can add an Anomaly detection block after the classification block and train it; it will detect all other sounds and classify them as anomalies.
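The general idea behind this kind of anomaly check can be sketched as a distance test: learn where the known sounds sit in feature space, then flag anything that lands far away. The code below is a simplified single-centroid stand-in, not Edge Impulse's implementation; the feature vectors, the `margin` value, and the function names are all assumptions made for illustration.

```python
import math


def centroid(vectors):
    """Element-wise mean of equally sized feature vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def fit_threshold(train_features, margin=1.5):
    """Return (centroid, threshold), where the threshold is the largest
    training distance scaled by `margin` (a hypothetical tuning knob)."""
    c = centroid(train_features)
    max_dist = max(math.dist(c, v) for v in train_features)
    return c, max_dist * margin


def is_anomaly(feature, c, threshold):
    """True if the feature vector lies farther from the centroid than allowed."""
    return math.dist(c, feature) > threshold


# Hypothetical 2-D features for known "water" windows.
train_features = [[1.0, 1.0], [1.2, 0.9], [0.9, 1.1]]
c, threshold = fit_threshold(train_features)

print(is_anomaly([1.05, 1.0], c, threshold))  # close to the training data
print(is_anomaly([5.0, 5.0], c, threshold))   # far from the training data
```

In practice the anomaly score would be combined with the classifier output, so a "water" prediction is only trusted when the window is not flagged as an anomaly.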

Regards,

Omar