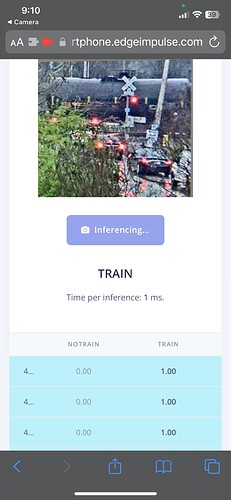

I managed to get a working model that can classify a specific webcam image as either active (train crossing arm down / lights activated) or no train. If I understand EI's usage model correctly, I now need to take the generated code and place it elsewhere for live classification. Next, I want to find a method and device to classify a regularly captured snapshot with the model, with the ultimate goal of displaying alerts on a website. The simpler, the better.

Raspberry Pi? I’d welcome any ideas.
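One simple design is a loop that captures a snapshot every few seconds, classifies it, and publishes an alert state for the website to read. The part worth getting right is not flipping the alert on a single noisy frame. Below is a minimal JavaScript sketch of that decision step only. It assumes the classifier returns a `results` array of `{ label, value }` pairs, like the scores shown in the EI dashboard. The label `"train"`, the 0.8 threshold, the two-snapshot debounce, and the function names are all hypothetical.

```javascript
// Minimal sketch: turn per-snapshot classification results into a stable
// alert state. ASSUMPTION: each result looks like
// { results: [{ label: "train", value: 0.93 }, { label: "no_train", value: 0.07 }] }.
// The label "train", threshold 0.8 and confirmCount 2 are hypothetical choices.

// Score for one label, or 0 if the label is missing from the result.
function scoreFor(result, label) {
  const entry = result.results.find((r) => r.label === label);
  return entry ? entry.value : 0;
}

// Returns an update(result) function that only changes the alert state after
// `confirmCount` consecutive snapshots disagree with the current state.
function makeAlertTracker({ label = "train", threshold = 0.8, confirmCount = 2 } = {}) {
  let streak = 0;     // consecutive snapshots disagreeing with the current state
  let active = false; // alert state the website would display

  return function update(result) {
    const detected = scoreFor(result, label) >= threshold;
    if (detected !== active) {
      streak += 1;
      if (streak >= confirmCount) {
        active = detected;
        streak = 0;
      }
    } else {
      streak = 0;
    }
    return active;
  };
}
```

On a Raspberry Pi or in a browser, `update` would be called once per captured snapshot, and the website only needs the latest boolean. How the snapshot is captured and passed to the classifier is deliberately left out here.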

Perhaps I'm not asking the right questions, but I ask for patience and guidance, as I'm not sure where else to turn.

- The run on mobile phone feature appears to work well.

- What I'd rather do is classify still images (same size and source as the ones I used to train the model).

- I don’t have working code to do so in a WASM deployment.

- The provided examples show how to copy/paste raw features into the JavaScript to confirm the result matches the EI dashboard classification. That works.

- If a webcam (or a device's camera) works, it seems it should also be possible to classify still images.
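Since the examples already accept raw features pasted in as an array, classifying a still image mostly comes down to producing that same array from the image's pixels. A minimal sketch, assuming the packed one-number-per-pixel `0xRRGGBB` format that the dashboard displays as raw features for image data, and an image already at the training resolution. The function name `rgbaToRawFeatures` is hypothetical.

```javascript
// Minimal sketch: convert an RGBA pixel buffer (4 bytes per pixel, as a
// browser canvas's getImageData().data provides) into one packed 0xRRGGBB
// number per pixel. ASSUMPTION: this matches the raw-features format shown
// in the dashboard for image data. Alpha is ignored.
function rgbaToRawFeatures(rgba, width, height) {
  const expected = width * height * 4;
  if (rgba.length !== expected) {
    throw new Error(`expected ${expected} bytes, got ${rgba.length}`);
  }
  const features = new Array(width * height);
  for (let i = 0; i < width * height; i++) {
    const r = rgba[i * 4];
    const g = rgba[i * 4 + 1];
    const b = rgba[i * 4 + 2];
    features[i] = (r << 16) | (g << 8) | b; // pack as 0xRRGGBB
  }
  return features;
}
```

For example, `rgbaToRawFeatures(new Uint8ClampedArray([255, 0, 0, 255, 0, 0, 255, 255]), 2, 1)` gives `[16711680, 255]`, i.e. `[0xff0000, 0x0000ff]`. Comparing a few values (via `.toString(16)`) against the dashboard's raw features for the same image is a quick way to confirm the format before wiring it to the classifier.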

Hi @Gigcity,

It sounds like you wish to deploy your model as a library and write an application around that library. Please see this guide on the possible ways to deploy your model as a library for a variety of languages and targets.

Possibly.

I have already started with that guide, and chose WASM.

I assumed the working webcam/streaming examples could be modified to classify still images instead.
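One practical difference between a live camera stream and a still image is that a camera frame rarely matches the model's input size, so it has to be cropped and scaled first. A snapshot captured at the training size can skip this step. If a snapshot does arrive at a different size, a sketch of a center crop to the target aspect ratio followed by nearest-neighbor scaling is below. It assumes the impulse crops to the shortest axis rather than squashing the image, and EI's own resizing may interpolate differently, so scores can differ slightly from the dashboard. The function name is hypothetical.

```javascript
// Minimal sketch: center-crop an RGBA buffer to the target aspect ratio,
// then scale it to targetW x targetH using nearest-neighbor sampling.
// ASSUMPTION: the impulse crops to the shortest axis (no squashing).
function cropAndResizeRGBA(src, srcW, srcH, targetW, targetH) {
  // Largest centered region with the target aspect ratio.
  const scale = Math.min(srcW / targetW, srcH / targetH);
  const offX = (srcW - targetW * scale) / 2;
  const offY = (srcH - targetH * scale) / 2;

  const out = new Uint8ClampedArray(targetW * targetH * 4);
  for (let y = 0; y < targetH; y++) {
    // Sample at the center of each output pixel, mapped back into the crop.
    const sy = Math.min(srcH - 1, Math.floor(offY + (y + 0.5) * scale));
    for (let x = 0; x < targetW; x++) {
      const sx = Math.min(srcW - 1, Math.floor(offX + (x + 0.5) * scale));
      const si = (sy * srcW + sx) * 4;
      const di = (y * targetW + x) * 4;
      out[di] = src[si];         // R
      out[di + 1] = src[si + 1]; // G
      out[di + 2] = src[si + 2]; // B
      out[di + 3] = src[si + 3]; // A
    }
  }
  return out;
}
```

When the snapshot is already at the target size, the function returns an identical copy, so it is safe to leave in the pipeline either way.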

The line between ‘ready to run’ and ‘start from scratch’ isn’t clear to me.

Thanks for the response.