Question/Issue:

Hi everyone! I have an SK-AM62 from Texas Instruments and a MobileNetV2 model in Linux (AARCH64) format. How can I find out whether the model runs on only one core? Or is there any parallelization across several ARM cores?

Project ID:

327535

Context/Use case:

Are there any commands or bash scripts that can show this?

Good question @kira9k

Can you post the output of htop while you are running your Impulse / application? Hopefully we can answer based on that output.
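As a scriptable alternative to watching htop, per-core load can be sampled from `/proc/stat` while the Impulse is running. This is a minimal sketch, assuming a standard Linux `/proc` filesystem on the board; the function names are hypothetical helpers, not part of any Edge Impulse or TI tool. If inference is single-threaded, the per-core busy fractions should add up to roughly one core's worth (about 100% in total), even if the scheduler moves the thread between cores during the sample.

```python
import time


def parse_proc_stat(text):
    """Return {core_name: (idle, total)} for each per-core 'cpuN' line of /proc/stat."""
    cores = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip the aggregate "cpu" line and non-CPU lines such as "intr" or "ctxt".
        if not fields or not fields[0].startswith("cpu") or fields[0] == "cpu":
            continue
        # user nice system idle iowait irq softirq steal (guest time is already in user)
        values = [int(v) for v in fields[1:9]]
        idle = values[3] + values[4]  # idle + iowait
        cores[fields[0]] = (idle, sum(values))
    return cores


def core_utilization(before, after):
    """Busy fraction (0.0 to 1.0) per core between two parse_proc_stat() snapshots."""
    result = {}
    for core, (idle1, total1) in after.items():
        idle0, total0 = before[core]
        d_total = total1 - total0
        d_idle = idle1 - idle0
        result[core] = 0.0 if d_total == 0 else 1.0 - d_idle / d_total
    return result


def sample(interval=1.0):
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = parse_proc_stat(f.read())
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = parse_proc_stat(f.read())
    return core_utilization(before, after)


if __name__ == "__main__":
    # Run this in a second terminal while the inference loop is running.
    for core, busy in sorted(sample().items()):
        print(f"{core}: {busy:5.1%}")
```

Run it once with the model idle and once during inference; comparing the two readings makes it easy to see how many cores the inference actually keeps busy.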

It would depend on how you deploy the Impulse / model:

Edge Impulse Linux: the cores can be set manually. I will need to check with @jbuckEI for the recommended cores / steps.
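At the OS level, one generic Linux way to inspect or restrict which cores a process may use is its CPU affinity mask. This is a sketch using Python's standard `os.sched_getaffinity` / `os.sched_setaffinity` (Linux only); the helper names are hypothetical, and it is an assumption that this is what "setting cores manually" refers to for Edge Impulse Linux. Note that pinning only limits where code runs; it does not make a single-threaded model run in parallel.

```python
import os


def allowed_cores(pid=0):
    """Return the sorted list of CPU cores the process may run on (pid 0 = this process)."""
    return sorted(os.sched_getaffinity(pid))


def pin_to_cores(cores, pid=0):
    """Restrict the process to the given cores and return the resulting mask.

    On Linux, child processes started after this call inherit the mask.
    """
    os.sched_setaffinity(pid, set(cores))
    return allowed_cores(pid)


if __name__ == "__main__":
    print("allowed cores:", allowed_cores())
    print("os.cpu_count():", os.cpu_count())
    # Hypothetical example: keep this process (and anything it spawns) on its first allowed core.
    print("after pinning:", pin_to_cores(allowed_cores()[:1]))
```

From a shell, `taskset -p <pid>` shows the same affinity mask for an already running process.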

C++ library: this can be integrated into your ARM-based TI processor application.

Integration on the TI processor: using TI's SDK or tools such as the TIDL (Texas Instruments Deep Learning) library for model integration.

I will defer to our TI expert @jbuckEI for an answer on which deployment method is recommended. Do you know how many cores are used by our Linux / C++ / TI SDK deployments on this device?

Best

Eoin

Are you using the AM62 with no AI accelerator, or the AM62A with the accelerator? For the non-accelerated case, TI has documentation on how to use it with Edge Impulse here.

For MobileNetV2 models you will need to use the Linux AARCH64 deployment. If you need accelerated support, it would have to be submitted as a feature request for new development in Edge Impulse.

Either way, only one core is used; the inference code is not parallelized.

Hello, @jbuckEI. I'm using the AM62 without acceleration. I also used the Python SDK for prediction.

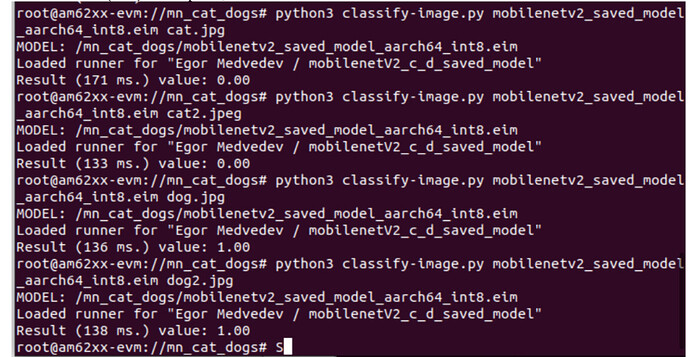

Hello, @Eoin! I used the Python SDK for prediction, and the model format is Linux (AARCH64). I am also sending a photo of the command line.

Hi @kira9k

As Josh stated, the model's inference runs sequentially on one core rather than being parallelised across multiple cores.

Hope that clarifies,

Best

Eoin