Hi team,

I have a question about how the EON Tuner works. I'm mainly curious about model selection.

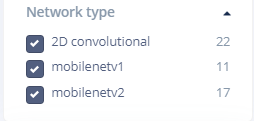

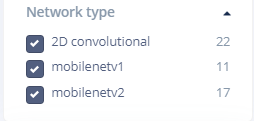

I can see 22 models under the 2D convolution network type.

Are these 22 models predefined? If so, how were they selected?

Thanks,

Ramson Jehu K

Hi @Ramson,

The EON Tuner selects models by varying the following parameters based on your selected target device and latency:

In the near future we’ll be releasing an API that will allow you to customize the EON Tuner search space. Using this API you could, for example, exclude all ‘RGB’ models from the search space.

I hope this answers your question. Please let me know if anything is unclear.

Best regards,

Mathijs

This would be very helpful. I really like the EON Tuner because it makes trial and error much faster, but it would be great to filter its generated models not only by inference time and target device but also by parameters such as window size (for audio classification), frame length, etc. Glad you are working on it!