Hello,

I have been exploring this platform for a few days now and I really love it. I have just one request. In the model deployment tab, we can download our model as a C++ library (for Arduino and other boards) or as a binary file for the STM boards.

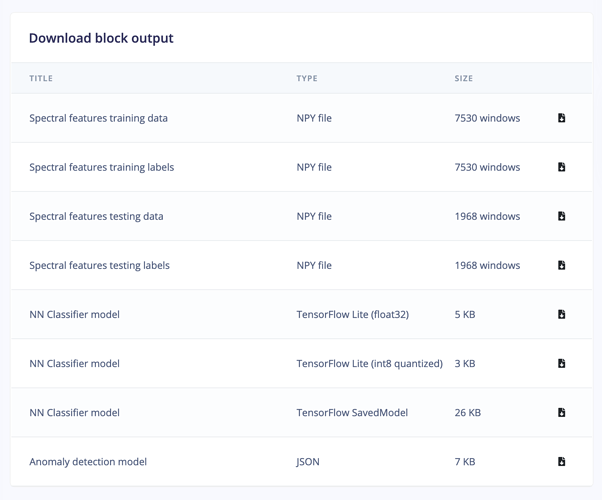

Since you are using TensorFlow Lite in the backend, could you please also include an option to export the model's .tflite file? That would make it very easy for us to run these models on Android phones or even on low-cost computers like the Raspberry Pi.

I would also like to say how much I appreciate the effort that has been put into developing this site. I simply love the user interface and the ease of access.