When my training has completed, the final validation accuracy is much lower than anything I was seeing in the logs during training. Are they calculated differently? Is the final one the actual value, and are the values in the logs just estimates?

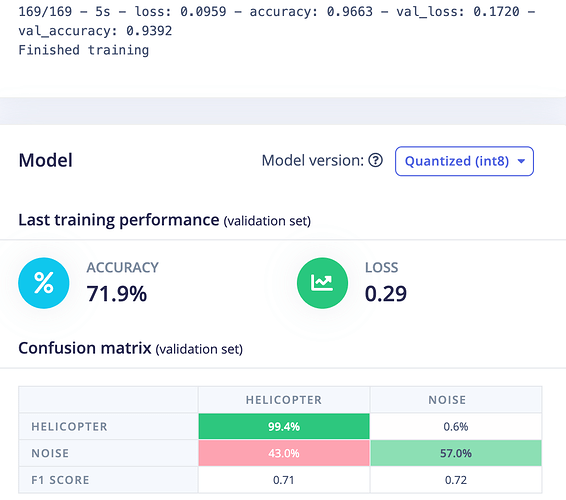

In the logs I am seeing a val_accuracy of 0.9, but in the summary it is 0.71. The loss also differs: 0.17 vs 0.29.

This is using a Spectrogram block and a 1D Conv NN.

Never mind… It looks like that is the difference between the float and the int8 (quantized) versions of the model!! I am so used to them having about the same performance that I never switch between them. On the plus side, I just found a way to improve my model performance by about 20 points!


@Robotastic yep, quantization accuracy loss is pretty high on spectrogram blocks right now (I guess you’re using that). We’re looking at fixing this by using another normalization step.
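
For anyone wondering why a normalization step could matter here, below is a toy sketch in plain Python. It is not the Edge Impulse implementation, and the log scaling is only an assumed stand-in for whatever normalization ends up being used. The helper names (`quantize_int8`, `distinct_levels`) and the sample values are made up for illustration. The idea: int8 has only 256 levels, so if a few large spectrogram values stretch the range, the many small values collapse onto the same few levels.

```python
import math


def quantize_int8(values, lo, hi):
    """Affine-quantize floats to int8 codes using the range [lo, hi].

    Hypothetical helper for illustration only.
    """
    scale = (hi - lo) / 255.0               # size of one int8 step
    zero_point = round(-128 - lo / scale)   # int8 code that maps to 0.0
    codes = []
    for v in values:
        q = round(v / scale) + zero_point
        codes.append(max(-128, min(127, q)))  # clamp to the int8 range
    return codes


def distinct_levels(values):
    """Count how many different int8 codes the values end up using."""
    return len(set(quantize_int8(values, min(values), max(values))))


# Made-up "spectrogram-like" features: mostly small values, one large peak.
raw = [0.05, 0.1, 0.2, 0.4, 0.8, 100.0]

# Assumed normalization: log scaling compresses the dynamic range.
normalized = [math.log10(v) for v in raw]

raw_levels = distinct_levels(raw)
normalized_levels = distinct_levels(normalized)

print("distinct int8 codes, raw:       ", raw_levels)
print("distinct int8 codes, normalized:", normalized_levels)
```

With the raw values, the single peak sets the step size, so several of the small values share a code and the int8 model can no longer tell them apart. After compressing the range, each value gets its own code. A real model has many more features than this, but the same effect is one plausible reason the float and int8 numbers diverge.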