How to interpret the results from EdgeImpulse?

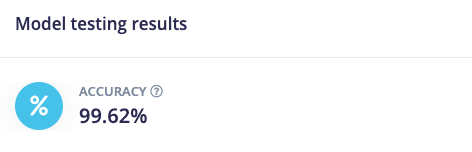

I trained a YOLOv5-medium model on approximately 2700 images. After testing it on the 20% of the images that are reserved for testing, I get an accuracy of 99.62%. That seems awesome.

However, when I open the logs of the test run it shows the following:

calculate_object_detection_metrics

{"support": 533, "coco_map": 0.9371042251586914, "coco_metrics":

{"MaP": 0.02786771954277207,

"MaP@[IoU=50]": 0.12573883545581718,

"MaP@[IoU=75]": 0.004394394864889173,

"MaP@[area=small]": 0.02786771954277207,

"MaP@[area=medium]": -1.0,

"MaP@[area=large]": -1.0,

"Recall@[max_detections=1]": 0.10357815442561205,

"Recall@[max_detections=10]": 0.10357815442561205,

"Recall@[max_detections=100]": 0.10357815442561205,

"Recall@[area=small]": 0.10357815442561205,

"Recall@[area=medium]": -1.0,

"Recall@[area=large]": -1.0,

"support": {"images": 533, "annotations": 533, "detections": 531}},

"class_names": ["queen"]}
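As a reading aid, here is a minimal sketch that separates the populated metrics from the -1.0 entries. It assumes (this is not confirmed for Edge Impulse) that the log follows the usual COCO evaluation convention, where -1.0 means "no ground-truth objects in this bucket" rather than a real score, and where "small" means a box area under 32×32 pixels. The helper name `split_metrics` is hypothetical. Under that assumption, the fact that "MaP" and "MaP@[area=small]" are identical would suggest every annotated object in the test set falls into the small bucket.

```python
import json

# Subset of the log above, written with straight quotes so it parses as JSON.
log_text = """
{"MaP": 0.02786771954277207,
 "MaP@[IoU=50]": 0.12573883545581718,
 "MaP@[IoU=75]": 0.004394394864889173,
 "MaP@[area=small]": 0.02786771954277207,
 "MaP@[area=medium]": -1.0,
 "MaP@[area=large]": -1.0}
"""


def split_metrics(metrics):
    """Split metrics into populated values and -1.0 placeholders.

    Assumption: a negative value means 'no data for this bucket',
    as in the standard COCO evaluation tools.
    """
    populated = {name: value for name, value in metrics.items() if value >= 0}
    empty = [name for name, value in metrics.items() if value < 0]
    return populated, empty


coco_metrics = json.loads(log_text)
populated, empty = split_metrics(coco_metrics)

for name, value in populated.items():
    print(f"{name}: {value:.4f}")
print("no data for:", empty)
```
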

I can see that:

"MaP@[IoU=50]": 0.12573883545581718,

"MaP@[IoU=75]": 0.004394394864889173,

These are very low scores, and I am trying to understand why the accuracy reported by EdgeImpulse (see the screenshot above) is 99.62% while the MaP values at IoU 50 and IoU 75 listed above are so low. The model does seem to detect the objects in the test images correctly, so I am struggling to understand why those other scores are so low.
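To make the IoU thresholds concrete, here is a minimal sketch of how intersection over union behaves for a small box. The 20×20 pixel box and the pixel shifts are hypothetical values chosen for illustration, not taken from this project. It only shows the general effect that, for small objects, a prediction that is off by a few pixels can fall below the 0.5 or 0.75 threshold even though it visually looks like a correct detection.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlapping region (zero if the boxes do not overlap).
    overlap_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    overlap_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = overlap_w * overlap_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# Hypothetical 20x20 px ground-truth box, with predictions shifted diagonally.
truth = (0, 0, 20, 20)
for shift in (2, 5):
    predicted = (shift, shift, 20 + shift, 20 + shift)
    score = iou(truth, predicted)
    print(f"shift={shift}px IoU={score:.3f} "
          f"passes@50={score >= 0.5} passes@75={score >= 0.75}")
# shift=2px IoU=0.681 passes@50=True passes@75=False
# shift=5px IoU=0.391 passes@50=False passes@75=False
```
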

Is there a guide on how to interpret the results given by EdgeImpulse?

I can also see that "coco_map" (I assume this stands for COCO mean average precision) is quite high, at about 0.937. Can anyone explain what it measures and why the other scores are so much lower?

Project ID:

388357

Context/Use case:

Interpretation of results