Question/Issue:

I want to evaluate my int8 model's performance on the test set. However, when I go to the "Model Testing" section and click "Classify all", I see "Classifying data for float32 model…". Is there a way to evaluate the int8 model for different target boards?

Project ID:

123665

Context/Use case:


Hi @chinya07

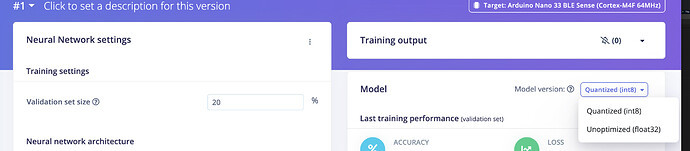

For this case I believe you will need to set it in the NN Classifier step, and rerun the training:

Best

Eoin

From what I have experienced the Testing page always uses float32.

Also a PR was submitted last year


You can download the int8 TensorFlow Lite model and run the inference locally. Check "TensorFlow Lite (TFLite) Python Inference Example with Quantization" from @shawn_edgeimpulse and "Post-training quantization - Representation for quantized tensors".
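As a rough illustration of the local approach, here is a minimal sketch using the standard `tf.lite.Interpreter` API. The helper names (`quantize`, `dequantize`, `classify_int8`) and the model path are made up for this example, and it assumes a single-input, single-output model; it is not the code from the linked example. The input is quantized and the output dequantized using the scale and zero point stored in the model, following `real = (q - zero_point) * scale`.

```python
import numpy as np


def quantize(x, scale, zero_point, dtype=np.int8):
    """Map float values to integers: q = round(x / scale) + zero_point."""
    info = np.iinfo(dtype)
    q = np.round(np.asarray(x, dtype=np.float32) / scale) + zero_point
    return np.clip(q, info.min, info.max).astype(dtype)


def dequantize(q, scale, zero_point):
    """Map integers back to floats: x = (q - zero_point) * scale."""
    return (np.asarray(q).astype(np.float32) - zero_point) * scale


def classify_int8(model_path, features):
    """Run one sample through a quantized .tflite model, return float scores.

    Hypothetical helper: assumes one input tensor and one output tensor.
    """
    import tensorflow as tf  # imported here so the helpers above work without it

    interpreter = tf.lite.Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]

    # Quantize the float features if the model expects integer input.
    in_scale, in_zero = inp["quantization"]
    x = np.asarray(features, dtype=np.float32).reshape(inp["shape"])
    if inp["dtype"] in (np.int8, np.uint8):
        x = quantize(x, in_scale, in_zero, inp["dtype"])
    interpreter.set_tensor(inp["index"], x)
    interpreter.invoke()

    # Convert integer output back to float scores.
    y = interpreter.get_tensor(out["index"])
    out_scale, out_zero = out["quantization"]
    if out["dtype"] in (np.int8, np.uint8):
        y = dequantize(y, out_scale, out_zero)
    return y[0]
```

You would call `classify_int8("model_int8.tflite", sample)` for each test sample (the file name is a placeholder) and compare the highest-scoring class against the expected label to get the int8 accuracy.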

If you want to compare performance across devices, I think the only correct way is to compile the model and run the inference on each board separately, then do some statistical analysis to compare the results between the devices.
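For the comparison step, something like the sketch below could be a starting point once you have collected the predicted labels from each board. The board names and labels are invented for illustration, and accuracy plus pairwise agreement is only one simple choice of statistic.

```python
def accuracy(predicted, expected):
    """Fraction of samples where the predicted label matches the expected one."""
    if not expected or len(predicted) != len(expected):
        raise ValueError("need two non-empty label lists of equal length")
    return sum(p == e for p, e in zip(predicted, expected)) / len(expected)


def agreement(labels_a, labels_b):
    """Fraction of samples where two boards predicted the same label."""
    return accuracy(labels_a, labels_b)


# Hypothetical data: expected labels and per-board predictions.
expected = ["idle", "wave", "idle", "wave"]
results = {
    "board_a": ["idle", "wave", "idle", "idle"],
    "board_b": ["idle", "wave", "wave", "idle"],
}

for board, predicted in results.items():
    print(board, accuracy(predicted, expected))
print("agreement", agreement(results["board_a"], results["board_b"]))
```

Agreement between boards is worth checking alongside accuracy, since two devices can reach a similar accuracy while disagreeing on different samples.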
