@louis @janjongboom & all others, Thanks a lot for this entire thread. I had the same issues and resolved almost everything with your help.

@gransasso Yeah we have some ideas on fixing quantization error on smaller image models (such as using a different dataset to find quantization parameters, and quantization-aware training) that @dansitu is working on.

First, @Louis, thanks for the work you shared for the ESP32 cam!

I’m a teacher and rather new to ML, but I hope to bring ML to my classes.

The ESP32 cam would be a great tool as our budget is rather small and our students are only familiar with the Arduino environment.

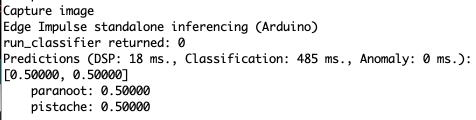

To get familiar with it, I trained a model for classifying nuts as pistachio nuts or as brazil nuts and tried to keep it as small as possible. When I test the model in EI, it gives normal results, but when I run inference on the ESP32 cam, I always get the same result: 0.5000 vs 0.5000

I tried to run the model with both the basic and the advanced image classification example. I tried it on another ESP32 cam, but always with the same result.

Any idea what’s going wrong? Thanks a lot!

Hello @Bewegendbeeld,

Could you try to add an “unknown” class to see if it changes something?

Also I would try the MobileNet v1 with a larger image size (maybe 96x96). You might obtain better results with this model architecture.

Regards,

Louis

Thanks, @louis!

I tried with a larger image size, but this gives this error:

ERR: failed to allocate tensor arena

Failed to allocate TFLite arena (error code 1)

Maybe because of the limited memory of the ESP32?

So I went back to image size 48x48.
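For a rough sense of scale, the raw image buffers alone grow fourfold when going from 48x48 to 96x96. The sketch below is only that arithmetic: `image_bytes` is a hypothetical helper, and it does not compute the TFLite tensor arena, whose size depends on the model architecture and is not derived here.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: bytes needed for a tightly packed image buffer.
// bytes_per_value is 1 for RGB888 channel bytes, 4 for float features.
std::size_t image_bytes(std::size_t width, std::size_t height,
                        std::size_t channels, std::size_t bytes_per_value) {
    assert(width > 0 && height > 0 && channels > 0 && bytes_per_value > 0);
    return width * height * channels * bytes_per_value;
}
```

With this helper, `image_bytes(48, 48, 3, 1)` is 6912 bytes and `image_bytes(96, 96, 3, 1)` is 27648 bytes for an RGB888 frame. The model's intermediate tensors scale with input size as well, so the arena requirement rises on top of these buffers.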

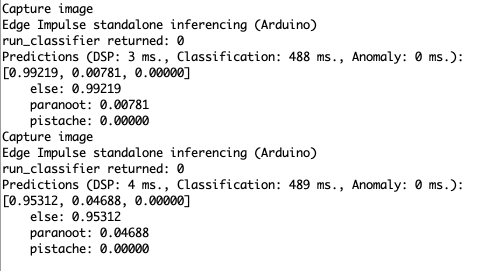

I also added an ‘else’ class with some random objects and trained again.

When doing live classification on Edge Impulse, everything seems to work as expected, but when I run inference on the ESP32 cam with the basic example, I get different results. Pistache remains 0.00000, and every image is classified as ‘else’.

When I try the advanced example, I get 100% else and the other categories remain 0.0000.

Any idea what’s going wrong?

Thanks a lot!

Hello @Bewegendbeeld,

“ERR: failed to allocate tensor arena” followed by “Failed to allocate TFLite arena (error code 1)” is indeed a memory issue.

Were you trying with larger images using the MobileNet v1 or v2?

v2 with 96x96 is definitely too big but on v1 it should fit.

Depending on which camera / ESP version you are using, some boards let you set the image size directly with config.frame_size = FRAMESIZE_96X96;

In this case you won’t need to resize your image with the image_resize_linear(ei_buf, out_buf, EI_CLASSIFIER_INPUT_WIDTH, EI_CLASSIFIER_INPUT_HEIGHT, 3, out_width, out_height); call. I think the issue comes from this function in the advanced example and from cutout_get_data in the basic example.

Regards,

Louis
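To illustrate what the resize step does when the camera cannot deliver the model's input size directly, here is a minimal nearest-neighbour sketch. It is a stand-in written for illustration, not the image_resize_linear function from the example, and `resize_rgb888_nearest` and its parameters are hypothetical names. It assumes a tightly packed buffer with 3 bytes per pixel.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of the ESP32 example code):
// nearest-neighbour resize of a tightly packed 3-bytes-per-pixel buffer.
std::vector<std::uint8_t> resize_rgb888_nearest(
        const std::vector<std::uint8_t>& src,
        std::size_t src_w, std::size_t src_h,
        std::size_t dst_w, std::size_t dst_h) {
    assert(src.size() == src_w * src_h * 3);
    assert(dst_w > 0 && dst_h > 0);

    std::vector<std::uint8_t> dst(dst_w * dst_h * 3);
    for (std::size_t y = 0; y < dst_h; ++y) {
        const std::size_t sy = y * src_h / dst_h;      // source row to sample
        for (std::size_t x = 0; x < dst_w; ++x) {
            const std::size_t sx = x * src_w / dst_w;  // source column to sample
            for (std::size_t c = 0; c < 3; ++c) {
                dst[(y * dst_w + x) * 3 + c] = src[(sy * src_w + sx) * 3 + c];
            }
        }
    }
    return dst;
}
```

The point to take away is that the resize writes into a separate output buffer. Whatever code runs afterwards (classifier input, JPEG encoding) has to read from that output buffer, or from the camera frame itself when no resize is needed.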

Thanks, @louis!

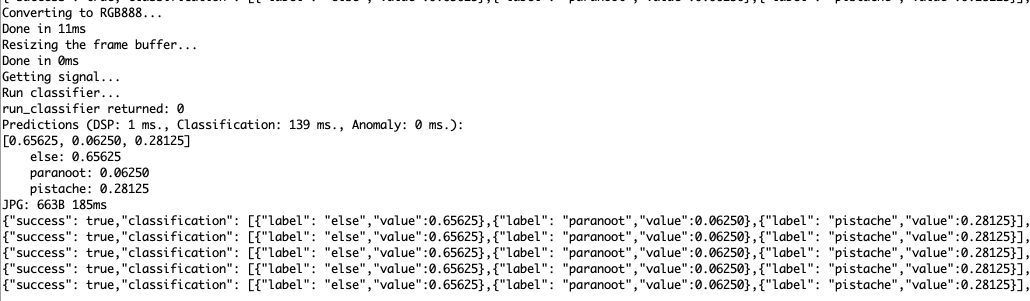

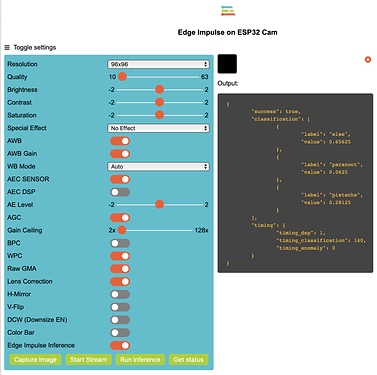

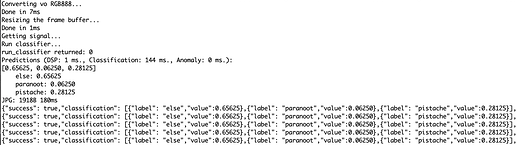

Indeed, I was trying with MobileNetV2. When I change to V1, I’m able to use 96X96. After this I tried again with the new Arduino library.

In the advanced example, I changed the lines with FRAMESIZE_240X240 to FRAMESIZE_96X96

And I removed:

image_resize_linear(ei_buf, out_buf, EI_CLASSIFIER_INPUT_WIDTH, EI_CLASSIFIER_INPUT_HEIGHT, 3, out_width, out_height);

But even then I always get the same classification.

I noticed the output image after running inference remains black, which isn’t the case when streaming.

Any idea where something goes wrong?

Hello @Bewegendbeeld,

Could you try replacing ei_buf with out_buf in the raw_feature_get_data function to see if it works? I do not have an ESP32 CAM with me at the moment, so I cannot test.

int raw_feature_get_data(size_t offset, size_t length, float *signal_ptr)

{

memcpy(signal_ptr, ei_buf + offset, length * sizeof(float));

return 0;

}

And if you want the output image, you’ll also need to replace ei_buf with out_buf here:

s = fmt2jpg_cb(ei_buf, ei_len, EI_CLASSIFIER_INPUT_WIDTH, EI_CLASSIFIER_INPUT_HEIGHT, PIXFORMAT_RGB888, 90, jpg_encode_stream, &jchunk);

From what I see in the logs, it seems that the request’s response is displayed more than once. I’ll have a deeper look at the code when I have access to an ESP32 CAM.

Regards,

Louis
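For readers unsure what the raw_feature_get_data callback has to deliver: as far as I know, Edge Impulse image models take one float per pixel, holding the packed value 0xRRGGBB. The sketch below shows that packing from a 3-bytes-per-pixel buffer. The names `g_rgb888`, `g_pixel_count` and `rgb888_get_data` are hypothetical, and the R, G, B byte order is an assumption; check which channel order your camera conversion actually produces.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical globals standing in for the frame the classifier should read.
static const std::uint8_t* g_rgb888 = nullptr;  // 3 bytes per pixel, assumed R,G,B
static std::size_t g_pixel_count = 0;

// Callback with the same shape as raw_feature_get_data:
// fills out_ptr with `length` features, starting at pixel `offset`.
int rgb888_get_data(std::size_t offset, std::size_t length, float* out_ptr) {
    assert(g_rgb888 != nullptr);
    assert(offset + length <= g_pixel_count);
    for (std::size_t i = 0; i < length; ++i) {
        const std::uint8_t* px = g_rgb888 + (offset + i) * 3;
        // Pack the three channel bytes into one 24-bit value (0xRRGGBB).
        out_ptr[i] = static_cast<float>((px[0] << 16) | (px[1] << 8) | px[2]);
    }
    return 0;
}
```

If the buffer behind the callback is never written, every feature is 0 and the model sees a black image on each run, which would match both the black output image and the identical classification results.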

Actually, you could just replace

ei_buf = ei_matrix->item;

by

ei_buf = image_matrix->item;

It is a bit messy. I’ll clean up the code so it only shows the functions used by Edge Impulse, instead of keeping the code from the base project as described in the resources.

This only works when the frame size is set to FRAMESIZE_96X96.

Hi @louis, thanks for all your suggestions.

I tried everything from your answers, but still get the same result every time.

I hoped to find a very basic Arduino-based workflow to bring ML to our STEM classes, but maybe at this moment the ESP32 cam is not the way to go for us…

But I just read on the EI blog that the TinyML kit is supported now.

I think I’ll try to build a new model and see if this combination works better for us.

Regards,

Patrick

hey guys,

I’ve been tinkering with the EI platform for quite a while now and I have to say: great, great product. No better way to learn and experiment!

However, I’ve been trying to build a small network that distinguishes between only two states based on a camera image, to determine whether a parking spot is occupied or unoccupied. So far so good. I recently discovered WAY better training results after I switched from transfer learning to classification, but I can’t get it to work on the ESP32CAM board (not enough memory: “failed to allocate TFLite arena…”). And now I’m having a hard time finding answers to my questions:

- Is it even possible in general to get the classification version to work on the ESP32CAM? After training, I get no information about predicted flash or RAM usage (it only says “retrain to eval”, but that doesn’t change anything).

- Is there a way to bring down the size of the library? How would I do that?

- Is it maybe a bad idea in general to rely on classification instead of transfer learning?

Any help or comments are appreciated - thank you

Hello @vitruvo,

Could you share your project ID or your project name please so I can have a deeper look? I see that you have several on your account

Regards,

Louis

Hey @louis,

thanks for looking into this!

This was actually about the “vitruvo-project-1” project. I created two others in the meantime where the performance prediction is displayed again…

Hi @louis, thank you for the documentation for the ESP32. I tried the code you shared in the official Edge Impulse repository, but I am receiving the error given below. Can you help me? I don’t know why I am receiving this error!

{"success": false,"timing": {"timing_dsp":0,"timing_classification":0,"timing_anomaly":0}}

Hi @suhailjr,

It could be that your model is too large for the target. The code was tested with 48x48 images and uses MobileNetV2 0.05.

Could you enable the debug flag in the run_classifier function? (change false to true)

EI_IMPULSE_ERROR res = run_classifier(&signal, &result, true /* debug */);

And then open a serial terminal to check if any error is being printed out.

I confirmed it’s using 48x48. This is the error I am getting in the serial monitor!

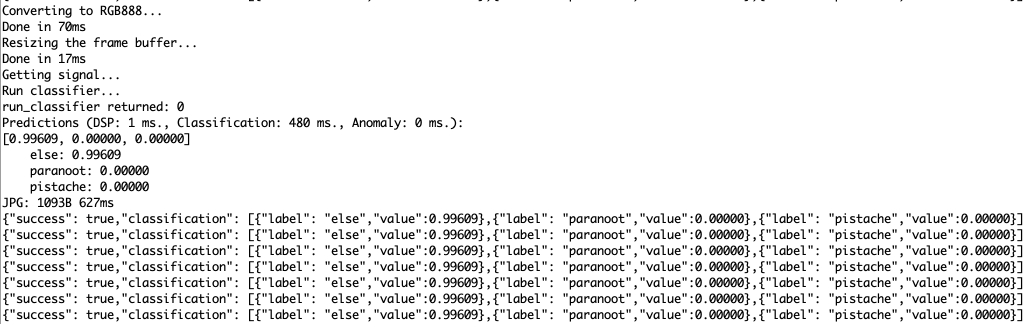

Converting to RGB888...

Done in 162ms

Resizing the frame buffer...

Done in 20ms

Getting signal...

Run classifier...

ERR: failed to allocate tensor arena

Failed to allocate TFLite arena (error code 1)

run_classifier returned: -6

JPG: 1872B 241ms

{"success": false,"timing": {"timing_dsp":0,"timing_classification":0,"timing_anomaly":0}}

{"success": false,"timing": {"timing_dsp":0,"timing_classification":0,"timing_anomaly":0}}

This is a memory issue. Could you try retraining your model with a 96x96 image and choosing a MobileNetV1 (they have a smaller footprint)?

Regards,

Louis

I can see on your account that you have two projects.

One named test and another one called test2.

Your test2 model is too big (96x96 images with MobileNetv2 0.05) but your test project should be fine I think. Can you make sure you are using the right project?

Also can you make sure you selected the quantized version (int8) of the model on the deployment tab please?

Regards,

Louis
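As background on why the quantized (int8) version matters for memory: an int8 value takes one byte where a float32 takes four, at the cost of a small rounding error. Below is a minimal sketch of the usual affine scheme, q = round(x / scale) + zero_point, clamped to the int8 range. The function names are hypothetical, and any scale and zero_point values you try are illustrative; the real ones are chosen per tensor when the model is converted.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper: map a float to int8 with an affine scale/zero point.
std::int8_t quantize_int8(float x, float scale, int zero_point) {
    assert(scale > 0.0f);
    const long q = std::lround(x / scale) + zero_point;
    // Clamp to the representable int8 range before narrowing.
    return static_cast<std::int8_t>(std::max<long>(-128, std::min<long>(127, q)));
}

// Hypothetical helper: recover the approximate float value.
float dequantize_int8(std::int8_t q, float scale, int zero_point) {
    return scale * static_cast<float>(static_cast<int>(q) - zero_point);
}
```

For example, with scale 0.5 and zero point 0, the value 1.3 is stored as 3 and read back as 1.5. That rounding is the quantization error mentioned at the top of the thread, and on very small models it can be large enough to change the predictions.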