#include "esp_camera.h"

#include <WiFi.h>

#include "soc/soc.h"

#include "soc/rtc_cntl_reg.h"

#include "img_converters.h"

#include <HTTPClient.h>

#include "image_util.h"

#include <edge7-project-1_inferencing.h>

//

// WARNING!!! Make sure that you have either selected ESP32 Wrover Module,

// or another board which has PSRAM enabled

// This edited sketch doesn't use a gzipped HTML source.

// The HTML is kept as plain text in the other tab (app_httpd.cpp), so it is easy to update or modify.

// Select camera model

//#define CAMERA_MODEL_WROVER_KIT

//#define CAMERA_MODEL_M5STACK_PSRAM

#define CAMERA_MODEL_AI_THINKER

#include "camera_pins.h"

const char* ssid = "FASTWEB-952LR7";

const char* password = "LXUUATZ6CL";

int channels = 1;

uint8_t *out_buf;

uint8_t *ei_buf;

static int8_t ei_activate = 0;

ei_impulse_result_t result = {0};

typedef struct

{

size_t size; //number of values used for filtering

size_t index; //current value index

size_t count; //value count

int sum;

int *values; //array to be filled with values

} ra_filter_t;

void setup() {

//WRITE_PERI_REG(RTC_CNTL_BROWN_OUT_REG, 0); //disable brownout detector

Serial.begin(115200);

Serial.setDebugOutput(true);

Serial.println();

camera_config_t config;

config.ledc_channel = LEDC_CHANNEL_0;

config.ledc_timer = LEDC_TIMER_0;

config.pin_d0 = Y2_GPIO_NUM;

config.pin_d1 = Y3_GPIO_NUM;

config.pin_d2 = Y4_GPIO_NUM;

config.pin_d3 = Y5_GPIO_NUM;

config.pin_d4 = Y6_GPIO_NUM;

config.pin_d5 = Y7_GPIO_NUM;

config.pin_d6 = Y8_GPIO_NUM;

config.pin_d7 = Y9_GPIO_NUM;

config.pin_xclk = XCLK_GPIO_NUM;

config.pin_pclk = PCLK_GPIO_NUM;

config.pin_vsync = VSYNC_GPIO_NUM;

config.pin_href = HREF_GPIO_NUM;

config.pin_sscb_sda = SIOD_GPIO_NUM;

config.pin_sscb_scl = SIOC_GPIO_NUM;

config.pin_pwdn = PWDN_GPIO_NUM;

config.pin_reset = RESET_GPIO_NUM;

config.xclk_freq_hz = 20000000;

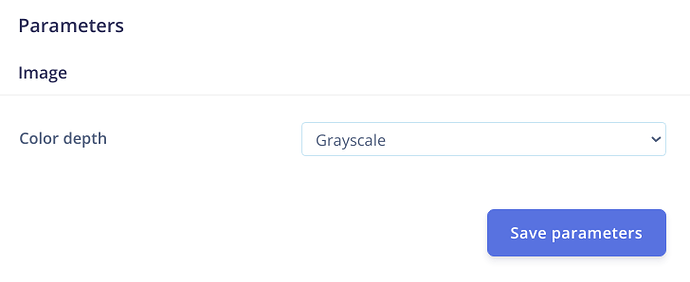

config.pixel_format = PIXFORMAT_GRAYSCALE;

//init with high specs to pre-allocate larger buffers

if(psramFound()){

config.frame_size = FRAMESIZE_240X240;

config.jpeg_quality = 10;

config.fb_count = 2;

} else {

config.frame_size = FRAMESIZE_240X240;

config.jpeg_quality = 12;

config.fb_count = 1;

}

// camera init

esp_err_t err = esp_camera_init(&config);

if (err != ESP_OK) {

Serial.printf("Camera init failed with error 0x%x", err);

return;

}

// keep the sensor at 240x240, matching the frame size configured above

sensor_t * s = esp_camera_sensor_get();

s->set_framesize(s, FRAMESIZE_240X240);

WiFi.begin(ssid, password);

while (WiFi.status() != WL_CONNECTED) {

delay(500);

Serial.print(".");

}

Serial.println("");

Serial.println("WiFi connected");

//startCameraServer();

Serial.print("Camera Ready! Use 'http://");

Serial.print(WiFi.localIP());

Serial.println("' to connect, the stream is on a different port channel 9601 ");

Serial.print("stream Ready! Use 'http://");

Serial.print(WiFi.localIP());

Serial.println(":9601/stream ");

Serial.print("image Ready! Use 'http://");

Serial.print(WiFi.localIP());

Serial.println("/capture ");

}

void inference_handler()

{

camera_fb_t *fb = NULL;

int64_t fr_start = esp_timer_get_time();

size_t out_len, out_width, out_height;

size_t ei_len;

fb = esp_camera_fb_get();

if (!fb)

{

Serial.println("Camera capture failed");

return;

}

bool s;

bool detected = false;

dl_matrix3du_t *image_matrix = dl_matrix3du_alloc(1, fb->width, fb->height, channels);

if (!image_matrix)

{

esp_camera_fb_return(fb);

Serial.println("dl_matrix3du_alloc failed");

return ;

}

out_buf = image_matrix->item;

out_len = fb->width * fb->height * channels;

out_width = fb->width;

out_height = fb->height;

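Serial.println("Copying grayscale frame...");

int64_t time_start = esp_timer_get_time();

// The camera already delivers 8-bit grayscale (PIXFORMAT_GRAYSCALE), so no RGB888 conversion is needed.

// Copy the pixels into the matrix before the frame buffer is handed back to the driver.

memcpy(out_buf, fb->buf, out_len);

int64_t time_end = esp_timer_get_time();

Serial.printf("Done in %ums\n", (uint32_t)((time_end - time_start) / 1000));

esp_camera_fb_return(fb);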

/*if (!s)

{

dl_matrix3du_free(image_matrix);

Serial.println("to rgb888 failed");

return ;

}*/

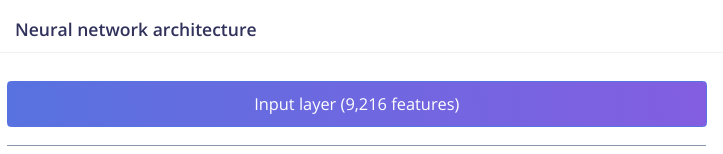

dl_matrix3du_t *ei_matrix = dl_matrix3du_alloc(1, EI_CLASSIFIER_INPUT_WIDTH, EI_CLASSIFIER_INPUT_HEIGHT, channels);

if (!ei_matrix)

{

// fb has already been returned above; release the source matrix instead

dl_matrix3du_free(image_matrix);

Serial.println("dl_matrix3du_alloc failed");

return ;

}

ei_buf = ei_matrix->item;

ei_len = EI_CLASSIFIER_INPUT_WIDTH * EI_CLASSIFIER_INPUT_HEIGHT * channels;

Serial.println("Resizing the frame buffer...");

time_start = esp_timer_get_time();

image_resize_linear(ei_buf, out_buf, EI_CLASSIFIER_INPUT_WIDTH, EI_CLASSIFIER_INPUT_HEIGHT, channels, out_width, out_height);

time_end = esp_timer_get_time();

Serial.printf("Done in %ums\n", (uint32_t)((time_end - time_start) / 1000));

dl_matrix3du_free(image_matrix);

classify();

dl_matrix3du_free(ei_matrix);

int64_t fr_end = esp_timer_get_time();

//Serial.printf("JPG: %uB %ums\n", (uint32_t)(jchunk.len), (uint32_t)((fr_end - fr_start) / 1000));

return;

}

int raw_feature_get_data(size_t offset, size_t length, float *signal_ptr)

{

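// ei_buf holds 8-bit pixels, so it cannot be memcpy'd as floats; convert one pixel per feature.

// Assumption: the Edge Impulse image model reads each feature as a packed 0xRRGGBB value.

for (size_t i = 0; i < length; i++) {

uint32_t g = ei_buf[offset + i];

signal_ptr[i] = (float)((g << 16) | (g << 8) | g);

}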

return 0;

}

void classify()

{

Serial.println("Getting signal...");

// Describe the resized frame as a signal; the classifier pulls its features through raw_feature_get_data()

signal_t signal;

signal.total_length = EI_CLASSIFIER_INPUT_WIDTH * EI_CLASSIFIER_INPUT_HEIGHT;

signal.get_data = &raw_feature_get_data;

Serial.println("Run classifier...");

// Feed signal to the classifier

EI_IMPULSE_ERROR res = run_classifier(&signal, &result, false /* debug */);

// The error code is returned in "res"; the predictions are stored in "result"

ei_printf("run_classifier returned: %d\n", res);

if (res != 0)

return;

// print the predictions

ei_printf("Predictions ");

ei_printf("(DSP: %d ms., Classification: %d ms., Anomaly: %d ms.)", result.timing.dsp, result.timing.classification, result.timing.anomaly);

ei_printf(": \n");

// Print short form result data

ei_printf("[");

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++)

{

ei_printf("%.5f", result.classification[ix].value);

#if EI_CLASSIFIER_HAS_ANOMALY == 1

ei_printf(", ");

#else

if (ix != EI_CLASSIFIER_LABEL_COUNT - 1)

{

ei_printf(", ");

}

#endif

}

#if EI_CLASSIFIER_HAS_ANOMALY == 1

ei_printf("%.3f", result.anomaly);

#endif

ei_printf("]\n");

double max_class = -1;

String value = "";

// human-readable predictions

for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++)

{

ei_printf(" %s: %.5f\n", result.classification[ix].label, result.classification[ix].value);

if (result.classification[ix].value > max_class){

value = result.classification[ix].label;

max_class = result.classification[ix].value;

}

}

#if EI_CLASSIFIER_HAS_ANOMALY == 1

ei_printf(" anomaly score: %.3f\n", result.anomaly);

#endif

Serial.println(value);

Serial.println(max_class);

HTTPClient http;

http.begin("http://192.168.1.56:8080/api/data/recognise");

http.addHeader("Content-Type", "application/json");

String jsonToSend = String("{\"recognised\":\"_TMP1_\",\"prob\":_TMP_}");

jsonToSend.replace("_TMP1_", value);

jsonToSend.replace("_TMP_", String(max_class));

Serial.println((jsonToSend));

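int httpCode = http.POST(jsonToSend);

Serial.printf("HTTP response code: %d\n", httpCode);

http.end(); // release the connection so repeated calls from loop() do not leak resources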

//free(ei_buf);

}

void loop() {

// put your main code here, to run repeatedly:

inference_handler();

delay(10000);

}
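
The `raw_feature_get_data` callback is where camera pixels become classifier features. The sketch below shows one way to do that conversion for 8-bit grayscale data, on the assumption that the Edge Impulse image model reads each feature as a packed `0xRRGGBB` value stored in a float. The helper names `pack_gray_pixel` and `gray_to_features` are hypothetical and are not part of the Edge Impulse SDK; the code uses only standard C++ so it can be checked off-device.

```cpp
#include <cstddef>
#include <cstdint>

// Pack one 8-bit grayscale value into the 0xRRGGBB layout by repeating it
// in all three colour channels, then store the result as a float.
// Assumption: this is the feature layout the image model expects.
static inline float pack_gray_pixel(uint8_t g) {
    const uint32_t v = static_cast<uint32_t>(g);
    return static_cast<float>((v << 16) | (v << 8) | v);
}

// Hypothetical helper with the same shape as a signal get_data callback:
// writes `length` features to `out`, starting at pixel index `offset`.
// Returns 0 on success, mirroring the callback convention used in the sketch.
int gray_to_features(const uint8_t *pixels, size_t offset, size_t length, float *out) {
    for (size_t i = 0; i < length; i++) {
        out[i] = pack_gray_pixel(pixels[offset + i]);
    }
    return 0;
}
```

Inside the sketch, `raw_feature_get_data` could forward to this helper with `return gray_to_features(ei_buf, offset, length, signal_ptr);`. Every packed value fits in 24 bits, so it is represented exactly by a 32-bit float.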