Hello,

We are trying to deploy the example model from the tutorial linked in the GitHub README (https://www.survivingwithandroid.com/tinyml-esp32-cam-edge-image-classification-with-edge-impulse/) on our ESP-EYE device.

When using the most basic model (MobileNetV2 96x96 0.05) in Edge Impulse, the deployment works, but the model is not accurate. Every other model fails with the following errors:

When deploying the model with the default partition scheme, we get the following error:

WiFi connected

Starting web server on port: ‘80’

Starting stream server on port: ‘81’

Camera Ready! Use http:// 192.168.1.158 to connect

Capture image

Edge Impulse standalone inferencing (Arduino)

ERR: Failed to run DSP process (-1002)

run_classifier returned: -5

When deploying the model in the Arduino IDE using the “Huge APP” partition scheme, we get the following error:

WiFi connected

Starting web server on port: ‘80’

Starting stream server on port: ‘81’

Camera Ready! Use ‘http:// 192.168.1.158’ to connect

Capture image

Edge Impulse standalone inferencing (Arduino)

ERR: failed to allocate tensor arena

Failed to allocate TFLite arena (error code 1)

run_classifier returned: -6

The ESP-EYE has 4MB of memory available.

According to the Arduino IDE, the code itself takes ~1.2MB of memory.

According to the Edge Impulse website, none of the models needs more than 1MB of additional memory. However, memory still seems to be the issue here.

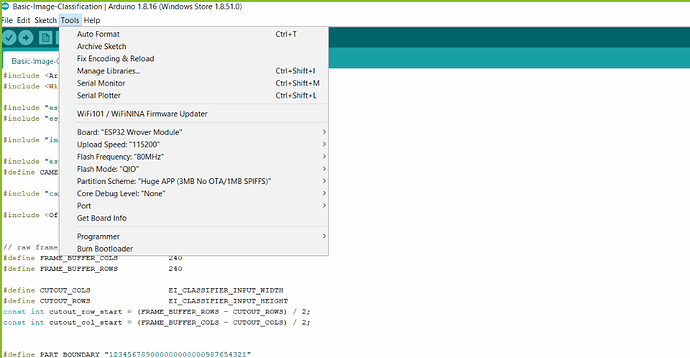
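Since "failed to allocate tensor arena" is a RAM allocation failure rather than a flash-size problem, a diagnostic along the following lines might help narrow things down. This is only a rough sketch: `placeArena` and `printMemoryReport` are hypothetical helpers of ours (not part of the Edge Impulse SDK), the `arenaBytes` value is assumed to be taken from the RAM figure Edge Impulse reports for the model, and the ESP32 part assumes the arduino-esp32 core (`ESP.getSketchSize()`, `ESP.getFreeHeap()`, `psramFound()`) and the ESP-IDF call `heap_caps_get_largest_free_block()`. It reports where a contiguous block of that size could fit; whether the SDK actually allocates its arena from PSRAM is a separate question this sketch does not answer.

```cpp
#include <cstddef>

// Where a contiguous arena of the requested size could be placed.
enum class ArenaPlacement { InternalRam, Psram, DoesNotFit };

// Hypothetical helper: the arena is a single allocation, so the largest free
// contiguous block matters, not the total amount of free memory.
ArenaPlacement placeArena(std::size_t arenaBytes,
                          std::size_t largestInternalBlock,
                          std::size_t largestPsramBlock) {
    if (arenaBytes <= largestInternalBlock) return ArenaPlacement::InternalRam;
    if (arenaBytes <= largestPsramBlock) return ArenaPlacement::Psram;
    return ArenaPlacement::DoesNotFit;
}

#ifdef ARDUINO
#include <Arduino.h>
#include "esp_heap_caps.h"

// Hypothetical helper: prints flash and RAM figures over Serial.
// arenaBytes is an assumption supplied by the caller.
void printMemoryReport(std::size_t arenaBytes) {
    const std::size_t internalBlock =
        heap_caps_get_largest_free_block(MALLOC_CAP_INTERNAL | MALLOC_CAP_8BIT);
    // Only query PSRAM if the core detected it at boot.
    const std::size_t psramBlock = psramFound()
        ? heap_caps_get_largest_free_block(MALLOC_CAP_SPIRAM | MALLOC_CAP_8BIT)
        : 0;

    Serial.printf("Sketch size (flash):    %lu bytes\n", (unsigned long)ESP.getSketchSize());
    Serial.printf("Free heap (total):      %lu bytes\n", (unsigned long)ESP.getFreeHeap());
    Serial.printf("Largest internal block: %lu bytes\n", (unsigned long)internalBlock);
    Serial.printf("Largest PSRAM block:    %lu bytes\n", (unsigned long)psramBlock);

    switch (placeArena(arenaBytes, internalBlock, psramBlock)) {
        case ArenaPlacement::InternalRam:
            Serial.println("Arena would fit in internal RAM");
            break;
        case ArenaPlacement::Psram:
            Serial.println("Arena would only fit in PSRAM");
            break;
        case ArenaPlacement::DoesNotFit:
            Serial.println("Arena does not fit in any free block");
            break;
    }
}
#endif
```

Calling `printMemoryReport(...)` right before `run_classifier` should show the state of memory after the camera and web server have already taken their share.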

Here is a screenshot of our board settings in the Arduino IDE:

Can you please advise on how we can make the more complex models work on our device?

Thank you!