Hi @Eoin,

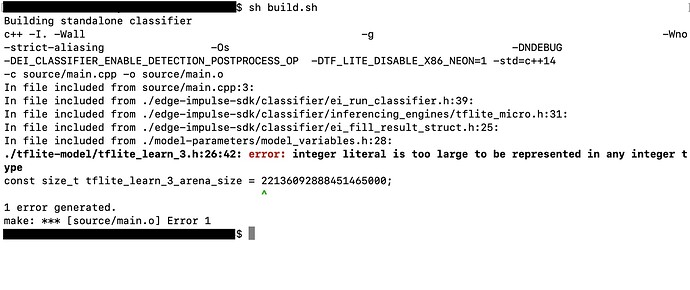

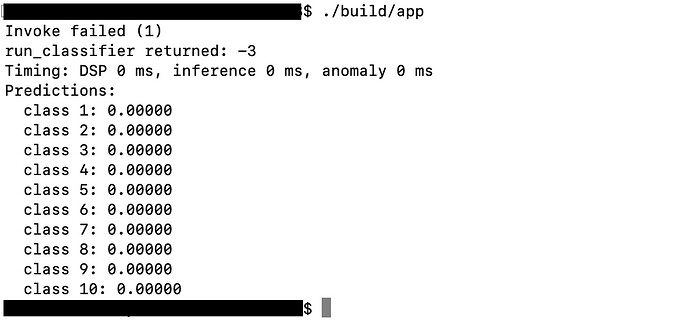

I deleted the old project and created a new one, but the issue remains. The screenshots above show how the error appears.

Note that when I do the same (BYOM and deploy as a C++ library) with a smaller model (90 KB), the error does not appear and the standalone executable runs as expected.

Below is the code in main.cpp, where I pasted my features:

#include <stdio.h>

#include "edge-impulse-sdk/classifier/ei_run_classifier.h"

// Callback function declaration
static int get_signal_data(size_t offset, size_t length, float *out_ptr);

// Raw features copied from test sample
static const float features[] = {
    0.1055, 0.1172, 0.1367, 0.2539, … (4096 features in total)
};

int main(int argc, char **argv) {

    signal_t signal;            // Wrapper for raw input buffer
    ei_impulse_result_t result; // Used to store inference output
    EI_IMPULSE_ERROR res;       // Return code from inference

    // Calculate the length of the buffer
    size_t buf_len = sizeof(features) / sizeof(features[0]);

    // Make sure that the length of the buffer matches expected input length
    if (buf_len != EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE) {
        ei_printf("ERROR: The size of the input buffer is not correct.\r\n");
        ei_printf("Expected %d items, but got %d\r\n",
                EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE,
                (int)buf_len);
        return 1;
    }

    // Assign callback function to fill buffer used for preprocessing/inference
    signal.total_length = EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE;
    signal.get_data = &get_signal_data;

    // Perform DSP pre-processing and inference
    res = run_classifier(&signal, &result, false);

    // Print return code and how long it took to perform inference
    ei_printf("run_classifier returned: %d\r\n", res);
    ei_printf("Timing: DSP %d ms, inference %d ms, anomaly %d ms\r\n",
            result.timing.dsp,
            result.timing.classification,
            result.timing.anomaly);

    // Print the prediction results (object detection)
#if EI_CLASSIFIER_OBJECT_DETECTION == 1
    ei_printf("Object detection bounding boxes:\r\n");
    for (uint32_t i = 0; i < result.bounding_boxes_count; i++) {
        ei_impulse_result_bounding_box_t bb = result.bounding_boxes[i];
        if (bb.value == 0) {
            continue;
        }
        ei_printf(" %s (%f) [ x: %u, y: %u, width: %u, height: %u ]\r\n",
                bb.label,
                bb.value,
                bb.x,
                bb.y,
                bb.width,
                bb.height);
    }

    // Print the prediction results (classification)
#else
    ei_printf("Predictions:\r\n");
    for (uint16_t i = 0; i < EI_CLASSIFIER_LABEL_COUNT; i++) {
        ei_printf(" %s: ", ei_classifier_inferencing_categories[i]);
        ei_printf("%.5f\r\n", result.classification[i].value);
    }
#endif

    // Print anomaly result (if it exists)
#if EI_CLASSIFIER_HAS_ANOMALY == 1
    ei_printf("Anomaly prediction: %.3f\r\n", result.anomaly);
#endif

    return 0;
}

// Callback: fill a section of the out_ptr buffer when requested
static int get_signal_data(size_t offset, size_t length, float *out_ptr) {
    for (size_t i = 0; i < length; i++) {
        out_ptr[i] = (features + offset)[i];
    }

    return EIDSP_OK;
}
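
The callback above copies whatever range it is asked for without checking it against the size of the features array. As a sanity check, here is a minimal standalone sketch of the same callback shape with a range check added. It does not use the Edge Impulse SDK, and the names `demo_features` and `get_demo_signal_data`, the four-value array, and the 0 / -1 return codes are all made up for illustration. I am not claiming this is the cause of the error.

```cpp
#include <cstddef>
#include <cstring>

// Hypothetical stand-in for the pasted feature array (the real one has 4096 values).
static const float demo_features[] = {0.1055f, 0.1172f, 0.1367f, 0.2539f};
static const std::size_t demo_features_len =
    sizeof(demo_features) / sizeof(demo_features[0]);

// Same signature as get_signal_data(), but rejects requests that would read
// past the end of the array instead of copying blindly.
// Returns 0 on success and -1 on a bad request (illustrative codes, not SDK constants).
static int get_demo_signal_data(std::size_t offset, std::size_t length, float *out_ptr) {
    if (out_ptr == nullptr || offset > demo_features_len ||
        length > demo_features_len - offset) {
        return -1;
    }
    // Copy the requested slice [offset, offset + length) into the caller's buffer.
    std::memcpy(out_ptr, demo_features + offset, length * sizeof(float));
    return 0;
}
```

Calling `get_demo_signal_data(1, 2, out)` fills `out` with the second and third values, while a request such as `get_demo_signal_data(3, 2, out)` is refused because it would run past the end of the four-value array.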