Hi marcpous,

First of all, thanks for your support.

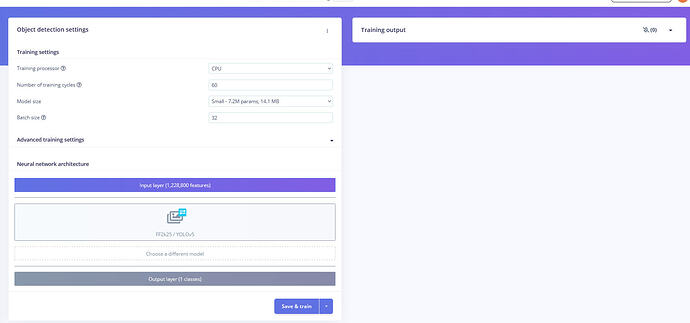

I get this error in the training output window some time after pressing the Save & train button.

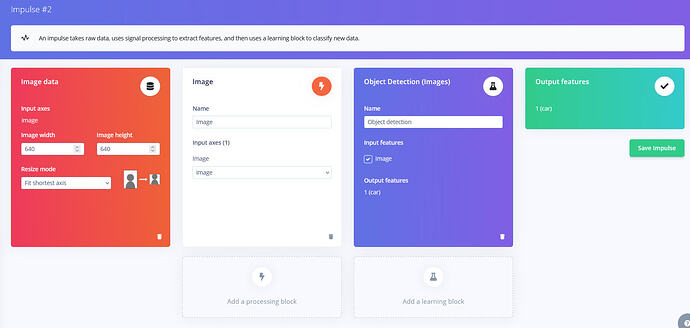

As the model, I’m using YOLOv5, imported following the instructions on GitHub.

Model used: Small - 7.2M params.

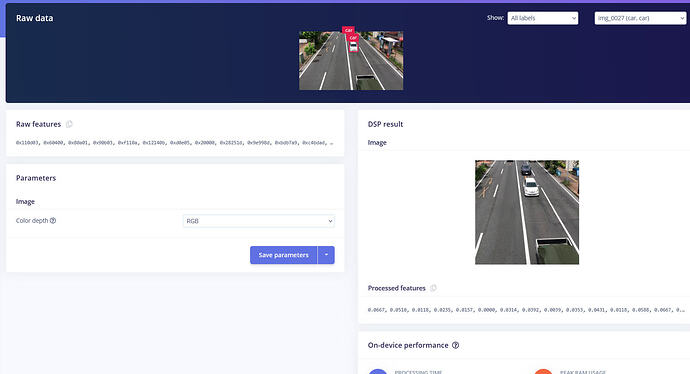

My input layer has 1,228,800 features: the training set consists of 640x640 images with RGB color depth.
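For reference, the input-layer size is simply the number of values in one image: 640 x 640 pixels times 3 RGB channels. A tiny Python check of that arithmetic:

```python
# Number of input features for one 640x640 RGB image
width, height = 640, 640
channels = 3  # RGB: one value per colour channel per pixel

features = width * height * channels
print(f"{features:,}")  # prints 1,228,800
```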

I’ve also attached the log for your reference.

```
[spinner-done] Job scheduled at 03 Sep 2025 11:41:42
[spinner-done] Job started at 03 Sep 2025 11:41:45
[spinner-done] Job scheduled at 03 Sep 2025 11:41:54
[spinner-done] Job started at 03 Sep 2025 11:41:55
Transforming Edge Impulse data format into something compatible with YOLOv5
[ 1/360] Converting images...
[289/360] Converting images...
[360/360] Converting images...
Transforming Edge Impulse data format into something compatible with YOLOv5 OK
train: weights=/app/yolov5s.pt, cfg=, data=/tmp/data/data.yaml, hyp=data/hyps/hyp.scratch-low.yaml, epochs=60, batch_size=32, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=ram, image_weights=False, device=, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs/train, name=yolov5_results, exist_ok=False, quad=False, cos_lr=False, label_smoothing=0.0, patience=100, freeze=[10], save_period=-1, seed=0, local_rank=-1, entity=None, upload_dataset=False, bbox_interval=-1, artifact_alias=latest
github: skipping check (not a git repository), for updates see https://github.com/ultralytics/yolov5
/usr/local/lib/python3.8/dist-packages/torch/cuda/__init__.py:88: UserWarning: CUDA initialization: Unexpected error from cudaGetDeviceCount(). Did you run some cuda functions before calling NumCudaDevices() that might have already set an error? Error 34: CUDA driver is a stub library (Triggered internally at ../c10/cuda/CUDAFunctions.cpp:109.)
  return torch._C._cuda_getDeviceCount() > 0
YOLOv5 🚀 2022-9-11 Python-3.8.10 torch-1.13.1+cu117 CPU
hyperparameters: lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0
Weights & Biases: run 'pip install wandb' to automatically track and visualize YOLOv5 🚀 runs in Weights & Biases
ClearML: run 'pip install clearml' to automatically track, visualize and remotely train YOLOv5 🚀 in ClearML
Comet: run 'pip install comet_ml' to automatically track and visualize YOLOv5 🚀 runs in Comet
TensorBoard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/
Overriding model.yaml nc=80 with nc=1
from n params module arguments
0 -1 1 3520 models.common.Conv [3, 32, 6, 2, 2]
1 -1 1 18560 models.common.Conv [32, 64, 3, 2]
2 -1 1 18816 models.common.C3 [64, 64, 1]
3 -1 1 73984 models.common.Conv [64, 128, 3, 2]
4 -1 2 115712 models.common.C3 [128, 128, 2]
5 -1 1 295424 models.common.Conv [128, 256, 3, 2]
6 -1 3 625152 models.common.C3 [256, 256, 3]
7 -1 1 1180672 models.common.Conv [256, 512, 3, 2]
8 -1 1 1182720 models.common.C3 [512, 512, 1]
9 -1 1 656896 models.common.SPPF [512, 512, 5]
10 -1 1 131584 models.common.Conv [512, 256, 1, 1]
11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']
12 [-1, 6] 1 0 models.common.Concat [1]
13 -1 1 361984 models.common.C3 [512, 256, 1, False]
14 -1 1 33024 models.common.Conv [256, 128, 1, 1]
15 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']
16 [-1, 4] 1 0 models.common.Concat [1]
17 -1 1 90880 models.common.C3 [256, 128, 1, False]
18 -1 1 147712 models.common.Conv [128, 128, 3, 2]
19 [-1, 14] 1 0 models.common.Concat [1]
20 -1 1 296448 models.common.C3 [256, 256, 1, False]
21 -1 1 590336 models.common.Conv [256, 256, 3, 2]
22 [-1, 10] 1 0 models.common.Concat [1]
23 -1 1 1182720 models.common.C3 [512, 512, 1, False]
24 [17, 20, 23] 1 16182 models.yolo.Detect [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]
Model summary: 270 layers, 7022326 parameters, 7022326 gradients, 15.9 GFLOPs
Transferred 343/349 items from /app/yolov5s.pt
freezing model.0.conv.weight
freezing model.0.bn.weight
freezing model.0.bn.bias
freezing model.1.conv.weight
freezing model.1.bn.weight
freezing model.1.bn.bias
freezing model.2.cv1.conv.weight
freezing model.2.cv1.bn.weight
freezing model.2.cv1.bn.bias
freezing model.2.cv2.conv.weight
freezing model.2.cv2.bn.weight
freezing model.2.cv2.bn.bias
freezing model.2.cv3.conv.weight
freezing model.2.cv3.bn.weight
freezing model.2.cv3.bn.bias
freezing model.2.m.0.cv1.conv.weight
freezing model.2.m.0.cv1.bn.weight
freezing model.2.m.0.cv1.bn.bias
freezing model.2.m.0.cv2.conv.weight
freezing model.2.m.0.cv2.bn.weight
freezing model.2.m.0.cv2.bn.bias
freezing model.3.conv.weight
freezing model.3.bn.weight
freezing model.3.bn.bias
freezing model.4.cv1.conv.weight
freezing model.4.cv1.bn.weight
freezing model.4.cv1.bn.bias
freezing model.4.cv2.conv.weight
freezing model.4.cv2.bn.weight
freezing model.4.cv2.bn.bias
freezing model.4.cv3.conv.weight
freezing model.4.cv3.bn.weight
freezing model.4.cv3.bn.bias
freezing model.4.m.0.cv1.conv.weight
freezing model.4.m.0.cv1.bn.weight
freezing model.4.m.0.cv1.bn.bias
freezing model.4.m.0.cv2.conv.weight
freezing model.4.m.0.cv2.bn.weight
freezing model.4.m.0.cv2.bn.bias
freezing model.4.m.1.cv1.conv.weight
freezing model.4.m.1.cv1.bn.weight
freezing model.4.m.1.cv1.bn.bias
freezing model.4.m.1.cv2.conv.weight
freezing model.4.m.1.cv2.bn.weight
freezing model.4.m.1.cv2.bn.bias
freezing model.5.conv.weight
freezing model.5.bn.weight
freezing model.5.bn.bias
freezing model.6.cv1.conv.weight
freezing model.6.cv1.bn.weight
freezing model.6.cv1.bn.bias
freezing model.6.cv2.conv.weight
freezing model.6.cv2.bn.weight
freezing model.6.cv2.bn.bias
freezing model.6.cv3.conv.weight
freezing model.6.cv3.bn.weight
freezing model.6.cv3.bn.bias
freezing model.6.m.0.cv1.conv.weight
freezing model.6.m.0.cv1.bn.weight
freezing model.6.m.0.cv1.bn.bias
freezing model.6.m.0.cv2.conv.weight
freezing model.6.m.0.cv2.bn.weight
freezing model.6.m.0.cv2.bn.bias
freezing model.6.m.1.cv1.conv.weight
freezing model.6.m.1.cv1.bn.weight
freezing model.6.m.1.cv1.bn.bias
freezing model.6.m.1.cv2.conv.weight
freezing model.6.m.1.cv2.bn.weight
freezing model.6.m.1.cv2.bn.bias
freezing model.6.m.2.cv1.conv.weight
freezing model.6.m.2.cv1.bn.weight
freezing model.6.m.2.cv1.bn.bias
freezing model.6.m.2.cv2.conv.weight
freezing model.6.m.2.cv2.bn.weight
freezing model.6.m.2.cv2.bn.bias
freezing model.7.conv.weight
freezing model.7.bn.weight
freezing model.7.bn.bias
freezing model.8.cv1.conv.weight
freezing model.8.cv1.bn.weight
freezing model.8.cv1.bn.bias
freezing model.8.cv2.conv.weight
freezing model.8.cv2.bn.weight
freezing model.8.cv2.bn.bias
freezing model.8.cv3.conv.weight
freezing model.8.cv3.bn.weight
freezing model.8.cv3.bn.bias
freezing model.8.m.0.cv1.conv.weight
freezing model.8.m.0.cv1.bn.weight
freezing model.8.m.0.cv1.bn.bias
freezing model.8.m.0.cv2.conv.weight
freezing model.8.m.0.cv2.bn.weight
freezing model.8.m.0.cv2.bn.bias
freezing model.9.cv1.conv.weight
freezing model.9.cv1.bn.weight
freezing model.9.cv1.bn.bias
freezing model.9.cv2.conv.weight
freezing model.9.cv2.bn.weight
freezing model.9.cv2.bn.bias
optimizer: SGD(lr=0.01) with parameter groups 57 weight(decay=0.0), 60 weight(decay=0.0005), 60 bias
albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))
train: Scanning '/tmp/data/train/labels' images and labels...: 0% 0/288 [00:00<?, ?it/s]
train: Scanning '/tmp/data/train/labels' images and labels...12 found, 0 missing, 0 empty, 0 corrupt: 4% 12/288 [00:00<00:02, 94.60it/s]
train: Scanning '/tmp/data/train/labels' images and labels...183 found, 0 missing, 6 empty, 0 corrupt: 64% 183/288 [00:00<00:00, 950.19it/s]
train: Scanning '/tmp/data/train/labels' images and labels...288 found, 0 missing, 10 empty, 0 corrupt: 100% 288/288 [00:00<00:00, 1245.83it/s]
train: WARNING: /tmp/data/train/images/image00031.jpg: 1 duplicate labels removed
train: WARNING: /tmp/data/train/images/image00092.jpg: 1 duplicate labels removed
train: WARNING: /tmp/data/train/images/image00169.jpg: 1 duplicate labels removed
train: WARNING: /tmp/data/train/images/image00197.jpg: 1 duplicate labels removed
train: WARNING: /tmp/data/train/images/image00217.jpg: 1 duplicate labels removed
train: WARNING: /tmp/data/train/images/image00246.jpg: 1 duplicate labels removed
train: New cache created: /tmp/data/train/labels.cache
0% 0/288 [00:00<?, ?it/s]
train: Caching images (0.0GB ram): 7% 20/288 [00:00<00:02, 108.16it/s]
train: Caching images (0.0GB ram): 14% 40/288 [00:00<00:01, 147.67it/s]
train: Caching images (0.1GB ram): 20% 57/288 [00:00<00:01, 153.71it/s]
train: Caching images (0.1GB ram): 27% 77/288 [00:00<00:01, 128.21it/s]
train: Caching images (0.1GB ram): 33% 94/288 [00:00<00:01, 139.19it/s]
train: Caching images (0.1GB ram): 38% 109/288 [00:00<00:01, 141.59it/s]
train: Caching images (0.2GB ram): 43% 124/288 [00:00<00:01, 143.50it/s]
train: Caching images (0.2GB ram): 50% 144/288 [00:00<00:00, 159.32it/s]
train: Caching images (0.2GB ram): 56% 161/288 [00:01<00:00, 160.13it/s]
train: Caching images (0.2GB ram): 62% 178/288 [00:01<00:00, 128.66it/s]
train: Caching images (0.2GB ram): 67% 193/288 [00:01<00:00, 133.42it/s]
train: Caching images (0.3GB ram): 73% 210/288 [00:01<00:00, 141.91it/s]
train: Caching images (0.3GB ram): 80% 231/288 [00:01<00:00, 128.48it/s]
train: Caching images (0.3GB ram): 87% 250/288 [00:01<00:00, 141.32it/s]
train: Caching images (0.3GB ram): 94% 270/288 [00:01<00:00, 155.78it/s]
train: Caching images (0.4GB ram): 100% 287/288 [00:01<00:00, 157.26it/s]
train: Caching images (0.4GB ram): 100% 288/288 [00:01<00:00, 144.57it/s]
val: Scanning '/tmp/data/valid/labels' images and labels...: 0% 0/72 [00:00<?, ?it/s]
val: Scanning '/tmp/data/valid/labels' images and labels...1 found, 0 missing, 0 empty, 0 corrupt: 1% 1/72 [00:00<00:14, 4.96it/s]
val: Scanning '/tmp/data/valid/labels' images and labels...72 found, 0 missing, 3 empty, 0 corrupt: 100% 72/72 [00:00<00:00, 240.00it/s]
val: WARNING: /tmp/data/valid/images/image00026.jpg: 2 duplicate labels removed
val: New cache created: /tmp/data/valid/labels.cache
0% 0/72 [00:00<?, ?it/s]
val: Caching images (0.0GB ram): 1% 1/72 [00:00<00:07, 9.92it/s]
val: Caching images (0.0GB ram): 3% 2/72 [00:00<00:11, 6.23it/s]
val: Caching images (0.0GB ram): 26% 19/72 [00:00<00:00, 62.38it/s]
val: Caching images (0.0GB ram): 39% 28/72 [00:00<00:00, 54.27it/s]
val: Caching images (0.0GB ram): 49% 35/72 [00:00<00:00, 46.12it/s]
val: Caching images (0.1GB ram): 57% 41/72 [00:00<00:00, 49.08it/s]
val: Caching images (0.1GB ram): 76% 55/72 [00:01<00:00, 71.28it/s]
val: Caching images (0.1GB ram): 89% 64/72 [00:01<00:00, 60.75it/s]
val: Caching images (0.1GB ram): 100% 72/72 [00:01<00:00, 55.32it/s]
AutoAnchor: 5.45 anchors/target, 1.000 Best Possible Recall (BPR). Current anchors are a good fit to dataset ✅
Plotting labels to runs/train/yolov5_results/labels.jpg...
Image sizes 640 train, 640 val
Using 8 dataloader workers
Logging results to runs/train/yolov5_results
Starting training for 60 epochs...
Epoch GPU_mem box_loss obj_loss cls_loss Instances Size
0% 0/9 [00:00<?, ?it/s]
0/59 0G 0.1252 0.04494 0 191 640: 0% 0/9 [04:20<?, ?it/s]
0/59 0G 0.1252 0.04494 0 191 640: 11% 1/9 [07:07<56:57, 427.18s/it]
0/59 0G 0.1246 0.04416 0 171 640: 11% 1/9 [09:16<56:57, 427.18s/it]
0/59 0G 0.1246 0.04416 0 171 640: 22% 2/9 [09:16<29:24, 252.01s/it]
0/59 0G 0.1244 0.04441 0 188 640: 22% 2/9 [11:30<29:24, 252.01s/it]
0/59 0G 0.1244 0.04441 0 188 640: 33% 3/9 [11:31<19:50, 198.35s/it]
0/59 0G 0.1235 0.04465 0 183 640: 33% 3/9 [14:04<19:50, 198.35s/it]
0/59 0G 0.1235 0.04465 0 183 640: 44% 4/9 [14:04<15:03, 180.65s/it]
0/59 0G 0.1229 0.04407 0 159 640: 44% 4/9 [16:36<15:03, 180.65s/it]
0/59 0G 0.1229 0.04407 0 159 640: 56% 5/9 [16:36<11:20, 170.21s/it]
0/59 0G 0.1222 0.04404 0 173 640: 56% 5/9 [19:32<11:20, 170.21s/it]
0/59 0G 0.1222 0.04404 0 173 640: 67% 6/9 [19:32<08:37, 172.38s/it]
0/59 0G 0.1212 0.04419 0 165 640: 67% 6/9 [22:07<08:37, 172.38s/it]
0/59 0G 0.1212 0.04419 0 165 640: 78% 7/9 [22:07<05:32, 166.47s/it]
0/59 0G 0.1202 0.04457 0 177 640: 78% 7/9 [24:51<05:32, 166.47s/it]
0/59 0G 0.1202 0.04457 0 177 640: 89% 8/9 [24:51<02:45, 165.81s/it]
0/59 0G 0.1189 0.04435 0 139 640: 89% 8/9 [26:57<02:45, 165.81s/it]
0/59 0G 0.1189 0.04435 0 139 640: 100% 9/9 [26:57<00:00, 153.49s/it]
0/59 0G 0.1189 0.04435 0 139 640: 100% 9/9 [26:58<00:00, 179.79s/it]
Traceback (most recent call last):
  File "train.py", line 630, in <module>
    main(opt)
  File "train.py", line 526, in main
    train(opt.hyp, opt, device, callbacks)
  File "train.py", line 349, in train
    results, maps, _ = validate.run(data_dict,
  File "/usr/local/lib/python3.8/dist-packages/torch/autograd/grad_mode.py", line 27, in decorate_context
    return func(*args, **kwargs)
  File "/app/yolov5/val.py", line 208, in run
    out, train_out = model(im) if compute_loss else (model(im, augment=augment), None)
  File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1194, in _call_impl
    return forward_call(*input, **kwargs)
  File "/app/yolov5/models/yolo.py", line 189, in forward
    return self._forward_once(x, profile, visualize)  # single-scale inference, train
  File "/app/yolov5/models/yolo.py", line 102, in _forward_once
    x = m(x)  # run
  File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1194, in _call_impl
    return forward_call(*input, **kwargs)
  File "/app/yolov5/models/common.py", line 160, in forward
    return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), 1))
  File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1194, in _call_impl
    return forward_call(*input, **kwargs)
  File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/container.py", line 204, in forward
    input = module(input)
  File "/usr/local/lib/python3.8/dist-packages/torch/nn/modules/module.py", line 1194, in _call_impl
    return forward_call(*input, **kwargs)
  File "/app/yolov5/models/common.py", line 113, in forward
    return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))
  File "/usr/local/lib/python3.8/dist-packages/torch/utils/data/_utils/signal_handling.py", line 66, in handler
    _error_if_any_worker_fails()
RuntimeError: DataLoader worker (pid 1488) is killed by signal: Bus error. It is possible that dataloader's workers are out of shared memory. Please try to raise your shared memory limit.
Application exited with code 1
```
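The RuntimeError at the end says the DataLoader workers may have run out of shared memory. As a rough, back-of-the-envelope sketch (my own assumption, not how Edge Impulse or YOLOv5 actually budget memory), the snippet below estimates how much image data the workers could hold at once with the settings from the log (batch_size=32, imgsz=640, workers=8). It assumes uint8 RGB image tensors, PyTorch's default `prefetch_factor` of 2 batches per worker, and that workers pass tensors through `/dev/shm` on Linux; it then reports the free space there. The helper names `batch_bytes` and `in_flight_bytes` are hypothetical.

```python
import shutil

MIB = 1024 ** 2


def batch_bytes(batch_size: int, imgsz: int, channels: int = 3, bytes_per_value: int = 1) -> int:
    """Bytes in one collated image batch of shape (N, C, H, W).

    Assumption: bytes_per_value=1 means uint8 pixels; pass 4 for float32 tensors.
    """
    return batch_size * channels * imgsz * imgsz * bytes_per_value


def in_flight_bytes(batch_size: int, imgsz: int, workers: int, prefetch_factor: int = 2) -> int:
    """Rough upper bound on image data the DataLoader workers hold at once.

    Assumption: each worker keeps `prefetch_factor` batches ready (PyTorch's default is 2);
    labels and any other per-batch overhead are ignored.
    """
    return batch_bytes(batch_size, imgsz) * workers * prefetch_factor


if __name__ == "__main__":
    # Values taken from the "train:" line of the log above.
    one = batch_bytes(batch_size=32, imgsz=640)
    total = in_flight_bytes(batch_size=32, imgsz=640, workers=8)
    print(f"one batch: {one / MIB:.1f} MiB, in flight: {total / MIB:.1f} MiB")

    try:
        # On Linux, PyTorch workers typically share tensors through /dev/shm.
        shm = shutil.disk_usage("/dev/shm")
        print(f"/dev/shm total: {shm.total / MIB:.1f} MiB, free: {shm.free / MIB:.1f} MiB")
    except OSError:
        print("/dev/shm is not available on this system")
```

Under these assumptions one batch is 37.5 MiB and up to 600.0 MiB may be in flight. The crash happened inside validation, which may use a different batch size, so this is only an order-of-magnitude check.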

Thanks for your help.

Fabio