Question/Issue:

Hello everyone,

I am currently working on an Alif E7 (Cortex-M55 + Ethos-U55) under Zephyr, and I am trying to integrate an Edge Impulse model so that it actually runs on the Arm Ethos-U NPU.

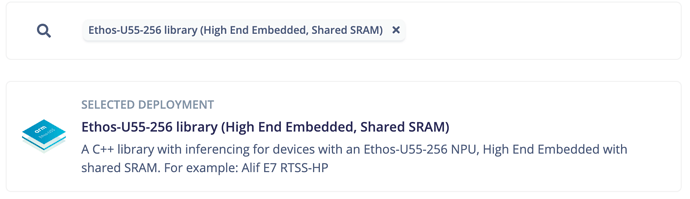

I generated the model from the Deployment page, selecting “Zephyr Library”.

However, when I integrate this model into my Zephyr project on the Alif E7, inference always runs on the CPU (Cortex-M55) and never on the NPU.

I also don’t see the `EI_CLASSIFIER_HAS_ETHOSU` flag in `model_metadata.h`, and no Ethos-U driver log output appears at runtime.

Has anyone managed to run an Edge Impulse model on the Ethos-U NPU in a Zephyr project on the Alif E7?

Is there a tutorial or example project for this?

Thank you in advance for any help or feedback!

Context/Use case:

I am trying to deploy an Edge Impulse model on an Alif E7 running Zephyr, with the goal of offloading inference from the Cortex-M55 CPU to the Arm Ethos-U55 NPU.

Steps Taken:

- Generated the model using Edge Impulse Deployment, selecting “Zephyr Library”.

- Integrated the generated files into the Alif E7 Zephyr project.

- Ran inference on the device and checked the runtime logs and `model_metadata.h` to verify whether the NPU was being used.