Hi Eoin,

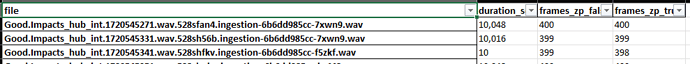

When I run the attached code, I get the following frame counts:

This is the associated project:

Using only the 10-second audio clip, I get 399 frames in Edge Impulse Studio (web version) for both zero_padding=False and zero_padding=True. However, when I run the code locally, I get 399 frames for zero_padding=False but only 398 frames for zero_padding=True.
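The local counts can be reproduced by hand from the two formulas in the script. A minimal sketch, assuming the clip is exactly 10 s long and sampled at 16 kHz (the sample rate is an assumption for illustration; any rate for which 50 ms and 25 ms are whole numbers of samples gives the same counts):

```python
import math

# Assumed clip: exactly 10 s at 16 kHz (hypothetical values for illustration).
sample_rate = 16000
length_signal = 10 * sample_rate                 # 160000 samples

# Frame length (50 ms) and stride (25 ms) from the script's main block,
# converted to samples with integer arithmetic.
frame_sample_length = sample_rate * 50 // 1000   # 800 samples
frame_stride = sample_rate * 25 // 1000          # 400 samples

# zero_padding=True branch of calculate_number_of_frames_ei
frames_padded = math.ceil((length_signal - frame_sample_length) / frame_stride)

# zero_padding=False branch, implementation_version >= 2
frames_unpadded = math.floor(
    (length_signal - (frame_sample_length - frame_stride)) / frame_stride
)

print(frames_padded)    # 398
print(frames_unpadded)  # 399
```

So the 398 vs. 399 difference comes straight out of the two formulas, independent of the audio content.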

Is there any processing step or frontend behavior in Edge Impulse that could explain why zero_padding=True results in 399 frames on the web, but only 398 locally?

Thank you and best regards,

David

"""
Minimal script to calculate frames using the Edge Impulse (EI) method.

Includes only the necessary functions and logic for the EI path.

Usage:
    python ei_mfe.py --audio your_audio.wav --zp true
"""

import argparse
import math

import numpy as np
import soundfile as sf
import speechpy

# ==== Utility ====

def ceil_unless_very_close_to_floor(v):
    """Ceil unless very close to floor (handles floating point precision)."""
    if (v > np.floor(v)) and (v - np.floor(v) < 0.001):
        v = np.floor(v)
    else:
        v = np.ceil(v)
    return v

# ==== EI: Calculate number of frames ====

def calculate_number_of_frames_ei(
    sig,
    sampling_frequency,
    implementation_version=3,
    frame_length=0.020,
    frame_stride=0.020,
    zero_padding=True
):
    """Calculate number of frames (EI version)."""
    assert sig.ndim == 1, f"Signal must be 1D, got {sig.shape}"

    length_signal = sig.shape[0]

    # Frame length in samples
    if implementation_version == 1:
        frame_sample_length = int(np.round(sampling_frequency * frame_length))
    else:
        frame_sample_length = int(
            ceil_unless_very_close_to_floor(sampling_frequency * frame_length)
        )

    # Frame stride in samples (kept as a float, as in the original)
    frame_stride = float(
        ceil_unless_very_close_to_floor(sampling_frequency * frame_stride)
    )

    if zero_padding:
        numframes = int(math.ceil((length_signal - frame_sample_length) / frame_stride))
    else:
        if implementation_version == 1:
            numframes = int(math.floor((length_signal - frame_sample_length) / frame_stride))
        elif implementation_version >= 2:
            x = (length_signal - (frame_sample_length - frame_stride))
            numframes = int(math.floor(x / frame_stride))
        else:
            raise ValueError(
                f"Invalid implementation_version={implementation_version}, must be 1,2,3"
            )

    return numframes, frame_sample_length, frame_stride

# ==== EI: Stack frames ====

def stack_frames_ei(
    sig,
    sampling_frequency,
    implementation_version=3,
    frame_length=0.020,
    frame_stride=0.020,
    zero_padding=True,
    filter=lambda x: np.ones((x,))
):
    """Frame a signal into overlapping frames (EI version)."""
    numframes, frame_sample_length, frame_stride = calculate_number_of_frames_ei(
        sig, sampling_frequency, implementation_version, frame_length, frame_stride, zero_padding
    )

    length_signal = sig.shape[0]

    if zero_padding:
        # Pad with zeros up to the length implied by numframes
        len_sig = int(numframes * frame_stride + frame_sample_length)
        additive_zeros = np.zeros((len_sig - length_signal,))
        signal = np.concatenate((sig, additive_zeros))
    else:
        # Truncate to the samples covered by numframes
        len_sig = int((numframes - 1) * frame_stride + frame_sample_length)
        signal = sig[0:len_sig]

    # Row i holds the sample indices of frame i
    indices = (
        np.tile(np.arange(0, frame_sample_length), (numframes, 1))
        + np.tile(np.arange(0, numframes * frame_stride, frame_stride), (frame_sample_length, 1)).T
    )
    indices = np.array(indices, dtype=np.int32)

    frames = signal[indices]
    window = np.tile(filter(frame_sample_length), (numframes, 1))
    Extracted_Frames = frames * window

    return Extracted_Frames, numframes

# ==== EI: MFE Extraction ====

def sp_mfe_ei(
    signal,
    sampling_frequency,
    frame_length,
    frame_stride,
    num_filters,
    fft_length,
    low_frequency,
    high_frequency,
    zero_padding=True
):
    """Extract MFE features using EI frame calculation."""
    frames, numframes = stack_frames_ei(
        signal,
        sampling_frequency=sampling_frequency,
        frame_length=frame_length,
        frame_stride=frame_stride,
        zero_padding=zero_padding
    )

    high_frequency = high_frequency or sampling_frequency / 2

    power_spectrum = speechpy.processing.power_spectrum(frames, fft_length)

    frame_energies = np.sum(power_spectrum, axis=1)
    frame_energies = speechpy.functions.zero_handling(frame_energies)

    filter_banks = speechpy.feature.filterbanks(
        num_filters,
        power_spectrum.shape[1],
        sampling_frequency,
        low_frequency,
        high_frequency
    )

    features = np.dot(power_spectrum, filter_banks.T)
    features = speechpy.functions.zero_handling(features)

    return features, numframes

# ==== Main ====

if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="EI MFE Feature Extraction")
    parser.add_argument("--audio", type=str, required=True, help="Path to audio file")
    parser.add_argument("--zp", type=str, default="true",
                        help="Zero padding (true/false). Default: true")
    args = parser.parse_args()

    zero_padding = args.zp.lower() == "true"

    # Parameters
    FRAME_LENGTH = 0.05   # 50 ms
    FRAME_STRIDE = 0.025  # 25 ms
    NUM_FILTERS = 32
    FFT_LENGTH = 1024
    LOW_FREQ = 50
    HIGH_FREQ = 4000

    # Load audio
    signal, samplerate = sf.read(args.audio)

    # Extract features (EI version)
    features, numframes = sp_mfe_ei(
        signal,
        samplerate,
        frame_length=FRAME_LENGTH,
        frame_stride=FRAME_STRIDE,
        num_filters=NUM_FILTERS,
        fft_length=FFT_LENGTH,
        low_frequency=LOW_FREQ,
        high_frequency=HIGH_FREQ,
        zero_padding=zero_padding
    )

    # Results
    print(f"Sample rate: {samplerate} Hz")
    print(f"Signal length: {len(signal)} samples")
    print(f"Zero padding: {zero_padding}")
    print(f"Number of frames (EI): {numframes}")
    print(f"Feature shape before flatten: {features.shape}")
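The zero-padding path of stack_frames_ei can be checked in isolation, without loading any audio. A minimal sketch, assuming a clip of exactly 10 s at 16 kHz (the sample rate is an assumption, not taken from the project), that reuses the script's arithmetic to show how many zeros get appended and how many trailing samples fall outside every frame:

```python
import math

# Assumed clip: exactly 10 s at 16 kHz (hypothetical values for illustration).
sample_rate = 16000
length_signal = 10 * sample_rate
frame_sample_length = sample_rate * 50 // 1000   # 50 ms frame -> 800 samples
frame_stride = sample_rate * 25 // 1000          # 25 ms stride -> 400 samples

# Frame count from the zero_padding=True branch.
numframes = math.ceil((length_signal - frame_sample_length) / frame_stride)

# Length the signal is padded to, and the number of zeros that implies.
padded_length = numframes * frame_stride + frame_sample_length
zeros_added = padded_length - length_signal

# The index matrix has numframes rows, so the last frame starts at
# (numframes - 1) * frame_stride and ends frame_sample_length later.
last_frame_end = (numframes - 1) * frame_stride + frame_sample_length
uncovered = length_signal - last_frame_end

print(numframes, zeros_added, uncovered)  # 398 0 400
```

Under these assumptions the padded path appends no zeros and stops 400 samples (one stride) short of the end of the clip. This describes only the local script; whether Studio frames the clip the same way is not something this sketch can show.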