Thanks Jan. That worked quite well.

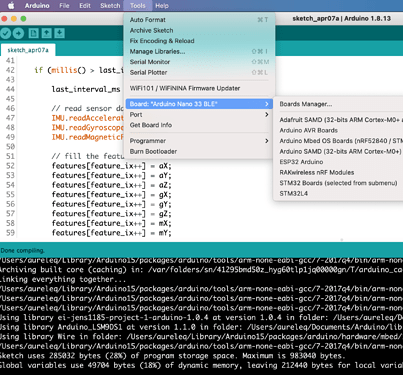

In the meantime I bought some new hardware so that I can run the model offline on the device: the Arduino Nano 33 BLE Sense. As you suggested, I used all three motion sensors (accelerometer, gyroscope, magnetometer). To record and upload the data I wrote my own sketch, which reads all three sensors into float variables and prints them as one comma-separated line:

    float aX, aY, aZ, gX, gY, gZ, mX, mY, mZ;

    // Only print a line when all three sensors have a new reading
    if (IMU.accelerationAvailable() && IMU.gyroscopeAvailable() && IMU.magneticFieldAvailable()) {
      IMU.readAcceleration(aX, aY, aZ);
      IMU.readGyroscope(gX, gY, gZ);
      IMU.readMagneticField(mX, mY, mZ);

      // One CSV line per sample: acc, gyro, mag (3 decimals each)
      Serial.print(aX, 3);
      Serial.print(',');
      Serial.print(aY, 3);
      Serial.print(',');
      Serial.print(aZ, 3);
      Serial.print(',');
      Serial.print(gX, 3);
      Serial.print(',');
      Serial.print(gY, 3);
      Serial.print(',');
      Serial.print(gZ, 3);
      Serial.print(',');
      Serial.print(mX, 3);
      Serial.print(',');
      Serial.print(mY, 3);
      Serial.print(',');
      Serial.println(mZ, 3);
    }

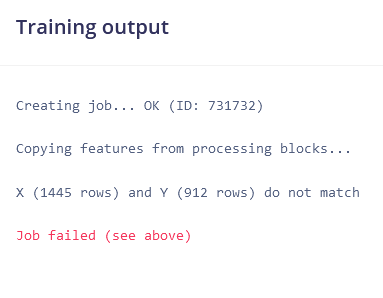

I used the data forwarder to upload the data. In the Create Impulse section I separated the sensors by creating three spectral feature blocks (one per sensor). I used a window size of 1000 ms and a window increase of 500 ms. The data was recorded at 20 Hz, which was the default setting.
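To double-check my understanding of these settings, here is a small sketch of the arithmetic in plain C++. The constant and function names are my own (hypothetical, not from any library), and I am assuming that a window simply holds every sample recorded during its duration and that windows start every "window increase" milliseconds.

```cpp
#include <cstddef>

// Values taken from the settings described above; names are hypothetical.
constexpr std::size_t kSampleRateHz  = 20;    // recording frequency
constexpr std::size_t kWindowSizeMs  = 1000;  // window size
constexpr std::size_t kWindowStepMs  = 500;   // window increase
constexpr std::size_t kAxesPerSample = 9;     // aX..aZ, gX..gZ, mX..mZ

// Number of samples that fall into one window.
constexpr std::size_t samplesPerWindow() {
    return kSampleRateHz * kWindowSizeMs / 1000;
}

// Number of raw float values in one window (all axes of all samples).
constexpr std::size_t valuesPerWindow() {
    return samplesPerWindow() * kAxesPerSample;
}

// Number of overlapping windows that fit into a recording of the given length.
constexpr std::size_t windowsInRecording(std::size_t recordingMs) {
    return recordingMs < kWindowSizeMs
               ? 0
               : (recordingMs - kWindowSizeMs) / kWindowStepMs + 1;
}
```

Under these assumptions one window covers 20 samples, i.e. 180 raw values, and a 10 second recording yields 19 overlapping windows.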

I recorded my gesture and trained the model. Now I want to deploy the model back to my Arduino, so I downloaded the zip file and added it as a library in the Arduino IDE.

My problem now is that the examples that came with the library only use the accelerometer data, not all three sensors.

So I guess I have to write my own sketch. Since I want continuous sensor reading and classification, I think the best approach is to take the “nano_ble33_sense_accelerometer_continuous” example as a starting point and modify it.
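My rough idea of what the modified sketch needs is a buffer that collects all nine values per sample instead of three. Below is a minimal sketch of that in plain C++, without any Edge Impulse calls. `WindowBuffer`, `push` and `slide` are names I made up, and I am only assuming that the classifier expects the values interleaved sample by sample, in the same order in which I uploaded them (acc, gyro, mag).

```cpp
#include <algorithm>
#include <array>
#include <cstddef>

constexpr std::size_t kAxes    = 9;   // aX..aZ, gX..gZ, mX..mZ
constexpr std::size_t kSamples = 20;  // 1000 ms window at 20 Hz

// Hypothetical helper: one window of interleaved sensor values.
struct WindowBuffer {
    std::array<float, kAxes * kSamples> values{};
    std::size_t count = 0;  // number of complete samples stored so far

    // Appends one 9-value sample; returns true once the window is full.
    // Samples pushed into an already full window are ignored.
    bool push(const std::array<float, kAxes>& sample) {
        if (count == kSamples) {
            return true;
        }
        std::copy(sample.begin(), sample.end(), values.begin() + count * kAxes);
        ++count;
        return count == kSamples;
    }

    // Drops the oldest `step` samples so the next window overlaps this one.
    void slide(std::size_t step) {
        if (step >= count) {
            count = 0;
            return;
        }
        std::copy(values.begin() + step * kAxes,
                  values.begin() + count * kAxes,
                  values.begin());
        count -= step;
    }
};
```

In the Arduino loop, each sample array would be filled from `IMU.readAcceleration`, `IMU.readGyroscope` and `IMU.readMagneticField`; once `push` returns true the window would be handed to the classifier, and `slide(10)` would correspond to the 500 ms window increase.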

Could you please show me how to do that? What do I have to change? Sorry, I am a real newbie at all this. I tried to find a similar topic in the forum, but without much success. Thanks for your help!