I’m following this tutorial for deploying a model to C++ from the Python SDK, and I want to recreate the same C++ deployment I get from Edge Impulse Studio. One difference I see is that Studio generates files like tflite_learn_70_compiled.cpp, whereas the Python API produces tflite_learn_3.cpp, and the contents of that file are less interpretable. I haven’t noticed this difference before and would like to know how to recreate the more readable output that Studio produces.


Hi @jarnold1,

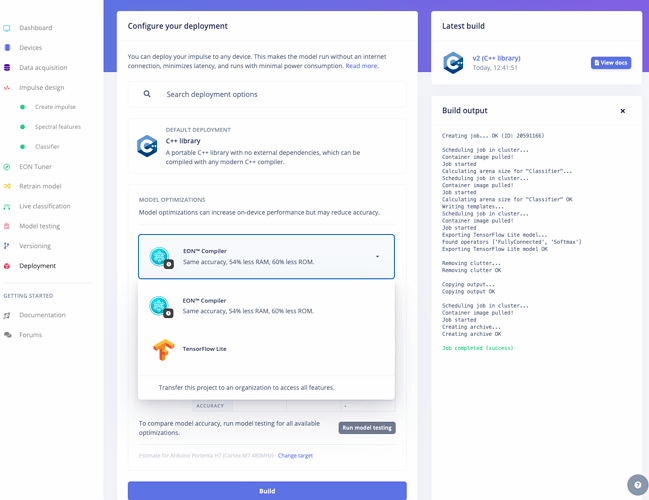

When you deploy using the Python SDK, it defaults to the interpreted TFLite Micro engine. That means the model is stored as a binary blob (an array of hex bytes that is not human-readable) and loaded at runtime by the TFLite Micro interpreter.

If you change the inference engine to EON Compiler, the interpreted byte code is translated to static C++ code, which makes the model a little easier to read in code and also saves memory.
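Here is a rough sketch of how that might look from the Python SDK. I'm assuming the `engine` argument of `ei.model.deploy()` accepts `"tflite-eon"` for the EON Compiler and `"tflite"` for the interpreted default, so please check those strings against the SDK reference for your version. The helper names, the output file name, and the `Classification()` output type are placeholders I made up for illustration; swap in whatever matches your project.

```python
def build_deploy_kwargs(model_path, use_eon=True):
    """Collect keyword arguments for ei.model.deploy() (hypothetical helper)."""
    return {
        "model": model_path,
        "deploy_target": "zip",  # C++ library delivered as a .zip archive
        # Assumed engine names: "tflite" = interpreted TFLite Micro,
        # "tflite-eon" = EON Compiler (static C++ source).
        "engine": "tflite-eon" if use_eon else "tflite",
    }


def deploy_cpp_library(model_path, api_key, out_path="my_model_cpp.zip", use_eon=True):
    """Request a C++ library build and write the returned archive to disk."""
    import edgeimpulse as ei  # imported lazily so the helper above works without the SDK

    ei.API_KEY = api_key
    deploy_bytes = ei.model.deploy(
        # Classification() is an assumption; use the output type your model needs.
        model_output_type=ei.model.output_type.Classification(),
        **build_deploy_kwargs(model_path, use_eon),
    )
    # deploy() returns an in-memory bytes buffer holding the archive.
    with open(out_path, "wb") as f:
        f.write(deploy_bytes.getvalue())
    return out_path
```

Calling `deploy_cpp_library("model.tflite", "ei_...")` with your own model file and API key should then give you an archive to unzip and compare against the Studio export.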