Question/Issue: I am trying to deploy this model to an ESP-WROOM-32.

I came across this example: edgeimpulse/esp32-platformio-edge-impulse-standalone-example (GitHub), a minimal example for running an Edge Impulse designed neural network on an ESP32 dev kit using PlatformIO. It works well, but it classifies a static buffer, whereas my project needs live activity recognition. Can you please guide me through the deployment?
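To make the "live" requirement concrete, this is roughly the buffering I think continuous recognition needs: keep the most recent window of samples and re-classify as new readings arrive, instead of classifying one fixed buffer. It is a minimal sketch in plain C++ that does not call the Edge Impulse SDK. The names `SlidingWindow`, `push`, `full` and `data` are my own placeholders, and I am assuming interleaved x, y, z values.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of the Edge Impulse SDK.
// Keeps the most recent `window_size` values (interleaved per axis) in
// chronological order so they can be passed to a classifier as one flat frame.
class SlidingWindow {
public:
    SlidingWindow(std::size_t window_size, std::size_t axes)
        : buf_(window_size, 0.0f), axes_(axes), filled_(0) {
        // The window must hold a whole number of samples.
        assert(axes > 0 && window_size % axes == 0);
    }

    // Drop the oldest sample and append the newest one (`axes_` values).
    void push(const float *sample) {
        const std::size_t n = buf_.size();
        for (std::size_t i = axes_; i < n; i++) {
            buf_[i - axes_] = buf_[i];
        }
        for (std::size_t i = 0; i < axes_; i++) {
            buf_[n - axes_ + i] = sample[i];
        }
        if (filled_ < n) {
            filled_ += axes_;
        }
    }

    // True once enough samples have arrived to fill the whole window.
    bool full() const { return filled_ >= buf_.size(); }

    const float *data() const { return buf_.data(); }
    std::size_t size() const { return buf_.size(); }

private:
    std::vector<float> buf_;
    std::size_t axes_;
    std::size_t filled_;
};
```

On the device I would presumably size the window with `EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE`, push one reading per sampling interval, and classify whenever `full()` is true, but I have not verified that this is the recommended approach.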

I also tried deploying from the Arduino IDE with the following code:

    // Include the Arduino library here (something like your_project_inference.h)
    // In the Arduino IDE see **File > Examples > Your project name - Edge Impulse > Static buffer** to get the exact name
    #include <SDP-_6_Class_Dataset_inferencing.h>
    #include <Adafruit_MPU6050.h>
    #include <Adafruit_Sensor.h>
    #include <Wire.h>

    #define CONVERT_G_TO_MS2 9.80665f
    #define FREQUENCY_HZ EI_CLASSIFIER_FREQUENCY
    #define INTERVAL_MS (1000 / (FREQUENCY_HZ + 1))

    static unsigned long last_interval_ms = 0;

    // to classify 1 frame of data you need EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE values
    float features[EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE];

    // keep track of where we are in the feature array
    size_t feature_ix = 0;

    Adafruit_MPU6050 mpu;

    void setup() {
        Serial.begin(115200);
        Serial.println("Started");

        if (!mpu.begin()) {
            Serial.println("Failed to initialize IMU!");
            while (1)
                ;
        }

        mpu.setAccelerometerRange(MPU6050_RANGE_2_G);
    }

    void loop() {
        float x, y, z;

        if (millis() > last_interval_ms + INTERVAL_MS) {
            last_interval_ms = millis();

            // read sensor data in exactly the same way as in the Data Forwarder example
            sensors_event_t a, g, temp;
            mpu.getEvent(&a, &g, &temp);
            x = a.acceleration.x;
            y = a.acceleration.y;
            z = a.acceleration.z;

            // fill the features buffer
            features[feature_ix++] = x * CONVERT_G_TO_MS2;
            features[feature_ix++] = y * CONVERT_G_TO_MS2;
            features[feature_ix++] = z * CONVERT_G_TO_MS2;

            // features buffer full? then classify!
            if (feature_ix == EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE) {
                ei_impulse_result_t result;

                // create signal from features frame
                signal_t signal;
                numpy::signal_from_buffer(features, EI_CLASSIFIER_DSP_INPUT_FRAME_SIZE, &signal);

                // run classifier
                EI_IMPULSE_ERROR res = run_classifier(&signal, &result, false);
                ei_printf("run_classifier returned: %d\n", res);
                if (res != 0) return;

                // print predictions
                ei_printf("Predictions (DSP: %d ms., Classification: %d ms., Anomaly: %d ms.): \n",
                          result.timing.dsp, result.timing.classification, result.timing.anomaly);

                // print the predictions
                for (size_t ix = 0; ix < EI_CLASSIFIER_LABEL_COUNT; ix++) {
                    ei_printf("%s:\t%.5f\n", result.classification[ix].label, result.classification[ix].value);
                }
    #if EI_CLASSIFIER_HAS_ANOMALY == 1
                ei_printf("anomaly:\t%.3f\n", result.anomaly);
    #endif

                // reset features frame
                feature_ix = 0;
            }
        }
    }

    void ei_printf(const char *format, ...) {
        static char print_buf[1024] = { 0 };

        va_list args;
        va_start(args, format);
        int r = vsnprintf(print_buf, sizeof(print_buf), format, args);
        va_end(args);

        if (r > 0) {
            Serial.write(print_buf);
        }
    }

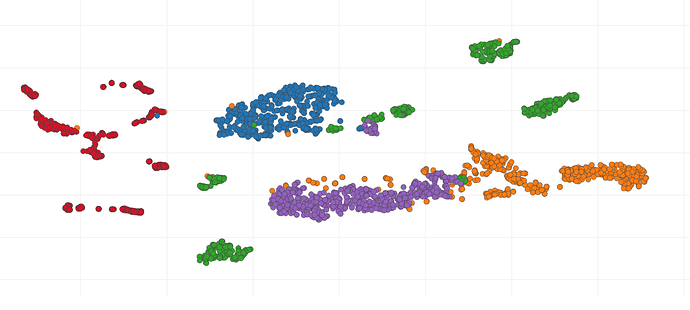

It is completely confusing the activities. For instance, it reports walking when the device is idle. If possible, can you also guide me through selecting a suitable model for my data? I suspect that my model is flawed.
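One thing I still need to rule out before blaming the model is a unit mismatch. As far as I can tell, the Adafruit unified sensor API already reports `a.acceleration` in m/s², so multiplying by `CONVERT_G_TO_MS2` again may scale the values by roughly 9.8 compared with the training data. I have not confirmed which units my training data used. A minimal sketch of the check I plan to run on a reading taken while the board lies still (the helper name `looks_like_ms2` and the tolerance are my own assumptions):

```cpp
#include <cmath>

// Hypothetical helper, not part of any library.
// Returns true if a sample taken while the board is at rest has a magnitude
// close to 1 g expressed in m/s^2 (about 9.81). A magnitude near 1.0 would
// suggest the values are in g, and one near 96 would suggest that values
// already in m/s^2 were multiplied by 9.80665 a second time.
bool looks_like_ms2(float x, float y, float z, float tolerance = 1.5f) {
    const float kGravityMs2 = 9.80665f;
    const float magnitude = std::sqrt(x * x + y * y + z * z);
    return std::fabs(magnitude - kGravityMs2) <= tolerance;
}
```

If the raw `a.acceleration` values pass this check but the values written into `features` do not, the extra conversion would be my first suspect rather than the model itself.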

Project ID: 214635

Context/Use case: