Hello @AIWintermuteAI.

Thank you for your response!

Sorry, I wasn’t clear in my post. I meant that the object detection model should detect multiple objects even when their bounding boxes overlap, or when the objects do not occupy most of the image. For example, if only half of a lamp or a chair is visible in the image, the model should still detect it. Do you think the models available at Edge Impulse are suitable for this application?

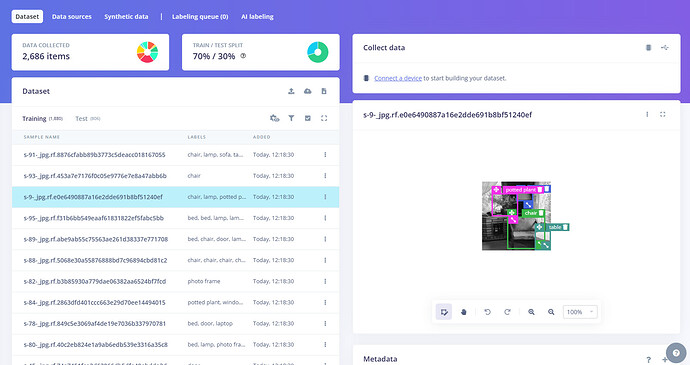

Well, that said, I gave it a try using the furniture dataset available from Ultralytics called HomeObjects-3K (HomeObjects-3K Dataset - Ultralytics YOLO Docs). It has 3000 images and 12 classes:

- bed

- sofa

- chair

- table

- lamp

- tv

- laptop

- wardrobe

- window

- door

- potted plant

- photo frame

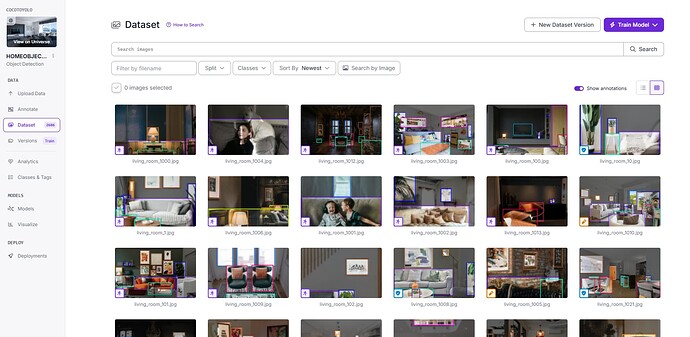

Although it doesn’t have objects like an oven or a fridge, it is the best dataset I found, with a good distribution of images across the objects and a decent number of classes. Other datasets had something like 1000 to 2000 chair images, but only 98 sink images. The only disappointment is that this dataset is available only in YOLO .txt format.

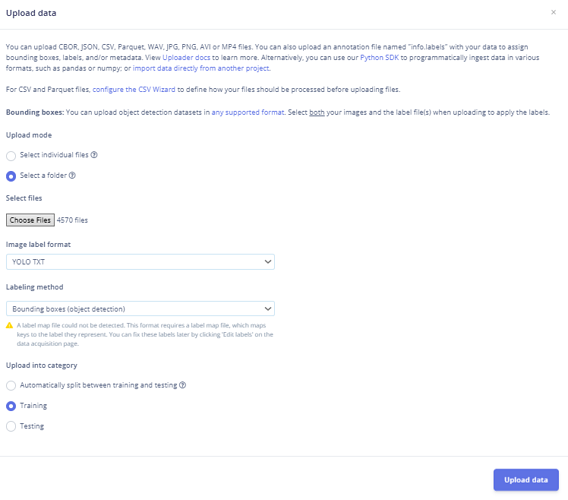

I went digging and found that I can use Roboflow to convert this dataset to Pascal VOC. I imported the dataset folder along with the label files, and everything seemed fine.
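For reference, this is roughly what the conversion has to do for each label line. It is only a minimal sketch of the coordinate math, assuming the usual YOLO layout (`class x_center y_center width height`, all normalized to 0..1) and Pascal VOC pixel corners (`xmin ymin xmax ymax`). The function names are my own, and this is not how Roboflow implements it.

```python
def parse_yolo_line(line):
    """Split one YOLO label line into (class_id, (xc, yc, w, h))."""
    parts = line.split()
    return int(parts[0]), tuple(float(p) for p in parts[1:5])


def yolo_to_voc(x_center, y_center, width, height, img_w, img_h):
    """Convert a normalized YOLO box to Pascal VOC pixel corners."""
    xmin = (x_center - width / 2) * img_w
    ymin = (y_center - height / 2) * img_h
    xmax = (x_center + width / 2) * img_w
    ymax = (y_center + height / 2) * img_h

    def clamp(value, upper):
        # Round to whole pixels and keep the corner inside the image.
        return max(0, min(upper, int(round(value))))

    return (clamp(xmin, img_w), clamp(ymin, img_h),
            clamp(xmax, img_w), clamp(ymax, img_h))


# Made-up label line for a 640x640 image (not taken from the dataset).
class_id, box = parse_yolo_line("2 0.5 0.5 0.25 0.5")
print(class_id, yolo_to_voc(*box, img_w=640, img_h=640))
```

The image size has to come from the image file itself, because YOLO label files do not store it.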

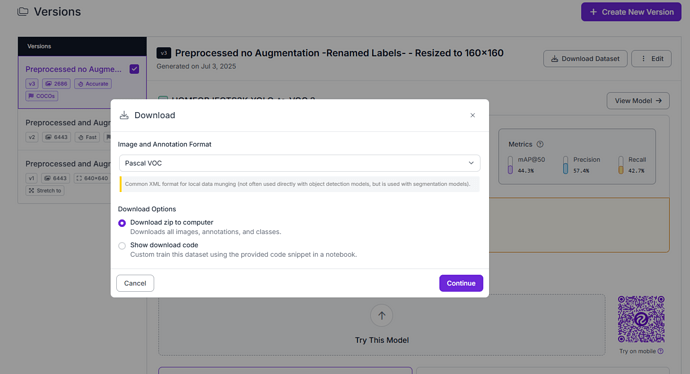

I then created three versions of the dataset. The first one was just a test. Of the other two, one is preprocessed, augmented and resized to 640x640, and the other is only preprocessed and resized to 160x160. (I chose these resolutions based on the guides I read before; resizing improves the model’s latency on the ESP32S3 Sense.) The augmented version has 6443 images and the non-augmented one has 2686 images. That is expected, since augmentation adds distorted copies of the same images to the dataset. I decided to test first with the second dataset (resized to 160x160 and not augmented).

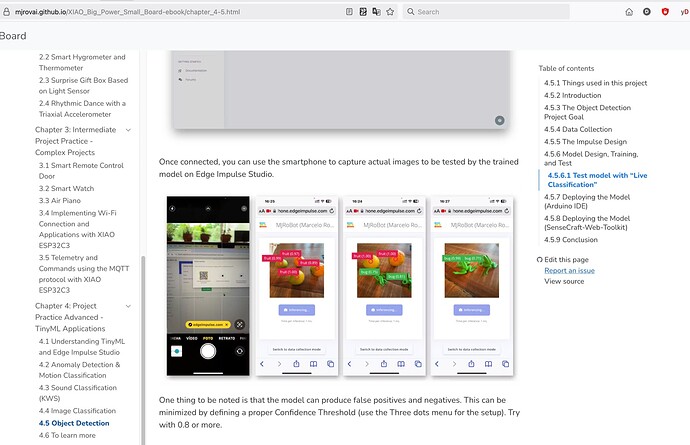

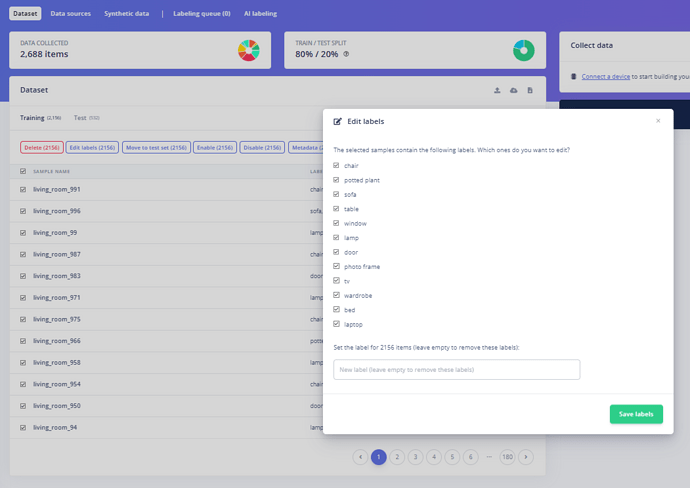

Then I downloaded the dataset as Pascal VOC and imported it into the Edge Impulse platform, selecting the automatic train/test split. Everything went well. My only concern is that the Pascal VOC folder downloaded to my PC had train, test and valid folders. I don’t know what happened to the “valid” images or how Edge Impulse interpreted them.
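One way to check this would be to count the images in each split folder locally and compare the total with what Edge Impulse reports after the upload. A small sketch, assuming the export has `train`, `valid` and `test` subfolders with the images sitting next to their `.xml` files; the root path is a placeholder, not my real folder name.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_split_images(root, splits=("train", "valid", "test")):
    """Return {split: number of image files} for each split folder under root."""
    counts = {}
    for split in splits:
        folder = Path(root) / split
        if not folder.is_dir():
            # A missing folder simply counts as zero images.
            counts[split] = 0
            continue
        counts[split] = sum(
            1 for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


# "HomeObjects-3K-voc" is a placeholder for the unzipped export folder.
counts = count_split_images("HomeObjects-3K-voc")
print(counts, "total:", sum(counts.values()))
```

If the total in Edge Impulse matches train + valid + test, the “valid” images were uploaded and then redistributed by the automatic split; if it matches only train + test, they were skipped.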

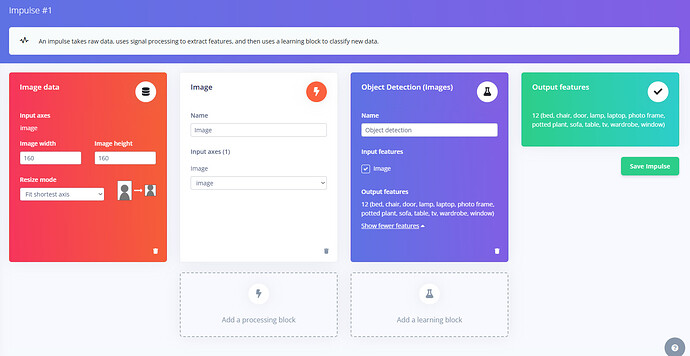

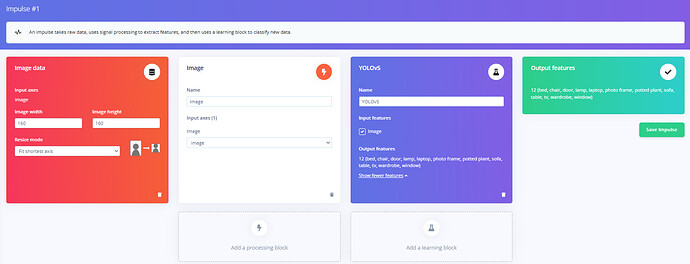

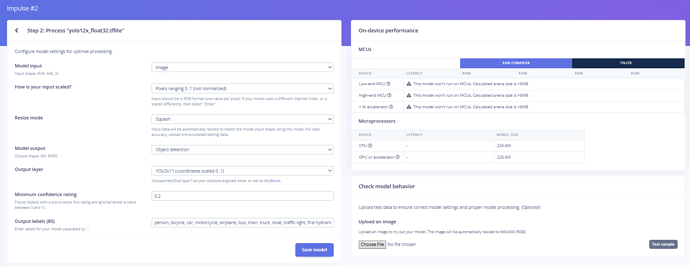

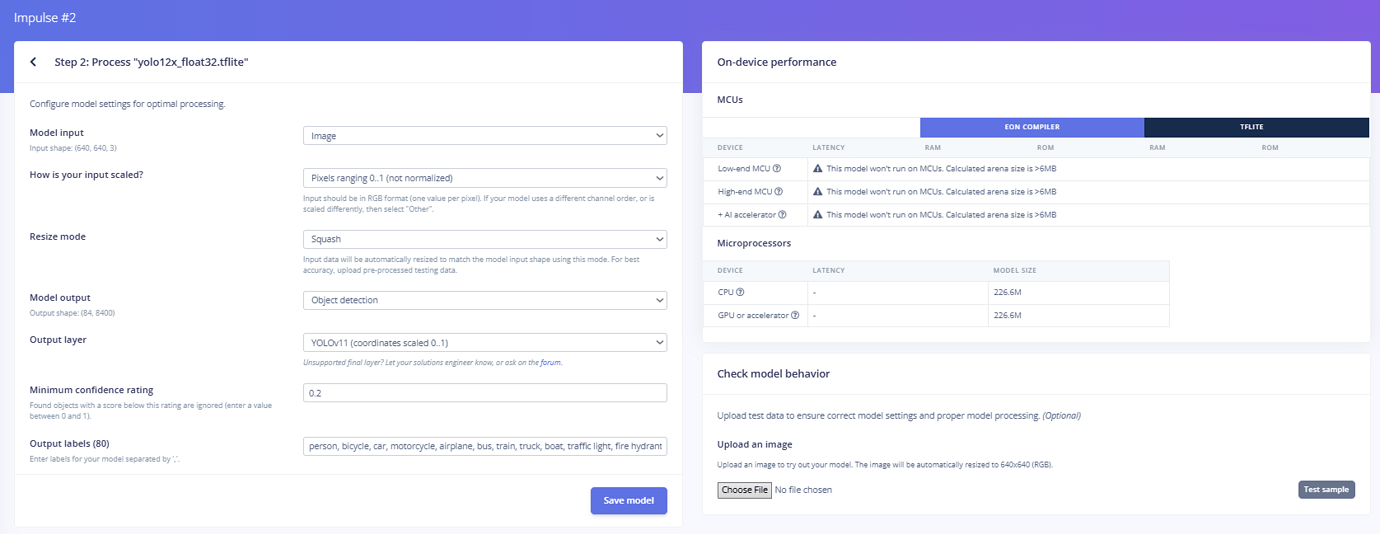

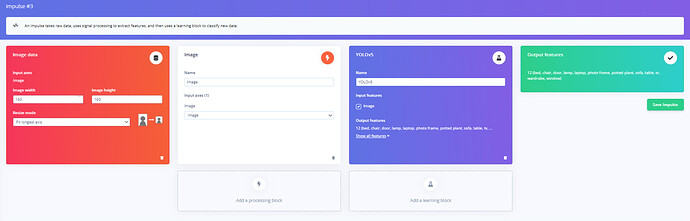

I then created an impulse. All the classes seemed to be automatically detected. Good!

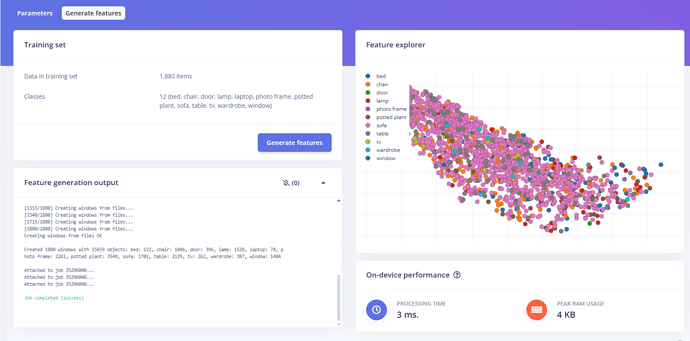

I generated the features. Everything seemed fine as well.

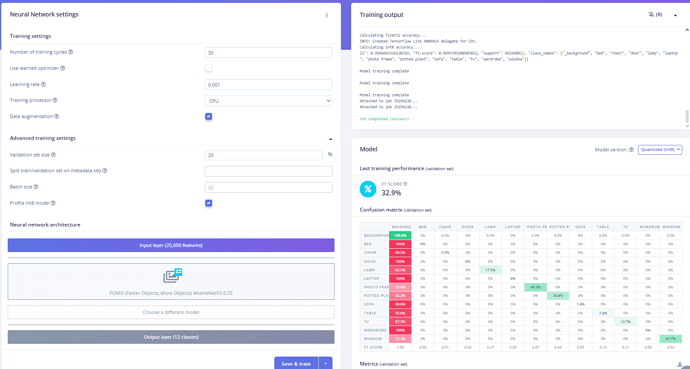

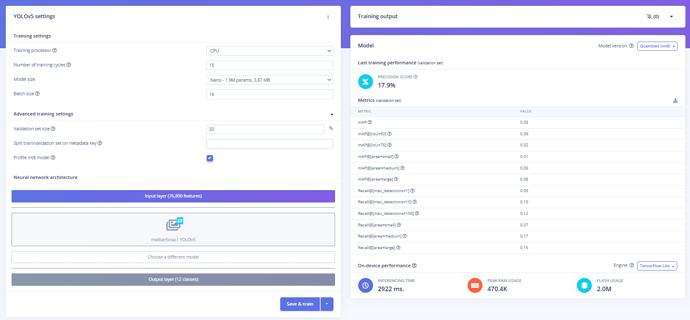

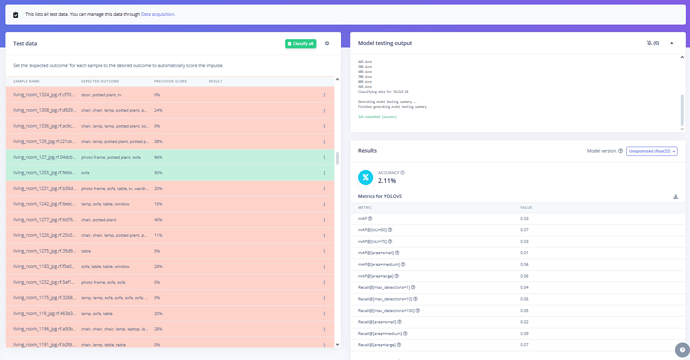

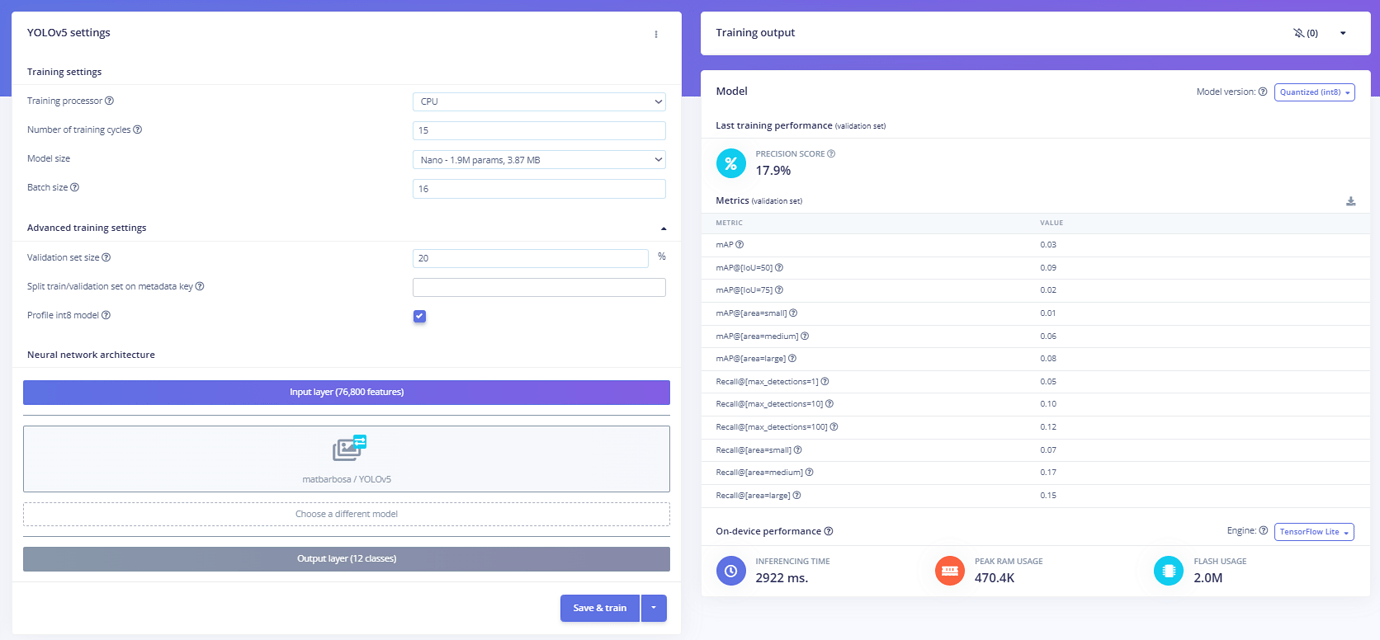

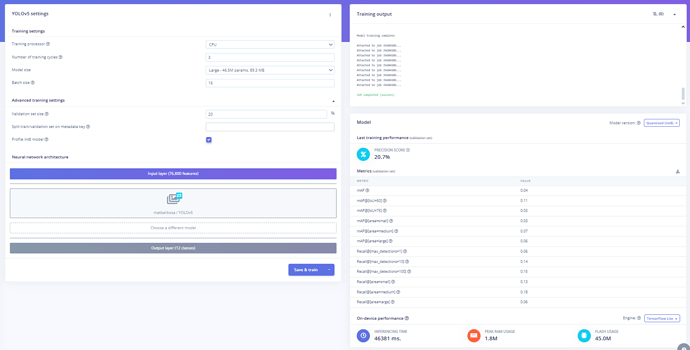

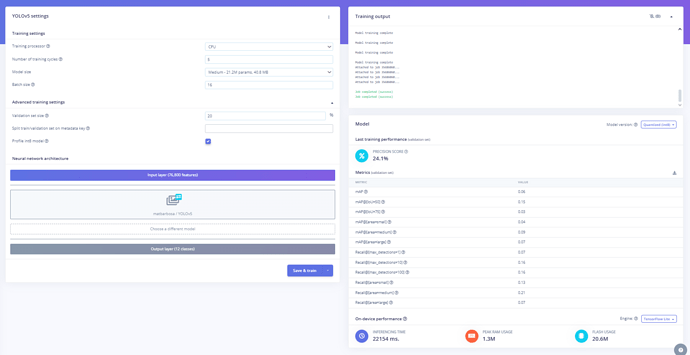

When I trained the model (FOMO with alpha 0.35), I ended up with a really bad result: an F1 score of 32.9% and a weird confusion matrix. Most of the objects are being detected as “background”.
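This ties back to my original question about overlapping objects. As far as I understand it, FOMO predicts object centroids on a coarse grid rather than full bounding boxes, and I am assuming the default grid is 1/8 of the input resolution (so 20x20 cells for a 160x160 input, which would match the 8-pixel steps in the device output). The sketch below uses made-up boxes, not boxes from the dataset, to show how two overlapping objects can end up with their centroids in the same cell.

```python
from collections import defaultdict

INPUT_SIZE = 160  # input resolution I used
CELL = 8          # assumed FOMO reduction factor (1/8 of the input)


def centroid_cell(xmin, ymin, xmax, ymax, cell=CELL):
    """Grid cell (column, row) that contains the centre of a pixel box."""
    cx = (xmin + xmax) / 2
    cy = (ymin + ymax) / 2
    return int(cx // cell), int(cy // cell)


def find_collisions(boxes):
    """Return the cells that hold the centroid of more than one object."""
    cells = defaultdict(list)
    for label, box in boxes:
        cells[centroid_cell(*box)].append(label)
    return {cell: labels for cell, labels in cells.items() if len(labels) > 1}


# Made-up Pascal VOC style boxes (xmin, ymin, xmax, ymax) in a 160x160 image.
boxes = [
    ("chair", (40, 60, 100, 140)),  # centre (70, 100)
    ("table", (30, 80, 110, 122)),  # centre (70, 101), overlaps the chair
    ("lamp", (120, 10, 150, 50)),   # centre (135, 30)
]

print("grid cells:", (INPUT_SIZE // CELL) ** 2)
print("collisions:", find_collisions(boxes))
```

With only three objects in 400 cells, almost every cell is background, so I wonder whether that imbalance is part of what I am seeing in the confusion matrix.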

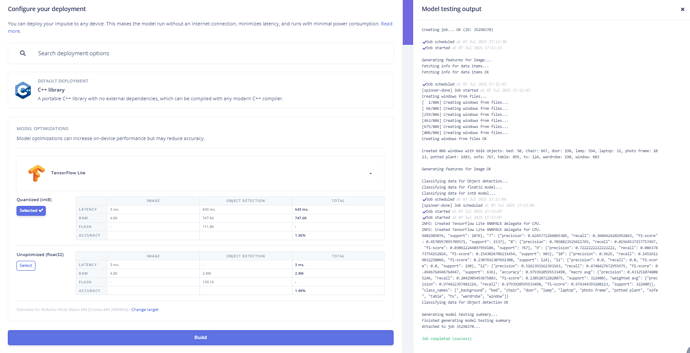

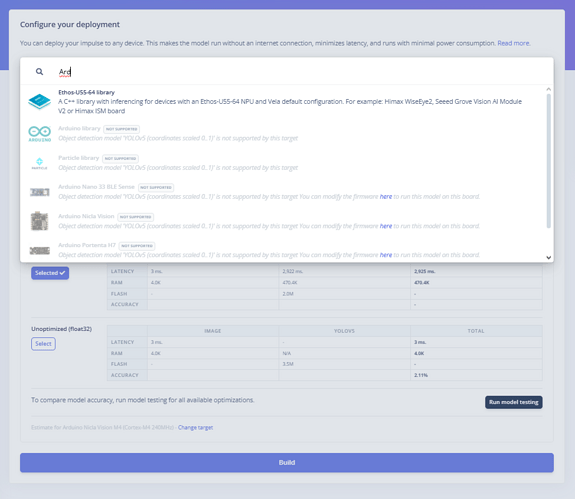

Even though the model is not very accurate, I decided to deploy it to the ESP32S3 Sense. I read that using the EON Compiler can result in errors related to the ESP not using the pseudostatic RAM (PSRAM). But let’s try the compiler first.

I deployed the model as an Arduino library and loaded it into the Arduino IDE. I enabled PSRAM and uploaded the code, but I kept receiving this error message:

CORRUPT HEAP: Bad tail at 0x3c1ea2a4. Expected 0xbaad5678 got 0x00000000

assert failed: multi_heap_free multi_heap_poisoning.c:279 (head != NULL)

Backtrace: 0x40375b85:0x3fcebaf0 0x4037b729:0x3fcebb10 0x40381a3a:0x3fcebb30 0x403806cf:0x3fcebc70 0x403769bf:0x3fcebc90 0x40381a6d:0x3fcebcb0 0x42007d65:0x3fcebcd0 0x4200ba91:0x3fcebcf0 0x4200406d:0x3fcebd10 0x420040ab:0x3fcebe80 0x42004143:0x3fcebea0 0x42004525:0x3fcebf50 0x420045e5:0x3fcebf70 0x4200d88c:0x3fcec070 0x4037c155:0x3fcec090

ELF file SHA256: f2eefe0ac

Rebooting...

ESP-ROM:esp32s3-20210327

Build:Mar 27 2021

rst:0xc (RTC_SW_CPU_RST),boot:0x8 (SPI_FAST_FLASH_BOOT)

Saved PC:0x40378712

SPIWP:0xee

mode:DIO, clock div:1

load:0x3fce2820,len:0x11bc

load:0x403c8700,len:0xc2c

load:0x403cb700,len:0x3158

entry 0x403c88b8

Edge Impulse Inferencing Demo

Camera initialized

I tried searching for this error message, but I could not find any post or blog that exactly matched my situation. I think it is related to memory allocation. Let’s try the TensorFlow Lite compiler instead.

It worked! However, the model still performs poorly. It only reliably detects windows, photo frames, potted plants and lamps. According to the platform, the model should run with a latency of 600 ms, but here the latency is 405 ms, which is really good since my threshold is 5 seconds. That leaves room for a bigger dataset. This is an output example:

597656) [ x: 16, y: 112, width: 8, height: 8 ]

Predictions (DSP: 10 ms., Classification: 405 ms., Anomaly: 0 ms.):

Object detection bounding boxes:

window (0.503906) [ x: 16, y: 104, width: 8, height: 8 ]

window (0.652344) [ x: 16, y: 120, width: 8, height: 16 ]

Predictions (DSP: 10 ms., Classification: 405 ms., Anomaly: 0 ms.):

Object detection bounding boxes:

potted plant (0.519531) [ x: 72, y: 96, width: 16, height: 8 ]

window (0.519531) [ x: 56, y: 112, width: 8, height: 8 ]

window (0.527344) [ x: 24, y: 120, width: 16, height: 16 ]
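To compare runs more easily, the serial output could be parsed and tallied per class. This is a minimal sketch that assumes the detection lines always follow the `label (score) [ x: .., y: .., width: .., height: .. ]` pattern shown above; the regular expression and function name are my own, and lines that do not match (timing lines, truncated lines) are skipped.

```python
import re
from collections import Counter

DETECTION = re.compile(
    r"^(?P<label>.+?) \((?P<score>\d+\.\d+)\) "
    r"\[ x: (?P<x>\d+), y: (?P<y>\d+), "
    r"width: (?P<w>\d+), height: (?P<h>\d+) \]$"
)


def parse_detections(log_text):
    """Return a list of (label, score, x, y, width, height) tuples."""
    detections = []
    for line in log_text.splitlines():
        match = DETECTION.match(line.strip())
        if match is None:
            continue  # timing lines, headers, truncated lines
        detections.append((
            match["label"], float(match["score"]),
            int(match["x"]), int(match["y"]),
            int(match["w"]), int(match["h"]),
        ))
    return detections


sample = """\
Predictions (DSP: 10 ms., Classification: 405 ms., Anomaly: 0 ms.):
Object detection bounding boxes:
window (0.503906) [ x: 16, y: 104, width: 8, height: 8 ]
window (0.652344) [ x: 16, y: 120, width: 8, height: 16 ]
potted plant (0.519531) [ x: 72, y: 96, width: 16, height: 8 ]
"""

detections = parse_detections(sample)
print(Counter(label for label, *_ in detections))
```

Tallying a few minutes of output this way would show which classes never appear on the device, to compare against the confusion matrix from the platform.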

I still do not know why the confusion matrix showed poor performance for the other classes, even with a decent dataset. Any idea why this is happening? What do I need to do to improve it? I tried different settings, but I always ended up with a bad score and a bad confusion matrix.