Failed to upload 1052.jpg An item with this hash already exists (ids: 89520108)

Hello, when I upload data, some of the files report this error and fail to upload. What does this error mean?

Hi @caifan,

That means the image is already present in the Studio and you are trying to upload it again.
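To make this concrete, here is a toy Python model of a hash-based duplicate check. It assumes the check compares a digest of the sample's content rather than its filename, which is why renaming a file does not avoid the error. SHA-256 is used purely as a stand-in for whatever hash Studio actually computes, and `ToyDataset`, `upload`, and its `allow_duplicates` parameter are hypothetical names; this is a sketch of the idea, not Edge Impulse's implementation.

```python
import hashlib


class ToyDataset:
    """Hypothetical in-memory dataset that rejects samples whose bytes it has already seen.

    Models only the idea behind the error message. SHA-256 is an assumed
    stand-in for whatever hash Edge Impulse Studio really uses.
    """

    def __init__(self) -> None:
        self._id_by_hash: dict[str, int] = {}  # content digest -> first sample id
        self._next_id = 1

    def upload(self, filename: str, data: bytes, allow_duplicates: bool = False) -> int:
        # The digest depends only on the bytes, so the filename plays no role.
        digest = hashlib.sha256(data).hexdigest()
        if digest in self._id_by_hash and not allow_duplicates:
            raise ValueError(
                f"Failed to upload {filename}: an item with this hash "
                f"already exists (ids: {self._id_by_hash[digest]})"
            )
        sample_id = self._next_id
        self._next_id += 1
        # Keep the id of the first sample stored with this content.
        self._id_by_hash.setdefault(digest, sample_id)
        return sample_id


if __name__ == "__main__":
    ds = ToyDataset()
    print(ds.upload("1052.jpg", b"\xff\xd8 example bytes"))
    try:
        # Same bytes under a different name: rejected as a duplicate.
        ds.upload("renamed_copy.jpg", b"\xff\xd8 example bytes")
    except ValueError as err:
        print(err)
```

The second upload fails even though the filename differs, because only the content digest is compared.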

Regards,

Clinton

@oduor_c Hello, I clicked the Delete All data button in this project before re-uploading the data. However, some images still cannot be uploaded when I re-upload them.

Do I need to restart the platform?

In addition, a Network Error sometimes occurs.

Hi @caifan,

Could you kindly share your project ID so that I can file a ticket to our infra team?

Thanks,

Clinton

@oduor_c Hello, my project ID is 92434. Now only a few images cannot be uploaded. Thank you very much.

Hi @caifan,

Thanks for providing your project ID. I have filed a ticket on this issue with our infra team and I will get back to you once the issue has been resolved.

Regards,

Clinton

Hi @caifan,

Does your dataset have duplicates?

To work around the hash issue during uploading, you can use the Edge Impulse uploader by opening your terminal/CMD and typing:

edge-impulse-uploader --allow-duplicates path_to_your_folder/*.jpg

Let me know if this helps.

Thanks,

Clinton

Hi Clinton,

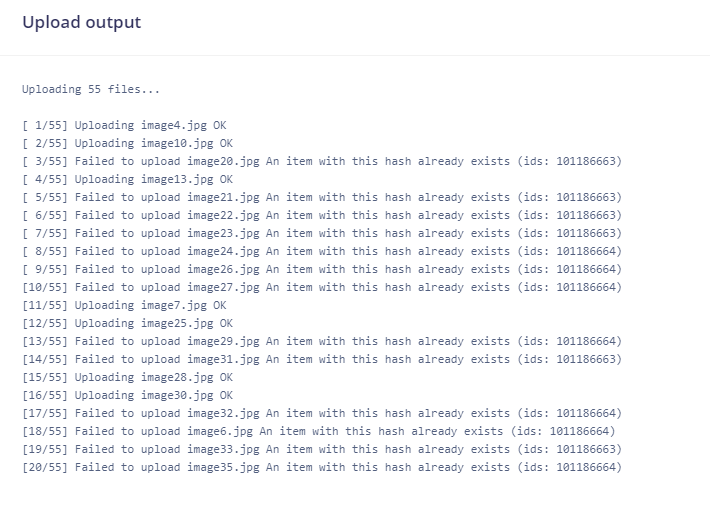

I believe I have the same problem. I get this message “[10/55] Failed to upload imagexx.jpg An item with this hash already exists (ids: 101186664)” even though I deleted all previous data in project 106207. I even deleted the project and created a new one, but it didn’t help!

Any thoughts?

Hi @sinameshksar,

Could you try reuploading using the CLI Uploader by typing:

edge-impulse-uploader --allow-duplicates hash_error/*.jpg

Let me know how it goes.

Thanks,

Clinton

Please, I am also seeing this error. When I uploaded my data, the upload seemed to succeed, but I can’t see the data on the platform. When I tried to re-upload it, it says the hash already exists. My project ID is 95031.

Hi @usmansalehtoro,

The error “An item with this hash already exists” means that you are trying to upload a sample whose data is exactly the same as a sample already in your dataset. You will need to either delete your existing data and upload again, or carefully go through your data to identify the duplicates. As @oduor_c mentioned, you can also get around this with the --allow-duplicates flag.
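If you would rather remove duplicates than pass --allow-duplicates, a small script can list byte-identical files in a folder before you upload. This is a minimal Python sketch that assumes duplicates are detected from file contents. Edge Impulse may compute its hash differently (for example over the processed sample data rather than the raw file bytes), so this only catches byte-identical files and may miss some collisions that Studio reports. The function names and the script name below are hypothetical; this is not part of the Edge Impulse CLI.

```python
import hashlib
import sys
from collections import defaultdict
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file's raw bytes, read in 1 MiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(folder: Path, pattern: str = "*.jpg") -> list[list[Path]]:
    """Group files matching `pattern` in `folder` by content hash.

    Returns only the groups with more than one file, i.e. the byte-identical
    duplicates. Note that glob matching is case-sensitive on most Linux systems.
    """
    groups: dict[str, list[Path]] = defaultdict(list)
    for path in sorted(folder.glob(pattern)):
        if path.is_file():
            groups[sha256_of_file(path)].append(path)
    return [paths for paths in groups.values() if len(paths) > 1]


if __name__ == "__main__":
    target = Path(sys.argv[1]) if len(sys.argv) > 1 else Path(".")
    for group in find_duplicates(target):
        keep, *extra = group
        print(f"keep {keep.name}; duplicates: {', '.join(p.name for p in extra)}")
```

Saved as, say, `find_duplicates.py`, it could be run as `python find_duplicates.py path_to_your_folder`; you would then delete or move the listed duplicates and upload the rest without --allow-duplicates.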