Hi everyone,

I’m having a bit of trouble with one of my custom projects.

I recently made a project which successfully runs real-time voice recognition on the STM32 IoT Discovery board.

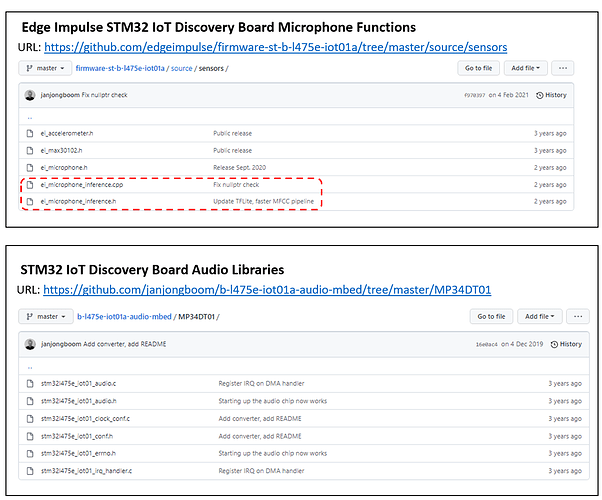

I used libraries and functions from the following sources to get the code to run successfully:

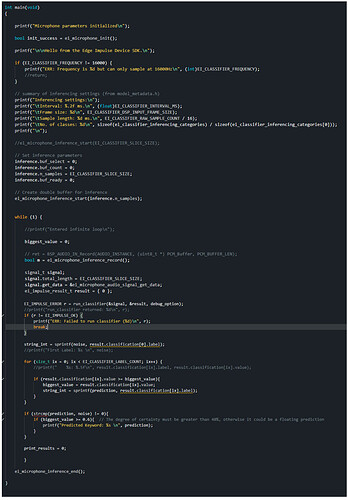

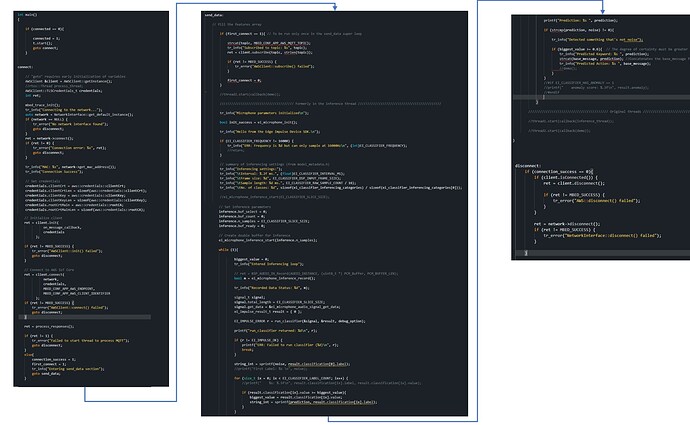

Below is the core component of the main.cpp file for the real-time voice recognition project:

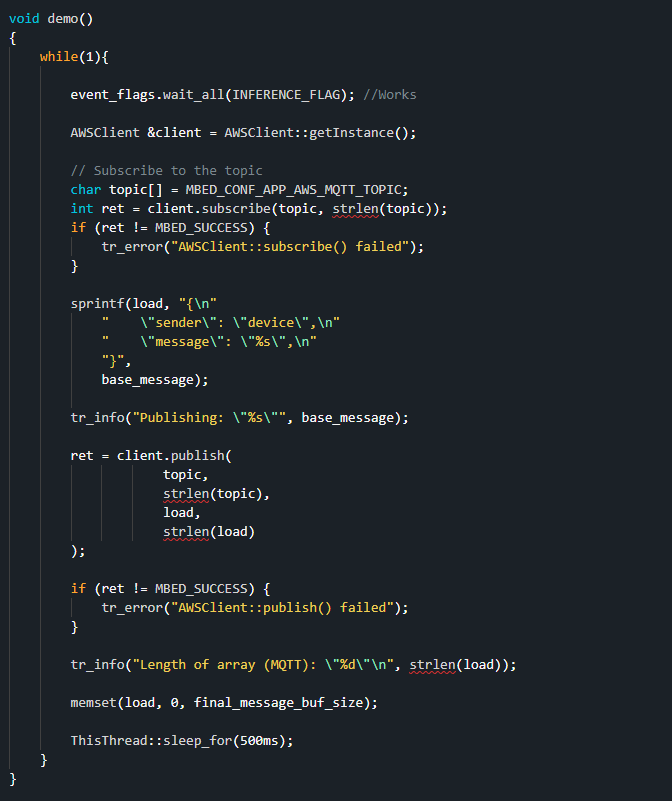

Since it worked as standalone code, I was trying to adapt this project so that I could run two concurrent tasks using RTOS for two different purposes:

Task 1: Runs the main inferencing loop (for voice recognition) in a very similar way to the original voice recognition project.

Task 2: Periodically publishes the result of the inferencing loop to AWS.
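A minimal host-side sketch of how the two tasks could share the latest result, assuming a mutex-protected struct. SharedResult, store_result() and format_payload() are hypothetical names for illustration, not part of the Edge Impulse SDK or the AWS client. On the board, Mbed's rtos::Mutex would presumably take the place of std::mutex.

```cpp
#include <mutex>
#include <string>

// Hypothetical container for the most recent classification result.
struct SharedResult {
    std::mutex lock;             // guards label and score
    std::string label = "none";  // keyword with the highest score
    float score = 0.0f;          // confidence reported for that keyword
};

// Task 1 (inferencing) would call this after each classification.
void store_result(SharedResult &shared, const std::string &label, float score) {
    std::lock_guard<std::mutex> guard(shared.lock);
    shared.label = label;
    shared.score = score;
}

// Task 2 (publishing) would call this periodically to build its message.
// The JSON layout is an assumption, not a format required by AWS.
std::string format_payload(SharedResult &shared) {
    std::lock_guard<std::mutex> guard(shared.lock);
    return "{\"label\":\"" + shared.label + "\",\"score\":" +
           std::to_string(shared.score) + "}";
}
```

Holding the lock only while copying keeps the publishing task from reading a half-updated result without stalling the inferencing loop for long.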

I’ve successfully done a very similar project in the past (only with motion recognition instead of voice recognition): https://github.com/Martin-Biomed/Sending-STM-B-L475-IOT01-Motion-Data-to-AWS

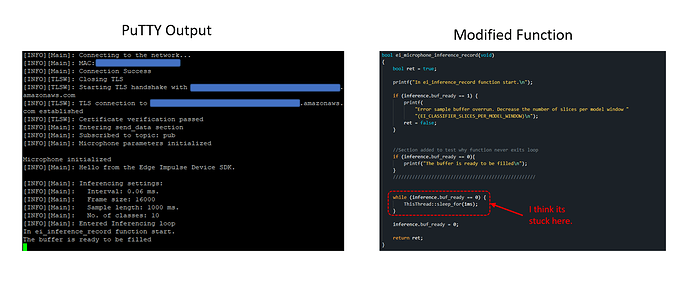

The problem in my code is that the function (ei_microphone_inference_record) seems to be stuck in an endless loop.

Does anyone have any ideas as to why this function is stuck at this point?

Huge thank you in advance for your time

Hi @Martin_08,

I haven’t played with inferencing in a separate thread (yet), so I don’t know how much help I’ll be.

Are you able to do some step-through debugging and register peeking? If so, you might want to put a breakpoint somewhere in audio_buffer_inference_callback() (or whatever you named the function that runs whenever the I2S buffer fills up, e.g. HAL_I2S_RxHalfCpltCallback()) to see if it’s actually being called. That’s the only place where inference.buf_ready can be set to 1, so my first suspicion is that something is preventing your buffer from filling (i.e. I2S/DMA is not actually running).
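If a debugger isn't available, one way to test that suspicion is to give the wait loop a timeout, so a missing callback shows up as an error instead of a hang. Below is a minimal host-side sketch of the idea. buf_ready, simulate_buffer_full() and wait_for_buffer() are hypothetical stand-ins, not the Edge Impulse code, and on Mbed OS the sleep would presumably be ThisThread::sleep_for rather than std::this_thread.

```cpp
#include <atomic>
#include <chrono>
#include <thread>

// Hypothetical stand-in for inference.buf_ready.
// In the real project the flag is set from the audio callback.
static std::atomic<bool> buf_ready{false};

// Hypothetical stand-in for the audio callback reporting a full buffer.
void simulate_buffer_full() {
    buf_ready.store(true);
}

// Waits until buf_ready is set or timeout_ms has elapsed.
// Returns true if a buffer arrived, false if the wait timed out.
bool wait_for_buffer(unsigned timeout_ms) {
    using clock = std::chrono::steady_clock;
    const auto deadline = clock::now() + std::chrono::milliseconds(timeout_ms);
    while (!buf_ready.load()) {
        if (clock::now() >= deadline) {
            return false;  // callback never fired within the time limit
        }
        std::this_thread::sleep_for(std::chrono::milliseconds(1));
    }
    buf_ready.store(false);  // consume the flag before the next window
    return true;
}
```

A false return would point at the audio path (I2S/DMA or the callback hookup) rather than at the classifier.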

Hi Shawn,

I have to admit, I’m having a lot of trouble with debugging using the online Keil Mbed Studio IDE.

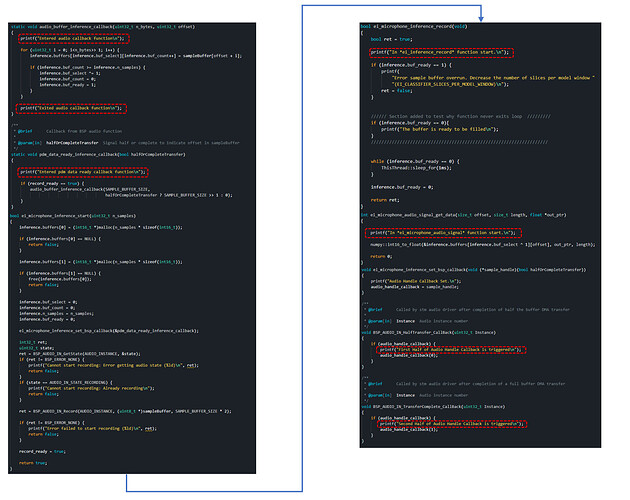

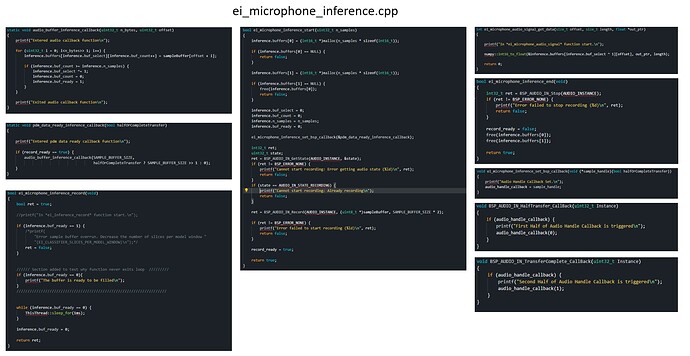

What I’ve tried to do instead is add a bunch of printf statements in the ei_microphone_inference.cpp file to see which functions are being triggered, and in what order. The ei_microphone_inference.cpp file was written by the Edge Impulse team, and I’ve used it to great effect without the AWS Client part in this project: https://github.com/Martin-Biomed/B-L4675-IOT01A-Voice-Keyword-Recognition

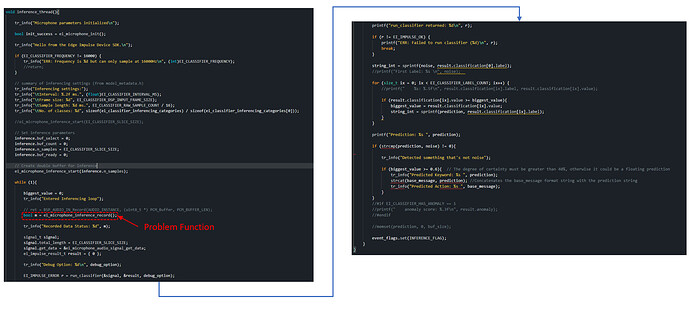

The ei_microphone_inference.cpp commenting is shown below:

I’ve tried doing away with the threading stuff I added, by commenting out the demo() thread, and taking the contents of the voice inferencing (Task 1) and putting them inside the main() loop:

Despite these changes, the PuTTY output has not changed from my original post, which makes me think the AWS client library (mbed-client-for-aws, the AWS IoT SDK port for Mbed OS) is doing something funky that prevents the PDM microphone functions from executing. Just to clarify, none of the printf statements I added to ei_microphone_inference.cpp appear in the PuTTY terminal.

I really have no idea why none of my functions are executing as I expect them to. I’m not sure whether any of this added data has helped in coming up with theories on why the PDM buffers are not filled, but it’s all I can think of at the moment.

Thank you in advance for your support

Hi @Martin_08,

I chatted with a dev. It looks like it is possible to run an impulse in a thread. I don’t know enough about Mbed OS to be of much help here, unfortunately. However, maybe our Zephyr example might give you a starting point for seeing how to run inference in a separate thread: https://github.com/edgeimpulse/firmware-nordic-thingy53/blob/main/src/inference/ei_run_audio_impulse.cpp

Hi Shawn,

I appreciate your guidance, although I’m still stuck with the memory management implications of using the mbed client for AWS along with my microphone inferencing task.

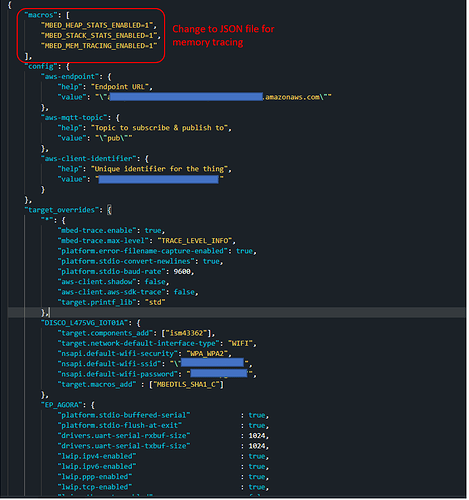

I’ve started checking the execution stats of the threads in my code using the function mentioned here: Tracking memory usage with Mbed OS | Mbed

At the moment I’m not really sure what the threads running in my code are actually doing, but I at least have a pretty good idea of how many threads are running (which I previously wasn’t aware of).

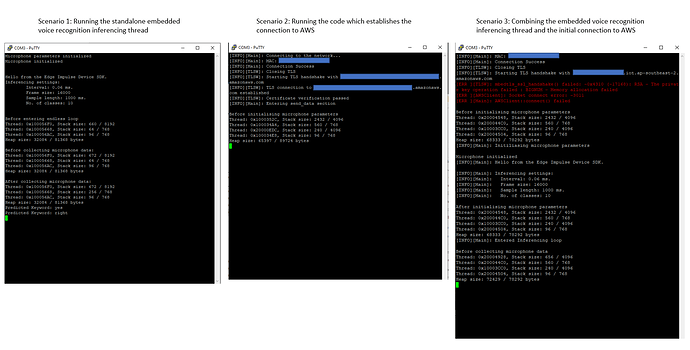

I’ve run the thread stats for three different scenarios:

- Running the standalone embedded voice recognition inferencing thread (successful)

  - The code creates three different threads, which run inside the inferencing superloop. The code successfully recognizes when one of 9 keywords has been spoken.

- Running the code which establishes the connection to AWS (successful)

  - After commenting out all the inferencing code, we see that just before we start setting up the mic parameters, 4 threads are already running.

- Combining the embedded voice recognition inferencing thread and the initial connection to AWS

  - For some reason, the changes I made to the JSON file (which were also included in the other two scenarios) only become a problem in this scenario.

  - These additions to the JSON file (which enable mbed thread stat tracing) cause a socket connection error when trying to verify my certificates, even if I comment out any code in the main.cpp file related to tracking the thread stats.

  - When the code execution reaches the inferencing thread (just before collecting mic data), we can see that there are four threads running.

  - In any case, the main inferencing loop never actually fills the buffers defined in ei_microphone_inference.cpp, which is the main problem I’m trying to solve.

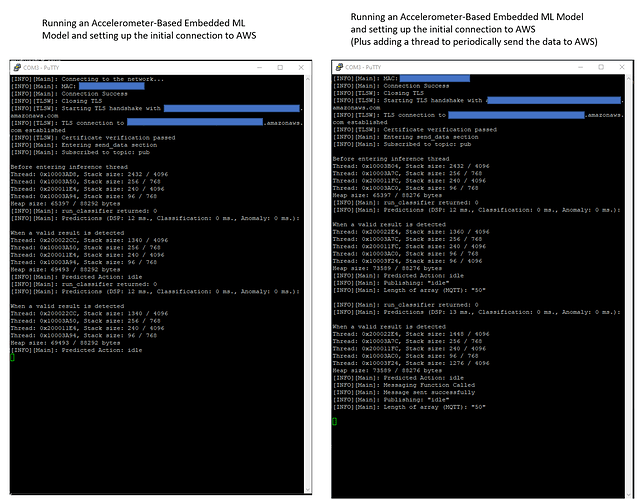

I compared the thread stats of this project to one I’ve previously done, which is very similar.

The project I used for comparison was (https://github.com/Martin-Biomed/Sending-STM-B-L475-IOT01-Motion-Data-to-AWS), modified to cover two scenarios:

- Running an Accelerometer-Based Embedded ML Model and setting up the initial connection to AWS

  - When the code enters the inferencing loop, there are four threads which are running. The code runs successfully.

- Running an Accelerometer-Based Embedded ML Model and setting up the initial connection to AWS (Plus adding a thread to periodically send the data to AWS)

  - When the code enters the inferencing loop, five threads are running because I added one to periodically send the result of the embedded ML model to my AWS server. The code runs successfully.

I made the same modifications to the JSON file for this project for thread memory tracing.

Basically, I still have no idea why one of my projects runs so successfully while the other one has so many problems, seemingly due to memory management.

I’ve tried to be as informative as I can, but does this give you any ideas as to what might be happening?

Turn on an LED or printf something in BSP_AUDIO_IN_TransferComplete_CallBack() to make sure the STM audio driver is communicating correctly with ei_microphone_inference.cpp.

From there, the chain of calls from BSP_AUDIO_IN_TransferComplete_CallBack() looks correct and should end up at audio_buffer_inference_callback(), where the inference.buf_ready flag gets set.
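One caveat with the printf approach: as far as I know, printing from inside an interrupt handler is not reliable on Mbed OS, so a counter that is bumped in the callback and printed from the main loop may be a safer check. A minimal sketch of that pattern follows. transfer_complete_count, on_transfer_complete() and take_callback_count() are hypothetical names, and on_transfer_complete() stands for what would go inside BSP_AUDIO_IN_TransferComplete_CallBack().

```cpp
#include <atomic>
#include <cstdint>

// Number of times the transfer-complete callback has run since the last read.
static std::atomic<uint32_t> transfer_complete_count{0};

// Hypothetical callback body: only interrupt-safe work, no printing.
void on_transfer_complete() {
    transfer_complete_count.fetch_add(1);
}

// Called from thread context (e.g. the main loop).
// Returns how many callbacks ran since the previous call and resets the count.
uint32_t take_callback_count() {
    return transfer_complete_count.exchange(0);
}
```

If the main loop keeps reporting zero, the audio driver is not reaching the callback at all, which narrows the search to the I2S/DMA setup.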

Thank you for the reply.

I have modified the ei_microphone_inference.cpp file to include printf statements after every function call:

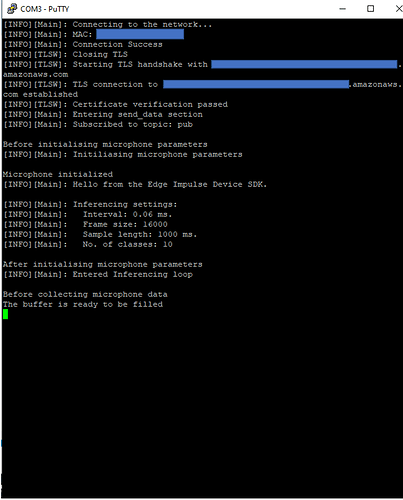

The specific printf output after the BSP_AUDIO_IN_TransferComplete_CallBack() function is called should be: “Second Half of the Audio Handle Callback is Triggered”

As you can see from my PuTTY output (shown below), the printf statement never executes.

I ran my code again (without the previously added thread status functions which were causing issues for the AWS certificate verification). This was the PuTTY output:

There are a lot of functions which aren’t being executed in this cpp file. Anyways I hope the previously-provided thread data along with this PuTTY output can help in painting a picture of what is happening in the code. I’m still lost as to why the functions aren’t being called.

Thank you in advance for your time.

Please update your GitHub project. These screenshots are too hard to follow and are not searchable (maybe I need an AI for that).

Is your audio_handle_callback() similar to get_audio_from_dma_buffer()?

I think you need a function, something like ei_microphone_record(), that calls ei_microphone_inference_set_bsp_callback(&get_audio_from_dma_buffer) to hook the hardware DMA into the EI buffers.
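To illustrate why a missing hook would leave the buffers empty, here is a minimal host-side sketch of the callback-registration pattern. Every name and signature in it (audio_callback_t, set_audio_callback(), driver_transfer_complete(), copy_into_inference_buffer()) is an assumption for illustration and does not reproduce the real Edge Impulse or STM32 BSP API.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical callback signature: a block of samples and its length.
using audio_callback_t = void (*)(const int16_t *samples, size_t count);

static audio_callback_t registered_callback = nullptr;  // set by the application
static bool buf_ready = false;                          // stand-in for inference.buf_ready
static size_t samples_received = 0;                     // total samples forwarded so far

// Hypothetical registration call, playing the role of the "set callback" hook.
void set_audio_callback(audio_callback_t cb) {
    registered_callback = cb;
}

// Stand-in for the driver's transfer-complete handler.
// Samples are forwarded only if a callback has been registered.
void driver_transfer_complete(const int16_t *samples, size_t count) {
    if (registered_callback != nullptr) {
        registered_callback(samples, count);
    }
}

// Stand-in for the function that moves DMA data into the inference buffer.
void copy_into_inference_buffer(const int16_t *samples, size_t count) {
    (void)samples;  // a real version would copy the samples
    samples_received += count;
    buf_ready = true;
}
```

In this sketch the driver can fire as often as it likes, but buf_ready stays false until set_audio_callback(&copy_into_inference_buffer) has been called.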

Heard you and am happy to oblige

I uploaded a ZIP file with all my mbed code for the AWS voice recognition project (at least what I’ve done so far) to my github: https://github.com/Martin-Biomed/Unfinished-AWS_ML_Voice_Data

It’s a public project so you should be able to download it hassle-free. I sanitized the AWS and Wi-Fi credentials, but other than that it’s an exact copy of my current code. Let me know if there’s anything else I can do to help.

I hope that helps.

Unfortunately, I think I’ve reached my limit on trying things to make this project work. I tried both suggestions and they didn’t have an effect. Thank you very much for your time, but I think I might have to admit defeat on this problem.