I am following the tutorial and have deployed the model on the KV260. How can I get only the count of detected objects in main.cpp, and how can I transfer that data to AWS?

Hi @timothy.malche,

Please see this example. In there, you can find the following snippet that shows how to work with bounding box results from object detection:

    #if EI_CLASSIFIER_OBJECT_DETECTION == 1
        for (size_t ix = 0; ix < EI_CLASSIFIER_OBJECT_DETECTION_COUNT; ix++) {
            auto bb = result.bounding_boxes[ix];
            if (bb.value == 0) {
                continue;
            }
            printf("%s (%f) [ x: %u, y: %u, width: %u, height: %u ]\n", bb.label, bb.value, bb.x, bb.y, bb.width, bb.height);
        }
    #else
        ...
    #endif

You should just need to count the number of result.bounding_boxes[] that exist with a bb.value over 0. For example:

    int num_bboxes = 0;
    for (size_t ix = 0; ix < EI_CLASSIFIER_OBJECT_DETECTION_COUNT; ix++) {
        auto bb = result.bounding_boxes[ix];
        if (bb.value == 0) {
            continue;
        }
        num_bboxes++;
    }

After that for loop runs, num_bboxes will contain the number of objects detected (up to the maximum defined by EI_CLASSIFIER_OBJECT_DETECTION_COUNT).
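If a per-label count or a minimum confidence is also useful, the same loop extends naturally. The sketch below is only an illustration: `Box` is a hypothetical stand-in that mirrors just the `label` and `value` fields used above, and `count_by_label` and `min_confidence` are names made up for this example, not part of the SDK.

```cpp
#include <cstddef>
#include <map>
#include <string>

// Hypothetical stand-in for the SDK's bounding-box struct; only the two
// fields used in this thread (label, value) are mirrored here.
struct Box {
    const char *label;
    float value;
};

// Count boxes per label, skipping empty slots (value == 0) and any box
// whose confidence is below min_confidence.
std::map<std::string, int> count_by_label(const Box *boxes, size_t n, float min_confidence) {
    std::map<std::string, int> counts;
    for (size_t ix = 0; ix < n; ix++) {
        if (boxes[ix].value <= 0.0f || boxes[ix].value < min_confidence) {
            continue;
        }
        counts[boxes[ix].label]++;
    }
    return counts;
}
```

In the real application you would presumably pass `result.bounding_boxes` and `EI_CLASSIFIER_OBJECT_DETECTION_COUNT` (adapting the struct type), then sum the map values if you only need the total.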

I’m not sure what kind of data you wish to send to AWS. It will depend on what service you are using and what kind of connection you have. I recommend starting with some AWS tutorials (such as this one) to see how to log IoT data to AWS.

Hi @shawn_edgeimpulse, thanks for your help. It is working. Now my question is: how can I run inference continuously and feed video data to input_buff[]? Thanks

Hi @timothy.malche,

Continuous inference is trickier, as run_classifier() is blocking (whenever you call it, your main thread can’t do anything until that function returns). I recommend using run_classifier_continuous() to do continuous inference, which will optimize some of the feature generation and collection for you.

There are a few ways to do continuous inference:

- Use a dual-core processor where one core captures data and the other performs inference with run_classifier_continuous()
- Use multiple threads: one high-priority thread captures data and fills your buffer and the other, lower-priority thread runs run_classifier_continuous() in the background
- Use hardware timer interrupts and DMA to fill a double buffer at a set interval while run_classifier_continuous() runs in the background

These require some intermediate/advanced knowledge of embedded systems to work with multiple threads and/or interrupts.
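To make the multi-thread option a bit more concrete, here is a rough sketch of a mutex-guarded frame handoff between a capture thread and an inference thread. Everything in it (`FrameHandoff`, `publish`, `take`, `close`) is a hypothetical name for illustration, not an Edge Impulse API. Note that standard C++ threads have no notion of priority, so setting thread priorities would need platform-specific calls that are not shown here.

```cpp
#include <condition_variable>
#include <mutex>
#include <utility>
#include <vector>

// Two-slot handoff between a capture thread (producer) and an inference
// thread (consumer). All names here are hypothetical, not Edge Impulse APIs.
class FrameHandoff {
public:
    // Producer: publish a freshly captured frame, replacing any unread one
    // so the consumer always sees the newest data.
    void publish(std::vector<float> frame) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            pending_ = std::move(frame);
            has_pending_ = true;
        }
        ready_.notify_one();
    }

    // Producer: signal that no more frames will arrive.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_one();
    }

    // Consumer: block until a frame is available, then swap it into `out`.
    // Returns false once the handoff is closed and no frame is left.
    bool take(std::vector<float> &out) {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return has_pending_ || closed_; });
        if (!has_pending_) {
            return false;
        }
        out.swap(pending_);
        has_pending_ = false;
        return true;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::vector<float> pending_;
    bool has_pending_ = false;
    bool closed_ = false;
};
```

The capture thread would call `publish()` each time it fills a buffer, while the inference thread loops on `take()` and runs the classifier on whatever it receives. Overwriting an unread frame is a deliberate choice in this sketch: if inference is slower than capture, stale frames are dropped instead of queued.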

Hi @shawn_edgeimpulse, thanks for the information. I am able to run the object detection model locally on the Kria KV260 using the edge-impulse-linux-runner command, much like on the RPi as shown by @janjongboom in the tutorial. I can access the live stream in a web browser. Now I want to customize this to count objects and send the count to a cloud server over MQTT, while the output is still displayed in a web browser locally on the KV260. For this I need source code that works the same way as edge-impulse-linux-runner. Where can I get it? Thanks!

Hi @timothy.malche,

Apologies–I misunderstood the problem. You probably do not need run_classifier_continuous() for object detection. If you can capture one image and call run_classifier(), you should be able to put that in a while(1) {...} loop to continuously capture images from your camera and perform inference on each frame. Your FPS will be limited by whatever your camera can capture and the speed of inference.

In each iteration of the while loop, you can count the number of objects seen and send that information to your MQTT server.
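As a rough sketch of that loop structure: the two callbacks below are hypothetical placeholders, one for "capture a frame, run run_classifier(), and count the boxes" and one for whatever publish call your MQTT client library provides. The JSON field names ("frame", "count") are also just assumptions for illustration.

```cpp
#include <cstdio>
#include <functional>
#include <string>

// Build a small JSON payload carrying the per-frame object count.
// The field names ("frame", "count") are illustrative assumptions.
std::string make_count_payload(unsigned long frame_index, int num_objects) {
    char buf[64];
    std::snprintf(buf, sizeof(buf), "{\"frame\":%lu,\"count\":%d}", frame_index, num_objects);
    return std::string(buf);
}

// Run capture -> inference -> publish for up to max_frames iterations.
// count_objects_in_next_frame: hypothetical callback that captures one frame,
//   runs inference, and returns the object count (negative on failure).
// publish: hypothetical callback wrapping your MQTT client's publish call.
void run_detection_loop(unsigned long max_frames,
                        const std::function<int()> &count_objects_in_next_frame,
                        const std::function<void(const std::string &)> &publish) {
    for (unsigned long frame = 0; frame < max_frames; frame++) {
        int count = count_objects_in_next_frame();
        if (count < 0) {
            break;  // treat a negative count as a capture or inference failure
        }
        publish(make_count_payload(frame, count));
    }
}
```

The loop is bounded by `max_frames` only so the sketch can terminate; in a real application it would be the while(1) loop described above.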

Here is an example that demonstrates continuous object detection with C++: https://github.com/edgeimpulse/example-standalone-inferencing-linux/blob/master/source/camera.cpp

Hi @shawn_edgeimpulse thanks. Yes, I have also located the source. I’ll try it. Just one thing, will it compile on KV260 with APP_CAMERA=1 make -j ? Thanks

Hi @timothy.malche,

I honestly do not know, as I have never used a KV260. Assuming that you have the correct build environment set up (e.g. GNU Make, gcc, and g++) and that these tools will run on the KV260, it should work. That command looks correct, so I’d say give it a shot!

Hey @shawn_edgeimpulse, I have installed Ubuntu in VirtualBox and am using the example-standalone-inferencing-linux example. I cloned the repository and initialized the submodules:

$ git clone https://github.com/edgeimpulse/example-standalone-inferencing-linux

$ cd example-standalone-inferencing-linux && git submodule update --init --recursive

then installed libraries

$ sudo apt install libasound2

$ sh build-opencv-linux.sh

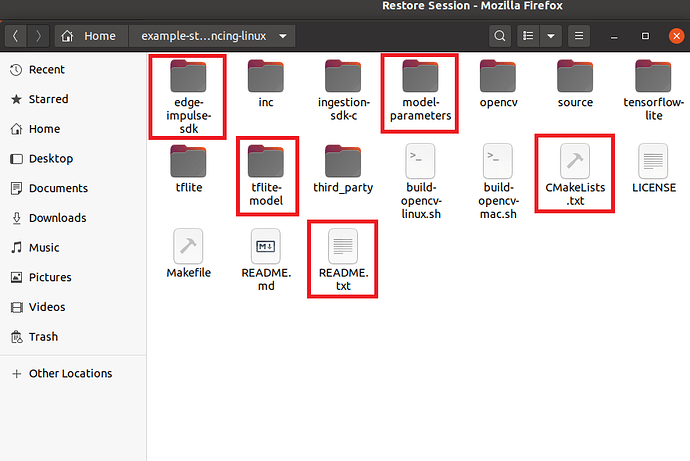

I trained an impulse in EI for object detection and copied the model to the root of the example-standalone-inferencing-linux folder, as follows

and executed the command

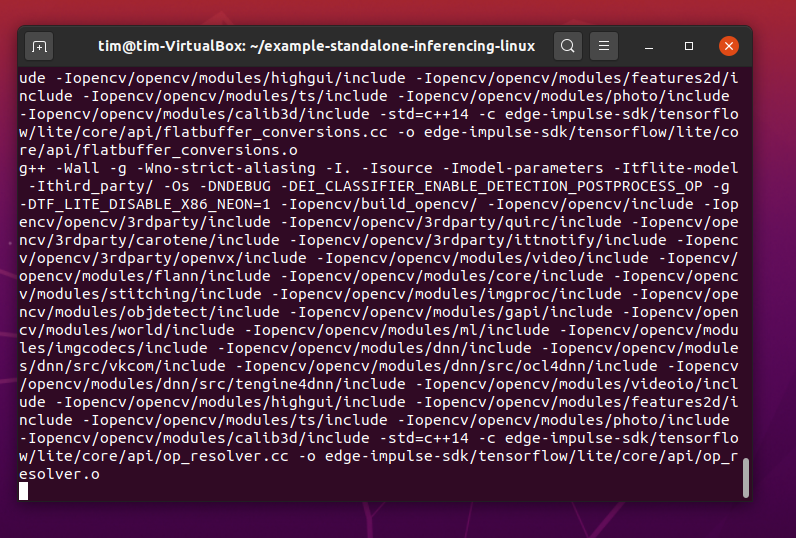

$ APP_CAMERA=1 make -j

but it always gets stuck at the following point, and sometimes a Failed message is displayed. What is the reason?

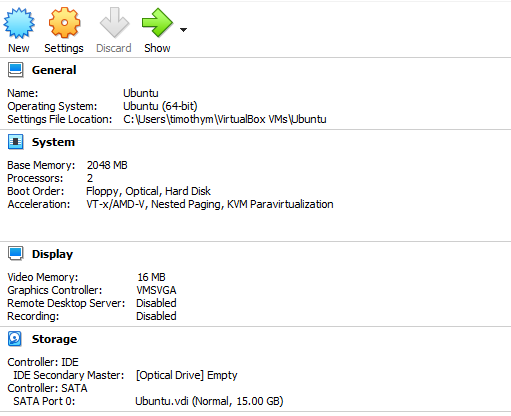

That looks like some memory issue. How much RAM/CPU cores have you allocated to your VM?

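You can also try APP_CAMERA=1 make -j2 to limit the build to 2 parallel jobs. A bare -j puts no limit on the number of jobs make runs at once, which can exhaust the VM's memory.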

Aurelien

Thanks @aurel, I allocated 2 cores and 2048 MB of RAM to the VM, then ran APP_CAMERA=1 make -j2, and it worked.

Now I want to use an MQTT client library in main.cpp. Could you please suggest one? Thanks

I have successfully built the object detection model for the Kria KV260 with the Eclipse Paho MQTT library. Now I am facing one issue. When I run the application with ./build/camera /dev/video0, it detects and counts the objects in front of the camera. But whenever I change the objects in front of the camera (remove some or add more), the old inferencing results are still displayed, so I have to re-run the application to get correct results. Why does this happen, and how can I solve it? Kindly help me urgently.

Still waiting for a response to the above. Also, how do I enable object detection in the live stream using the C++ source?