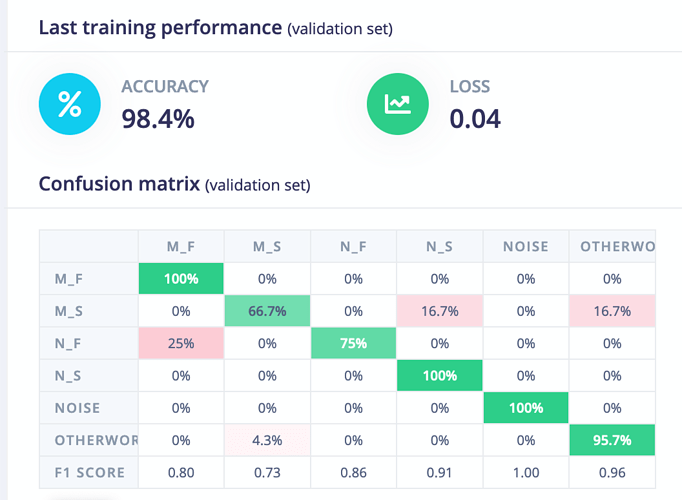

What is the threshold being used to generate the confusion matrix output, accuracy and recall metrics?


In model testing, I think the default is 0.6.

When it comes to training, I don’t know.

Hmm… it would be good if the product team could confirm.


Hi @msalexms,

For validation data, whichever class has the highest probability wins. For testing data, we set the threshold to a default of 0.6, which you can change via Set confidence thresholds on the Model testing page.
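To illustrate why the same model can report different numbers on the two pages, here is a minimal Python sketch of the two rules as described above. It is not the product's implementation. The probability inputs, the inclusive `>=` comparison, and the hypothetical `"uncertain"` label for samples whose top probability falls below the threshold are all assumptions, as is the made-up example data.

```python
from typing import Dict, Sequence

# Hypothetical label for samples whose top probability is below the threshold.
# Assumption: the answer above does not say how such samples are counted.
UNCERTAIN = "uncertain"


def predict_argmax(probs: Sequence[float], labels: Sequence[str]) -> str:
    """Validation rule as described: the class with the highest probability wins."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best]


def predict_threshold(probs: Sequence[float], labels: Sequence[str],
                      threshold: float = 0.6) -> str:
    """Testing rule: keep the top class only if its probability reaches the threshold.

    Assumption: the comparison is inclusive (>=); the answer only gives 0.6.
    """
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best] if probs[best] >= threshold else UNCERTAIN


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     labels: Sequence[str]) -> Dict[str, Dict[str, int]]:
    """Rows are true classes; columns are predicted classes plus UNCERTAIN."""
    columns = list(labels) + [UNCERTAIN]
    matrix = {t: {p: 0 for p in columns} for t in labels}
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of samples whose prediction matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def recall(y_true: Sequence[str], y_pred: Sequence[str], label: str) -> float:
    """Of the samples truly in `label`, the fraction predicted as `label`."""
    preds_for_label = [p for t, p in zip(y_true, y_pred) if t == label]
    if not preds_for_label:
        return 0.0
    return sum(p == label for p in preds_for_label) / len(preds_for_label)


# Made-up example data: two classes, four samples.
labels = ["idle", "wave"]
probs = [[0.90, 0.10], [0.55, 0.45], [0.30, 0.70], [0.45, 0.55]]
y_true = ["idle", "idle", "wave", "wave"]

argmax_preds = [predict_argmax(p, labels) for p in probs]
threshold_preds = [predict_threshold(p, labels) for p in probs]

print(accuracy(y_true, argmax_preds))           # 1.0
print(accuracy(y_true, threshold_preds))        # 0.5
print(recall(y_true, threshold_preds, "idle"))  # 0.5
print(confusion_matrix(y_true, threshold_preds, labels))
```

Under these assumptions, the highest-probability rule assigns a class to every sample, while the 0.6 threshold leaves the two samples with a top probability of 0.55 unclassified, so accuracy and recall drop even though the underlying probabilities are identical.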