For some reason, the last part of the guide, ‘Seeing the output’, does not work for me.

I’m using PuTTY, and a similar setup with it gives me console output when I update the firmware.

- Are you setting the correct baud rate? (Try both 115,200 and 9,600.)

- Have you configured the right UART peripheral, and are you writing to that peripheral?

- Can you print anything from your application and get output via the normal STM32 HAL functions?

Got this working. The STM IDE had set the UART configuration to the wrong pins, so I had to force it to use the correct ones.

My next question: how can I gather the raw data directly from the hardware so that I don’t have to copy and paste it from the cloud?

Here’s something that might help. It was added a few weeks ago, so you might have missed it: https://docs.edgeimpulse.com/docs/cli-data-forwarder#classifying-data
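For the data forwarder, the device only has to print its sensor readings over serial, one reading per line, with the values separated by commas (tabs or spaces also work). Below is a minimal sketch of formatting one such line for a 3-axis sensor; the helper name `format_forwarder_line` and the two-decimal precision are illustrative assumptions, not part of any Edge Impulse API.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper: formats one 3-axis reading as a single comma-separated
// line terminated by "\r\n", the shape of output the data forwarder reads.
std::string format_forwarder_line(float x, float y, float z) {
    char buf[64];
    // %.2f keeps lines short; pick the precision your sensor actually needs.
    int n = std::snprintf(buf, sizeof(buf), "%.2f,%.2f,%.2f\r\n", x, y, z);
    assert(n > 0 && static_cast<std::size_t>(n) < sizeof(buf));
    return std::string(buf, static_cast<std::size_t>(n));
}
```

On the target you would write the returned string to the UART once per sample, at a steady rate.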

Check the Mbed OS example and use its code to gather raw features from the sensor data, so you don’t need to copy and paste them from the Edge Impulse Studio.

No, I did not. I used the C++ libraries instead.

I think I will do the same until they find a solution!

Hi,

I tried the ei-keyword-spotting tutorial from @ShawnHymel with a NUCLEO-L476RG and an SPH0645 mic, but I’m running into a problem (ERROR: audio buffer overrun).

I measured the inference execution time and it’s about 480 ms, which I think is too long if the sampling rate is 32 kHz and the buffer size is 6400 samples (but I probably didn’t understand the flow exactly).

Do you have any suggestions for solving it?

Thank you so much for the tutorial and your work!

I think 32 kHz is too fast. The example I use is 16 kHz, which is what Edge Impulse uses by default. Even though my I2S mic samples at 32 kHz, I drop every other sample to make it 16 kHz. I know, I should be using a low-pass filter to avoid aliasing if I’m down-sampling, but that would take what little resources I have, so I just have to assume that I won’t encounter frequencies above 8 kHz (as my application is for vocal range anyway).
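The down-sampling described above (keep every other sample, no low-pass filter) can be sketched as follows. The function name and signature are illustrative, not taken from the example project; as noted above, skipping the anti-aliasing filter means anything above 8 kHz folds back into the 16 kHz output.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Naive 2:1 decimation (e.g. 32 kHz -> 16 kHz) by keeping every other sample.
// No anti-aliasing low-pass filter is applied, so input content above the new
// Nyquist frequency (8 kHz here) will alias into the output.
// Returns the number of samples written to `out`.
std::size_t decimate_by_two(const std::int16_t *in, std::size_t in_len,
                            std::int16_t *out, std::size_t out_cap) {
    const std::size_t n = (in_len + 1) / 2;  // keeps in[0], in[2], in[4], ...
    assert(n <= out_cap);
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = in[2 * i];
    }
    return n;
}
```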

If you look for the audio_buffer_inference_callback() function in my main.cpp of the L476 example, you can see where I drop samples and convert the 24-bit audio to 16-bit audio.
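The 24-bit to 16-bit step amounts to dropping the 8 least significant bits. This is a minimal sketch, not the code from main.cpp: it assumes each sample has already been assembled into a right-aligned, sign-extended `int32_t`. How the I2S peripheral actually packs the SPH0645 data into the buffer depends on your configuration, so check that before reusing the shift amount.

```cpp
#include <cassert>
#include <cstdint>

// Converts one 24-bit PCM sample to 16-bit PCM by dropping its 8 least
// significant bits. Assumes the sample is right-aligned and sign-extended in
// an int32_t (range -8388608..8388607). If your samples are left-justified in
// a 32-bit word instead, shift by 16 rather than 8.
inline std::int16_t pcm24_to_pcm16(std::int32_t sample24) {
    assert(sample24 >= -8388608 && sample24 <= 8388607);
    // Right-shifting a negative value keeps the sign: guaranteed since C++20,
    // and documented GCC behavior for earlier standards.
    return static_cast<std::int16_t>(sample24 >> 8);
}
```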

6400 is the size of my I2S buffer. Every time half of that is filled up, it calls the audio_buffer_inference_callback() function. Here, the audio samples are converted to 16-bit PCM and 16 kHz and stored in one of the inference.buffers (it’s a double buffer). The size of that buffer is EI_CLASSIFIER_SLICE_SIZE.
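A stripped-down model of that double buffer is sketched below. It is not the example's `inference` struct: `kSliceSize` is a hypothetical stand-in for EI_CLASSIFIER_SLICE_SIZE, and the overrun flag illustrates one way such an error can arise, namely the callback filling a second buffer before the main loop has consumed the first.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for EI_CLASSIFIER_SLICE_SIZE (4000 samples would be
// 250 ms of audio at 16 kHz).
constexpr std::size_t kSliceSize = 4000;

// Minimal double buffer: the audio callback fills one buffer while the main
// loop runs inference on the other. On a real MCU the shared fields would also
// need to be volatile or otherwise protected, since push() runs in an ISR.
struct DoubleBuffer {
    std::int16_t buffers[2][kSliceSize];
    std::size_t write_buf = 0;  // index of the buffer currently being filled
    std::size_t count = 0;      // samples written into buffers[write_buf]
    bool ready = false;         // a full buffer is waiting for inference
    bool overrun = false;       // a buffer filled before the last was taken

    // Called from the audio callback with converted 16 kHz, 16-bit samples.
    void push(const std::int16_t *samples, std::size_t n) {
        assert(samples != nullptr || n == 0);
        for (std::size_t i = 0; i < n; ++i) {
            buffers[write_buf][count++] = samples[i];
            if (count == kSliceSize) {
                if (ready) overrun = true;  // consumer fell behind
                write_buf ^= 1;             // switch to the other buffer
                count = 0;
                ready = true;
            }
        }
    }

    // Called from the main loop; returns the most recently filled buffer,
    // or nullptr if none is ready yet.
    const std::int16_t *take() {
        if (!ready) return nullptr;
        ready = false;
        return buffers[write_buf ^ 1];
    }
};
```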

One of these inference.buffers is sent to run_classifier_continuous() each time it’s filled up. That function should take no more than 250 ms to perform feature extraction (MFCCs) and inference, as it needs to meet the goal of performing inference 4 times per second.
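The timing budget above is simple arithmetic. This sketch assumes a 1000 ms model window (an assumption, though typical for keyword spotting) split into 4 slices. It shows why roughly 480 ms per inference cannot keep up: each slice only provides 250 ms of new audio.

```cpp
// Back-of-the-envelope real-time check for continuous inference.
// Assumes a model window (e.g. 1000 ms) split into equal slices; with 4 slices
// per window, inference has to run 4 times per second.

// Time available for one slice's feature extraction + inference, in ms.
constexpr double slice_budget_ms(double window_ms, int slices_per_window) {
    return window_ms / slices_per_window;
}

// Audio samples per slice (roughly the role EI_CLASSIFIER_SLICE_SIZE plays).
constexpr long slice_samples(long sample_rate_hz, long window_ms,
                             int slices_per_window) {
    return sample_rate_hz * window_ms / 1000 / slices_per_window;
}

// True if DSP + inference takes no more than the slice budget; otherwise new
// audio arrives faster than it is processed and the buffers eventually overrun.
constexpr bool meets_realtime(double dsp_plus_inference_ms, double window_ms,
                              int slices_per_window) {
    return dsp_plus_inference_ms <= slice_budget_ms(window_ms, slices_per_window);
}

static_assert(slice_budget_ms(1000.0, 4) == 250.0, "250 ms per slice");
static_assert(slice_samples(16000, 1000, 4) == 4000, "4000 samples at 16 kHz");
static_assert(!meets_realtime(480.0, 1000.0, 4), "480 ms cannot keep up");
```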

Hope that helps!

Thank you so much for your support!

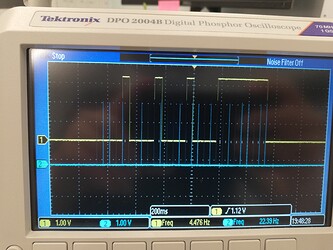

I did some debugging with an oscilloscope and found some strange behavior.

Inference seems to run properly the first three times, but after that it takes too long.

I toggled one pin up and down around the “run_classifier_continuous” call (the YELLOW signal) and another pin up and down around the half-complete and complete callbacks (the BLUE signal).
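For this kind of scope measurement, a small RAII guard keeps the pin toggling tidy: the pin goes high when the guard is created and low when the enclosing block exits, so the pulse width equals the time spent inside the block. This is a sketch; `PinPulse` and the pin-writer hook are hypothetical names, and on an STM32 the hook could wrap `HAL_GPIO_WritePin()`.

```cpp
#include <cassert>

// Signature of a hypothetical pin-writer hook: true = drive high, false = low.
using PinWriter = void (*)(bool high);

// RAII helper for oscilloscope timing: drives a debug pin high on construction
// and low on destruction, so the pulse width on the scope equals the time
// spent inside the enclosing block.
class PinPulse {
public:
    explicit PinPulse(PinWriter write) : write_(write) {
        assert(write_ != nullptr);
        write_(true);
    }
    ~PinPulse() { write_(false); }
    PinPulse(const PinPulse &) = delete;
    PinPulse &operator=(const PinPulse &) = delete;

private:
    PinWriter write_;
};

// Example use on the target (DEBUG_GPIO_Port / DEBUG_Pin are hypothetical):
//   static void yellow_pin(bool high) {
//       HAL_GPIO_WritePin(DEBUG_GPIO_Port, DEBUG_Pin,
//                         high ? GPIO_PIN_SET : GPIO_PIN_RESET);
//   }
//   {
//       PinPulse pulse(yellow_pin);
//       run_classifier_continuous(&signal, &result, false);
//   }
```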

The project I tried is the one downloaded from the repository (same board and mic), compiled with timing optimization.

If there’s any other information I can give you, let me know.

Thank you so much

What is the “timing optimization” option? It’s been a couple of months since I made that project, so I may not remember. Is there a particular checkbox in preferences or flag you set that’s different from my example project?

Good morning,

I did two tests:

- The first test used all the same preferences you set, but the behaviour was the same as in the image I posted.

- The second used “timing optimization” (found under Properties -> C/C++ Build -> Settings -> MCU GCC Compiler -> Optimization), with the same result.

Do you have any other suggestions?

Thank you so much

I tried it now, but it still overruns.

@Fede99 So a full frame of inference costs 480 ms. When processing only half of that, it should be reduced even further, so I’m surprised by that. I’d follow Shawn’s suggestion and downsample to 16 kHz on the device.

Hello Edge Team,

I followed the instructions to include the *.pack library in STM32CubeIDE, but after compiling the C++ project I got the compiler error below:

./Middlewares/Third_Party/EdgeImpulse_AudioPCBM_MachineLearning/edgeimpulse/edge-impulse-sdk/dsp/spectral/processing.hpp:26:10: fatal error: vector: No such file or directory

26 | #include <vector>

Same for the other #include.

It looks like these two header files can’t be found via the include path below, from the g++ include settings:

…/Middlewares/Third_Party/EdgeImpulse_AudioPCBM_MachineLearning/edgeimpulse/

Kindly help me resolve this compiler error: the header files cannot be located in the above path.

-Vikas B

It is selected as:

![]()

Any updates or suggestions for resolving the compiler issue?
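`<vector>` is a C++ standard library header, so "fatal error: vector: No such file or directory" usually means a file that includes the SDK headers is being compiled as C rather than C++ (for example, main.c in a project generated as C). Assuming that is the cause here, one common workaround is to keep every Edge Impulse include inside a .cpp file and expose a plain C function to the rest of the project. The sketch below shows that pattern; `ei_wrapper_classify` is a hypothetical name, and its body is a placeholder rather than real classification code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sketch of a C-callable shim, e.g. ei_wrapper.cpp (compiled with g++). Only
// this file would include the Edge Impulse SDK headers, which pull in
// C++-only headers such as <vector>. C files like main.c would include just a
// small header with a C-compatible declaration:
//
//   #include <stddef.h>
//   #ifdef __cplusplus
//   extern "C" {
//   #endif
//   int ei_wrapper_classify(const float *features, size_t len);
//   #ifdef __cplusplus
//   }
//   #endif

extern "C" int ei_wrapper_classify(const float *features, std::size_t len) {
    assert(features != nullptr || len == 0);
    // Placeholder body: a real version would wrap `features` in a signal_t and
    // call run_classifier(). Copying into a std::vector here only shows that
    // C++-only headers are fine on this side of the extern "C" boundary.
    std::vector<float> buf(features, features + len);
    return buf.empty() ? -1 : 0;
}
```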