Hello everyone!

I’m working on a university project where I’m trying to detect bees on flowers with an ESP-EYE, and for this I’d like to use FOMO object detection. I collected images of pollinators on different flowers and labeled them. However, the dataset currently contains only about 300 pictures, and the model’s accuracy is about 75%. My goal is to reduce the false positives and increase the accuracy.

I got better results using RGB than grayscale, and I’m currently using an 82% / 18% train/test split with ~250 images.

My best results were with these parameters:

- Training cycles: 125
- Learning rate: 0.015
- Validation set size: 20%
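For context, here is roughly how many images each part of that split ends up with. This is a minimal sketch, assuming (as the setting’s name suggests) that the 20% validation set is carved out of the training split, and taking the ~300 figure from the first paragraph. `split_counts` is a hypothetical helper, and the platform may round differently.

```python
def split_counts(n_images: int, test_frac: float, val_frac: float) -> dict:
    """Approximate image counts for a train/test split followed by a
    validation split taken from the training portion (rounding is an assumption)."""
    n_test = round(n_images * test_frac)
    n_train_total = n_images - n_test
    n_val = round(n_train_total * val_frac)
    n_fit = n_train_total - n_val  # images the optimizer actually learns from
    return {"test": n_test, "train_total": n_train_total,
            "validation": n_val, "fit": n_fit}

print(split_counts(300, test_frac=0.18, val_frac=0.20))
```

With about 300 images, fewer than 200 are left for fitting, which helps explain why small changes in the data can move the scores noticeably.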

However, due to the 20-minute training time limit, I’m not able to increase the number of training cycles or the size of the dataset.
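One way to plan around the limit is to time a short run and work out how many training cycles fit. A minimal sketch: the per-cycle time and fixed overhead below are hypothetical numbers, and the helper names are made up; it also assumes epoch time grows linearly with the number of training images.

```python
import math

def max_training_cycles(budget_s: float, sec_per_cycle: float,
                        overhead_s: float = 0.0) -> int:
    """Largest whole number of training cycles (epochs) that fits in the budget."""
    if sec_per_cycle <= 0:
        raise ValueError("sec_per_cycle must be positive")
    usable = budget_s - overhead_s
    return max(0, math.floor(usable / sec_per_cycle))

def scaled_sec_per_cycle(sec_per_cycle: float, n_old: int, n_new: int) -> float:
    """Assumption: epoch time scales linearly with the number of training images."""
    return sec_per_cycle * n_new / n_old

budget = 20 * 60  # 20-minute limit, in seconds
# Hypothetical: 8 s per cycle on 250 images, 60 s of fixed overhead.
print(max_training_cycles(budget, 8.0, overhead_s=60))
print(max_training_cycles(budget, scaled_sec_per_cycle(8.0, 250, 300), overhead_s=60))
```

The same arithmetic shows the trade-off in this thread: more images or a wider model raise the time per cycle, so fewer cycles fit in the budget.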

Does anyone have experience with detecting such small objects in complex images?

Thanks in advance!

The sweet spot for FOMO is detecting objects roughly the size of one output cell, where the output grid is 1/8th of the input resolution. However, I did read somewhere that this is customizable, though I could not find the link. This article explains output cells.
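To make the 1/8 ratio concrete: each output cell covers an 8×8 pixel patch of the input. The sketch below assumes a 96×96 input (a hypothetical choice, the resolution isn’t stated in this thread), and `fomo_grid` / `cell_for_point` are made-up helpers, not part of any Edge Impulse API.

```python
def fomo_grid(width: int, height: int, stride: int = 8) -> tuple[int, int]:
    """Output grid size when the output is 1/stride of the input resolution."""
    return width // stride, height // stride

def cell_for_point(x: float, y: float, stride: int = 8) -> tuple[int, int]:
    """Output cell (column, row) that contains an input pixel coordinate."""
    return int(x // stride), int(y // stride)

print(fomo_grid(96, 96))        # (12, 12)
print(cell_for_point(50, 10))   # (6, 1)
```

A bee that is only a few pixels wide in the input therefore covers a fraction of a single cell, which is why object size relative to the cell matters so much.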

There are some minor customisations available (see FOMO: Object detection for constrained devices - Edge Impulse Documentation), including some changes around output resolution. The cut point in particular, though, can currently only give a coarser grid, not a finer one, sorry.
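Why a coarser grid hurts for small, close-together objects: with a larger stride, nearby objects can fall into the same cell and be reported as one. A minimal sketch with two hypothetical bee centroids 8 px apart; `occupied_cells` is a made-up helper.

```python
def occupied_cells(points, stride):
    """Set of output cells that contain at least one object centroid."""
    return {(int(x // stride), int(y // stride)) for x, y in points}

# Two hypothetical bee centroids, 8 px apart in the input image.
bees = [(20, 20), (28, 20)]
for stride in (8, 16):
    print(stride, len(occupied_cells(bees, stride)))
# 8 2
# 16 1
```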

Depending on the complexity of your background, I expect you’ll get a bump by using the alpha=0.35 variant of MobileNet and/or upping the number of filters from 32 to, say, 64+ (see the ‘FOMO classifier capacity’ section of that same page).
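To get a feel for what extra filters cost, here is a rough parameter count for the classifier head. This is a sketch under assumptions: it treats the head as a stack of 1×1 convolutions followed by a final 1×1 convolution with one output per class plus background, ignores any batch-norm layers, and uses a hypothetical 96 channels at the cut point. `head_params` is a made-up helper, not Edge Impulse code.

```python
def conv1x1_params(c_in: int, c_out: int) -> int:
    """Weights plus biases of a 1x1 convolution."""
    return c_in * c_out + c_out

def head_params(c_in: int, filter_layers: list[int], num_classes: int) -> int:
    """Parameters in a stack of 1x1 conv layers plus a final per-cell classifier."""
    total = 0
    for filters in filter_layers:
        total += conv1x1_params(c_in, filters)
        c_in = filters
    # Final layer: one output per class, plus one for background.
    total += conv1x1_params(c_in, num_classes + 1)
    return total

C_IN = 96  # hypothetical channel count at the cut point
for layers in ([32], [64], [32, 32]):
    print(layers, head_params(C_IN, layers, num_classes=1))
```

Under these assumptions the head stays small either way; the wider or deeper head mainly adds compute per output cell, which shows up as longer training cycles.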

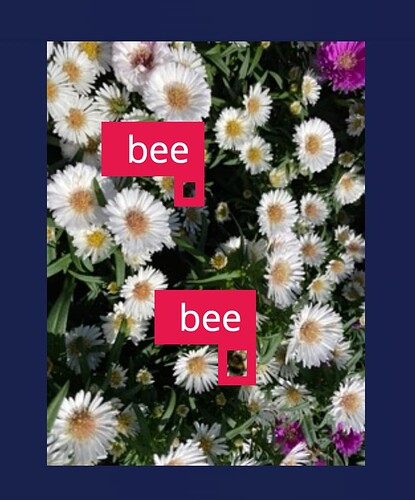

Some of the key ideas for FOMO were developed with bee counting in mind, so it’s fundamentally doable; we just haven’t got to the high-res version yet, sorry… yet…

mat

(example from prior work)


Thank you very much. Indeed, by customizing the layers, e.g. using 2×32 filters, I managed to get a higher accuracy of about 80% and a significantly lower false positive rate of about 8% on the validation set (before, the false positive rate was around 38%). However, in the Model testing tab the accuracy drops to around 57%. To allow for 2×32 filters, I had to lower the number of training cycles so as not to exceed the 20-minute limit.
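When validation and test scores diverge like this, it can help to count true positives, false positives and missed bees on both sets and compare the same metrics side by side. A minimal sketch of precision, recall and F1 from such counts; the counts are hypothetical, `detection_scores` is a made-up helper, and it doesn’t claim to match exactly how Edge Impulse computes its reported numbers.

```python
def detection_scores(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall and F1 from detection counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts, not the numbers from this thread.
print(detection_scores(tp=40, fp=10, fn=10))
```

A large drop in precision on the test set points at false positives on unseen backgrounds, while a drop in recall points at missed bees.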

I added around 50 more images and retrained the model with fewer training cycles; the training accuracy is now about 76% and the testing accuracy around 60%.

However, everything else I’d like to try, like expanding the dataset, increasing the training cycles, retraining the model etc., isn’t possible due to the time limit.

Do you have any suggestions regarding this problem?

Hi @nordcode,

Regarding the 20 min training time limit on the free version of Edge Impulse:


Hi @shawn_edgeimpulse,

Thanks for reaching out. Indeed, it’s for a sustainable cause. At university, we are developing projects that address at least one or two of the UN Sustainable Development Goals, and with our project we want to build an edge device that monitors pollinators (wild bees) on flowers. This would contribute to population monitoring techniques that don’t harm pollinators and that generate data relatively easily and cheaply, without much human interaction. We also use environmental sensors to gather other parameters alongside the bee counts to get a better picture, and we display all the data (bee count, environmental data) on a dashboard. This may, for example, help reveal decreasing or increasing trends in wild bee populations. As it’s a university project, the underlying motive is research, and it is non-commercial.

I hope our intentions came across. Thanks for helping!