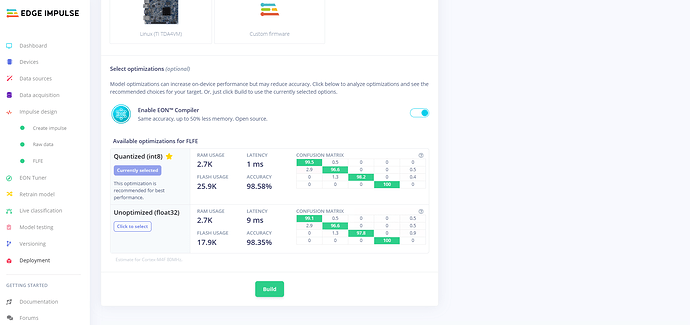

In my project, switching to the quantized (int8) model decreases latency significantly, but it also increases flash usage significantly: the unoptimized (float32) model uses 17.9K of flash, while the quantized (int8) model uses 25.9K. According to the docs, flash usage for the quantized (int8) model is generally smaller than for the unoptimized (float32) one, and I also expected it to be smaller. Why does this happen in my project? Is this result correct? I suspect it is caused by the 2D convolution/pooling layers. What is the underlying reason? Could you explain it further from a methodological perspective?

Hi @GuChunyue,

There are quantization parameters (a scale and a zero point) for every channel, so if your model has many channels relative to its number of weights (e.g. a small model), the quantized model may be bigger than the float version.
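To make the per-channel overhead concrete, here is a rough byte count for a single convolution layer. It is a simplified sketch, not a measurement of any real toolchain. It assumes that the parameters live in a TFLite flatbuffer, where each per-channel scale is a float32 and each zero point is an int64, that int8 models keep biases as int32, and that both the weight and bias tensors carry one scale/zero-point pair per output channel. Alignment, vector headers, and activation parameters are ignored, and the layer shapes are hypothetical, not taken from the model above.

```python
# Simplified per-layer storage estimate; all layer shapes below are hypothetical.
FLOAT32 = 4  # bytes per float32 weight or bias
INT8 = 1     # bytes per int8 weight
INT32 = 4    # bytes per int32 bias in an int8-quantized model
# Assumption: one per-channel quantization pair = float32 scale + int64 zero point,
# mirroring the TFLite flatbuffer schema's QuantizationParameters field types.
PARAM_PAIR = 4 + 8


def conv_bytes_float(k: int, c_in: int, c_out: int) -> int:
    """Bytes for a float32 conv layer: k*k*c_in*c_out weights plus c_out biases."""
    return FLOAT32 * k * k * c_in * c_out + FLOAT32 * c_out


def conv_bytes_int8(k: int, c_in: int, c_out: int) -> int:
    """Bytes for a per-channel int8 conv layer.

    Counts int8 weights, int32 biases, and one scale/zero-point pair per
    output channel for both the weight tensor and the bias tensor.
    """
    weights = INT8 * k * k * c_in * c_out
    biases = INT32 * c_out
    quant_params = 2 * PARAM_PAIR * c_out
    return weights + biases + quant_params


if __name__ == "__main__":
    # A wide 3x3 conv versus a thin 1x1 conv over a single input channel.
    for k, c_in, c_out in [(3, 32, 32), (1, 1, 64)]:
        f = conv_bytes_float(k, c_in, c_out)
        q = conv_bytes_int8(k, c_in, c_out)
        print(f"k={k} c_in={c_in} c_out={c_out}: float32={f} B, int8={q} B")
```

Under these assumptions the wide layer shrinks from 36992 B to 10112 B, while the thin layer grows from 512 B to 1856 B. Because the bias storage is the same in both versions, the difference is `c_out * (3*k*k*c_in - 24)` bytes, so an int8 layer only comes out smaller when `k*k*c_in > 8`. This estimate covers only the model data. Kernel code size is a separate cost.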

Also, the CMSIS-NN library needs to be compiled in, which results in some flash overhead.