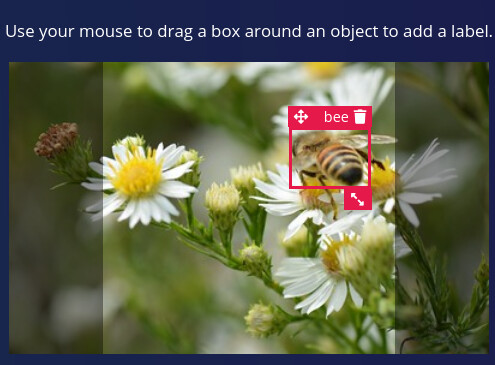

Please have a look at this picture from the training dataset and the box I have drawn around it.

Does this look correct to you?

36610956464_0af824d834_w.jpg

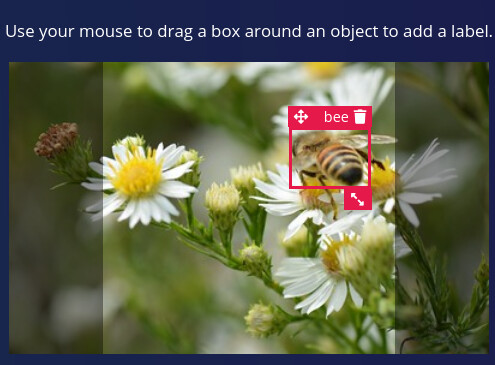


Yep, that box looks perfect. I think your process of doing the initial classification with a deep learning model and then using OpenCV to detect the pollen colors is a good idea. It's always good to use the simplest tools for the job.

@mathijs Thank you. Yesterday it finally worked fine, but today the same problem is back!

Epoch 20 of 20, loss=0.51556426, val_loss=0.6717124

Finished fine tuning

Checkpoint saved

Finished training

Creating SavedModel for conversion…

Finished creating SavedModel

Loading for conversion…

Attached to job 814549…

Converting TensorFlow Lite float32 model…

Converting TensorFlow Lite int8 quantized model with float32 input and output…

Failed to check job status: [object Object]

Job failed (see above)

So I modified the boxes and labels following @dansitu's advice, regenerated the features, and trained again this morning. Then I discovered that I can clone a version of the trained model. That will be very helpful for building future models more efficiently.

If I want to run a classification model in parallel, should I publish a new version of the entire project and restart it with these basic parameters, or can I run it inside the same project? My feeling is to create a new version. In that case, can I import the whole dataset with its boxes and labels?

I am learning a lot right now and trying to follow all the tutorials I need. Sometimes I take a shortcut and ask directly. And I am so happy to receive good support from the developers here.

Thank you, everyone.

And it works

But look at this performance: 10%!!!

I have to improve a lot of things.

I ran a model testing session, but got this error message.

I'll try again later.

Creating job... OK (ID: 814692)

Generating features for Image...

Scheduling job in cluster...

Job started

Creating windows from 165 files...

[ 1/165] Creating windows from files...

[ 65/165] Creating windows from files...

[165/165] Creating windows from files...

[165/165] Creating windows from files...

Error windowing Reduce of empty array with no initial value

TypeError: Reduce of empty array with no initial value

at Array.reduce (<anonymous>)

at createWindows (/app/node/windowing/build/window-images.js:87:71)

at async /app/node/windowing/build/window-images.js:21:13

Application exited with code 1 (Error)

Job failed (see above)

Hello @alcab,

It seems that your testing data does not have any labels, which might be the cause of this error.

When you test your model, the expected (labeled) output is compared with the predicted output to compute an accuracy score.
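To illustrate why the testing data needs labels: an accuracy score only exists when each prediction can be compared with an expected label. This is a minimal sketch using a hypothetical `accuracy` helper (not Edge Impulse's implementation); it raises a clear error on an empty, unlabeled test set instead of failing with an obscure message like the `Reduce of empty array` error in the log above.

```python
from typing import Sequence


def accuracy(expected: Sequence[str], predicted: Sequence[str]) -> float:
    """Return the fraction of samples whose predicted label equals the expected label."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    if not expected:
        # An unlabeled (empty) test set leaves nothing to compare against,
        # so fail with a clear message instead of an obscure error.
        raise ValueError("no labeled test samples: cannot compute accuracy")
    matches = sum(1 for e, p in zip(expected, predicted) if e == p)
    return matches / len(expected)


# Hypothetical labels: three of the four predictions match.
print(accuracy(["bee", "pollen", "bee", "bee"], ["bee", "bee", "bee", "bee"]))
```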

Regards

Did I read Alcab’s output correctly, though, that the 0.1 precision score is measured against the validation set? (And is the validation set split off from the training data at the start?)

From post #30, it appears the model is overfitting (training loss keeps dropping while validation loss flattens out).

I did some work a few years back with bee images and initially framed it as object detection; one big win for me was to keep the bounding boxes the same size (centered on the bee). This can make training much simpler when the objects don’t vary much in size (especially with a low number of classes). But since the object count was high (i.e. loads of bees), I switched to framing it as per-pixel segmentation, but that’s something that isn’t quite supported (as I understand it)… yet!
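Keeping every box the same size and centered on the bee can be applied as a small transformation of existing labels. This is a sketch under assumptions: the `Box` layout (top-left corner plus width and height, in pixels) and the 32-pixel size are hypothetical; pick a size that matches how large the bees typically appear in your images.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Bounding box in pixels: top-left corner (x, y) plus width and height."""
    x: int
    y: int
    width: int
    height: int


def to_fixed_size(box: Box, size: int, image_w: int, image_h: int) -> Box:
    """Replace `box` with a size x size box centered on the original box's center.

    The new box is shifted, if needed, so that it stays inside the image.
    """
    if size > image_w or size > image_h:
        raise ValueError("fixed box size must fit inside the image")
    cx = box.x + box.width / 2
    cy = box.y + box.height / 2
    # Top-left corner of the fixed-size box, clamped to the image bounds.
    x = max(0, min(round(cx - size / 2), image_w - size))
    y = max(0, min(round(cy - size / 2), image_h - size))
    return Box(x, y, size, size)


# A 60x40 box on a 320x240 image becomes a 32x32 box around the same center.
print(to_fixed_size(Box(100, 50, 60, 40), size=32, image_w=320, image_h=240))
```

Clamping rather than cropping keeps every box exactly the same size, which is the point of the trick; the cost is that a box for a bee at the very edge is no longer perfectly centered.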

See http://matpalm.com/blog/counting_bees/ for some more ideas.

Mat

It’s the quantized score, though; the float32 score is 25%. Still not great, but it does indeed seem like overfitting. @dansitu, I wonder if setting a lower learning rate and training longer would work.

@louis Cool, that makes sense. I'll work on it tomorrow, as I have to spend some time with some of my bees in a beautiful blueberry field that is farmed biodynamically.


@mat_kelcey I'm not sure I understand all of your message, but the work you have done sounds great. Bounding boxes increasingly seem to be the key. I will work on them again.

@janjongboom I also support the idea that training longer is the best thing to do. I will collect some more material this afternoon. The new Raspberry Pi should arrive tomorrow. Do you think I can run a live test soon? Is there a minimum score to reach before starting live prediction?

Interesting results, and big thanks to @mat_kelcey for sharing your experience! Mat, you are right in saying that the precision score provided is based on the validation set, which is a 20% split from the training dataset.
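"A 20% split from the training dataset" means something like the sketch below: shuffle the training samples and hold a fifth of them out, so validation loss is measured on images the model never trains on. This is a generic random split; the function name and the seed are hypothetical, and Edge Impulse's own selection of validation samples may differ.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_val_split(
    items: Sequence[T], val_fraction: float = 0.2, seed: int = 42
) -> Tuple[List[T], List[T]]:
    """Shuffle `items` and hold out `val_fraction` of them for validation."""
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed: reproducible split
    n_val = round(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


train, val = train_val_split([f"img_{i}.jpg" for i in range(100)])
print(len(train), len(val))
```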

So @alcab, before spending more time on object detection, I would definitely still think about whether classification or object detection is the right approach. Do you really need to know the location of the bees and pollen grains within each photograph, or is it fine just to know that a particular photo contains a bee that is carrying pollen grains?

Classification requires much less effort in terms of getting the labels right, and the resulting model will be a lot smaller.

I agree with @mat_kelcey and @janjongboom that it appears the model is overfitting. Since object detection is such a new feature we haven’t yet added some of our usual features that help with this, like regularization and data augmentation. But with that in mind, here are some ideas that might be worth exploring to try and get better results:

Train with a higher learning rate (perhaps try 0.35), which can have the side effect of reducing overfitting. But keep an eye on the “loss” and “val_loss” values, since you don’t want the “loss” to go significantly lower than “val_loss”. If that happens, run training again but reduce the number of epochs to the point it was at before the loss numbers significantly diverged.
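The "reduce the number of epochs" advice can be made concrete with a small script. This is a sketch under assumptions: it parses lines in the `Epoch N of M, loss=X, val_loss=Y` format shown earlier in this thread (plain decimals only), and both the `max_gap` threshold of 0.1 and the five-epoch log are hypothetical values, not an Edge Impulse rule.

```python
import re
from typing import List, Sequence, Tuple

# Matches lines such as "Epoch 20 of 20, loss=0.51556426, val_loss=0.6717124".
EPOCH_LINE = re.compile(r"Epoch \d+ of \d+, loss=([\d.]+), val_loss=([\d.]+)")


def parse_losses(log: str) -> Tuple[List[float], List[float]]:
    """Extract per-epoch training and validation loss from training output."""
    loss: List[float] = []
    val_loss: List[float] = []
    for match in EPOCH_LINE.finditer(log):
        loss.append(float(match.group(1)))
        val_loss.append(float(match.group(2)))
    return loss, val_loss


def epochs_before_divergence(
    loss: Sequence[float], val_loss: Sequence[float], max_gap: float = 0.1
) -> int:
    """Return how many epochs ran before val_loss exceeded loss by more than max_gap.

    If the gap never exceeds max_gap, every epoch is kept.
    """
    if len(loss) != len(val_loss):
        raise ValueError("loss and val_loss must have the same length")
    for epoch, (train, val) in enumerate(zip(loss, val_loss)):
        if val - train > max_gap:
            return epoch  # epochs completed before the first divergent one
    return len(loss)


log = """Epoch 1 of 5, loss=0.9, val_loss=0.95
Epoch 2 of 5, loss=0.7, val_loss=0.76
Epoch 3 of 5, loss=0.55, val_loss=0.62
Epoch 4 of 5, loss=0.45, val_loss=0.66
Epoch 5 of 5, loss=0.4, val_loss=0.67"""
print(epochs_before_divergence(*parse_losses(log)))
```

An absolute gap is the simplest criterion to read off the log; a relative gap or a "validation loss stopped improving for k epochs" rule are equally reasonable choices.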

Your training dataset appears to have a lot more images containing just bees than images containing bees with pollen. You might get better results if you “balance” these classes. Try removing images that have just bees until you have approximately the same number of both types of images. If most of your images have just bees, the model might learn during training that the pollen boxes don’t matter.
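Balancing by removing bee-only images (random undersampling) could look like the sketch below. The label names `bee` and `pollen` and the filename-to-labels mapping are assumptions; if your pollen boxes are labeled by color instead, adjust the check accordingly.

```python
import random
from typing import Dict, List


def balance_images(images: Dict[str, List[str]], seed: int = 0) -> Dict[str, List[str]]:
    """Drop randomly chosen bee-only images until they are no more numerous
    than images with pollen.

    `images` maps each filename to the labels of the boxes in that image.
    An image counts as "with pollen" if any of its boxes is labeled "pollen".
    """
    with_pollen = sorted(f for f, labels in images.items() if "pollen" in labels)
    bee_only = sorted(f for f, labels in images.items() if "pollen" not in labels)
    if len(bee_only) > len(with_pollen):
        bee_only = random.Random(seed).sample(bee_only, len(with_pollen))
    keep = set(with_pollen) | set(bee_only)
    return {f: labels for f, labels in images.items() if f in keep}


dataset = {
    "a.jpg": ["bee"],
    "b.jpg": ["bee"],
    "c.jpg": ["bee"],
    "d.jpg": ["bee", "pollen"],
}
print(sorted(balance_images(dataset)))
```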

Since you are training a model to count and classify pollen, does your model even need to know about the bees? You could try removing the bee labels entirely from your dataset and just train a model to detect the pollen grains.
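Removing the bee labels is a simple filter over the dataset. A minimal sketch, assuming a hypothetical in-memory representation where each image maps to a list of `(label, x, y, width, height)` tuples; adapt it to whatever format your exported labels use.

```python
from typing import Dict, List, Tuple

# Hypothetical label format: (label, x, y, width, height) in pixels.
BoundingBox = Tuple[str, int, int, int, int]


def drop_label(
    dataset: Dict[str, List[BoundingBox]], label: str = "bee"
) -> Dict[str, List[BoundingBox]]:
    """Return a copy of `dataset` with every box carrying `label` removed.

    Images whose only boxes had that label are kept with an empty box list.
    """
    return {
        filename: [box for box in boxes if box[0] != label]
        for filename, boxes in dataset.items()
    }


labels = {"hive_01.jpg": [("bee", 40, 30, 64, 48), ("pollen", 70, 55, 8, 8)]}
print(drop_label(labels))
```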

Failing that, and if you still want to proceed with object detection, I’d definitely recommend trying @mat_kelcey’s approach of re-labelling the bees (again) with a small, regularly sized box located at the center of each bee. @janjongboom maybe we should make it easier in the UI to create a box with specific dimensions?

Note that if you go to Dashboard > Export, you can download your full dataset with all labels (and import it back with the CLI). This is useful if you’re going to experiment with removing some data.

FYI, we have fixed a memory leak in the object detection training pipeline, so the exit code 137 (out-of-memory) errors should be a thing of the past!

Hi!

I’ve started looking at the exact same problem of classifying what kind of pollen a bee is bringing into the hive. It seems to me that this is quite a computationally demanding task, and, as mentioned here and in the object detection tutorial, something like a Raspberry Pi is recommended. However, it is essential for the project I’m working on to keep power consumption to a minimum.

Will it be possible to do pollen detection and classification on an nRF5340, or will this task be way too demanding for a board like that?

Mathias

Hello @mathias,

You could run some image classification (not object detection) on the nRF5340, but with MobileNetV1 the images would be limited to a maximum size of 96x96; anything larger would be too demanding for this board. So I guess it would be hard to get good accuracy for this project using this board.
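For a rough sense of why resolution is the limiting factor: memory for the input image alone grows with the square of its side, so a 320x320 input is about 11 times larger than a 96x96 one (102,400 vs 9,216 pixels). The arithmetic below covers only the input tensor of an RGB image; the model's intermediate activations, which also grow with resolution, come on top. For scale, the nRF5340's application core has 512 KB of RAM. The helper name is hypothetical.

```python
def input_tensor_bytes(width: int, height: int, channels: int, bytes_per_value: int = 1) -> int:
    """Bytes needed to hold one input image (1 byte per value for int8, 4 for float32)."""
    return width * height * channels * bytes_per_value


for side in (96, 160, 320):
    int8 = input_tensor_bytes(side, side, 3)
    float32 = input_tensor_bytes(side, side, 3, bytes_per_value=4)
    print(f"{side}x{side} RGB: {int8} bytes int8, {float32} bytes float32")
```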

Louis

Thank you so much for your quick response, @louis!

What you’re saying sounds reasonable. Do you think it would be possible to do any sort of image classification with Edge Impulse on the nRF5340, or will MobileNetV1 be my only alternative? I’m quite new to Edge Impulse, but so far it seems absolutely awesome, so I would really prefer to use it. As for MobileNetV1, this is the first time I’ve ever heard of it.

How is your project progressing, @alcab? Have you found any cool and relevant datasets to use, or do you collect most of the data yourself?

I think you would be able to classify whether or not there is a bee in a picture with the nRF52840, but classifying what kind of pollen the bee is bringing into the hive is a completely different story :D.

One thing I have seen before is detecting hive occupancy from the sound of the bees. This could be low-power, but it probably does not match your use case.

Regards,

I guess what you’re saying is correct. I think I have to look a bit more into beehive-related use cases that combine Edge Impulse with low power.

Thanks!

Thank you for the advice! I will do that!

Hello @mathias,

FYI, we recently released FOMO, a brand-new approach to running object detection models on constrained devices: https://www.edgeimpulse.com/blog/announcing-fomo-faster-objects-more-objects

I thought you might be interested in seeing how it performs for your use case.

Regards,

Louis

@mathias @alcab and others on this thread - we’ve upped the memory limits for all jobs to be less stringent (they can go over memory limits without being killed immediately) and this should resolve all OOMKilled issues. We’re monitoring actively to see if any others happen and can tweak the limits if that’s the case.