I was attempting to deploy a pretrained model that I created outside of Edge Impulse. I came across this link and was wondering if this link works and what to do regarding the API keys.

Please provide help on this topic!

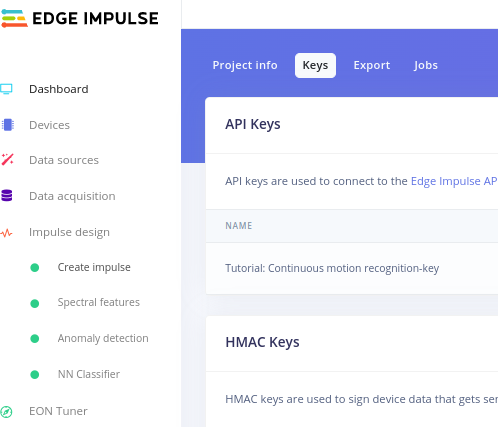

The API keys are accessed on the Studio Dashboard…

Will I get access to the API keys when I create a new project or from a preexisting project?

Each project has a unique API key. You will obtain a unique API key if you create a new project.

Check the Edge Impulse API for more information.
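As a rough illustration of how a project API key is used, here is a minimal Python sketch that passes the key in an `x-api-key` header when calling the Edge Impulse Studio API. The base URL `https://studio.edgeimpulse.com/v1/api` and the `/{projectId}` project-info endpoint are assumptions on my part, so check them against the API reference. The project ID and the `ei_...` key shown are placeholders, not real values.

```python
import json
import urllib.request

# Assumed base URL for the Studio API; verify against the API reference.
STUDIO_API = "https://studio.edgeimpulse.com/v1/api"


def build_project_request(project_id: int, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for a project's info.

    The key is sent in the x-api-key header; each project has its own key.
    """
    url = f"{STUDIO_API}/{project_id}"
    headers = {"x-api-key": api_key, "Accept": "application/json"}
    return urllib.request.Request(url, headers=headers, method="GET")


def fetch_project(project_id: int, api_key: str) -> dict:
    """Send the request and decode the JSON response (needs network access)."""
    req = build_project_request(project_id, api_key)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholder values: replace with your own project ID and API key.
    request = build_project_request(12345, "ei_your_project_api_key")
    print(request.full_url)
```

Keeping the request construction separate from the network call makes it easy to check which key and URL you are sending before actually hitting the API.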

Note: you can bring a pre-trained model into the training pipeline; see Bring your own model.

However, it is not clear what you are trying to do. Do you already have a TFLite model? Maybe this can help you come up with a solution: TensorFlow Lite Tutorial Part 3: Speech Recognition on Raspberry Pi

What if I already have a pretrained TFLite model? Also, which method should I follow to properly bring my own model into the training pipeline?

Check:

Is this a model you have developed yourself?

If yes, the only approach is to retrain the model in EI Studio, for example using Expert mode.

@Joeri I’m sure I’m missing something, but why would a pretrained model have to be retrained in EI Studio? Isn’t that the point of a pretrained model? Asking because I’m trying to do something similar. Thanks.

You are correct: if you have already trained a model, it makes no sense to retrain it. But if I understand correctly, the question was about importing pre-trained TFLite model files into Edge Impulse. As far as I know, importing a pre-trained TFLite model is not possible (reference: How would I run a pre-trained tflite model in edge impulse environment?).

As far as I can see, there are two solutions. If you built your model architecture on your local machine, you can use Expert mode and retrain it, or use Bring your own model to load your transfer learning model. See also Bring Your Own Model Into Edge Impulse with ONNX and Edge Impulse Imagine 2022 Day 1: Edge Impulse Demos and Features with Jan Jongboom.

@louis, am I correct that Bring your own model is the way to go?

So if I want to deploy a model using the Edge Impulse platform, I would need to create a custom block and then use that? Is BYOM the most accurate approach, and how do you use it? Do you create a Docker container that stores the data, code, and weights? Can someone please help me with this?

@saxenapratham6 You already have a TFLite model and your goal is to deploy it on a Raspberry Pi. This may point you toward a solution: TensorFlow Lite Speech Recognition Demo. In part 3, @ShawnHymel explains how to perform inference on the RPi.
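For running an existing .tflite file directly on the Pi, outside Edge Impulse, a generic sketch with the standard TFLite interpreter might look like the following. It assumes a model with a single input and a single output that is either float32 or full-integer int8, and it tries `tflite_runtime` first (the lightweight package often used on a Raspberry Pi) before falling back to full TensorFlow. This is not the code from the tutorial above; `run_tflite`, `quantize`, and `dequantize` are hypothetical helper names.

```python
def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to int8 with TFLite's affine scheme: q = round(x / scale) + zero_point."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Inverse mapping back to floats: x = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]


def run_tflite(model_path, features):
    """Run one inference on a flat list of input features (hypothetical helper).

    Assumes one input and one output tensor, each float32 or int8.
    """
    import numpy as np

    try:
        # Lightweight runtime commonly installed on a Raspberry Pi.
        from tflite_runtime.interpreter import Interpreter
    except ImportError:
        # Fall back to the interpreter bundled with full TensorFlow.
        import tensorflow as tf
        Interpreter = tf.lite.Interpreter

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    inp = interpreter.get_input_details()[0]
    out = interpreter.get_output_details()[0]

    # Quantize inputs only if the model expects int8.
    in_scale, in_zp = inp["quantization"]
    if inp["dtype"] == np.int8:
        data = np.array(quantize(features, in_scale, in_zp), dtype=np.int8)
    else:
        data = np.array(features, dtype=np.float32)

    interpreter.set_tensor(inp["index"], data.reshape(inp["shape"]))
    interpreter.invoke()
    result = interpreter.get_tensor(out["index"]).flatten().tolist()

    # Convert int8 outputs back to real values (e.g. class scores).
    out_scale, out_zp = out["quantization"]
    if out["dtype"] == np.int8:
        result = dequantize(result, out_scale, out_zp)
    return result
```

Usage would be something like `scores = run_tflite("model.tflite", features)`, where `features` is already preprocessed the same way as during training (for audio, e.g. the same MFCC settings). The preprocessing has to match, or the scores will be meaningless.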